10 Most Popular Supervised Learning Algorithms In Machine Learning

Supervised learning algorithms are among the most widely used approaches in machine learning. Their popularity comes from their ability to make accurate predictions across a wide range of problems.

However, their effectiveness depends on the quality of the training data and the choice of algorithm and model architecture.

In this guide, you'll learn the basics of supervised learning algorithms and techniques, and how they are applied to solve real-world problems.


We will also explore 10 of the most popular supervised learning algorithms and discuss how they could be used in your future projects. Before we dive further, let’s see the table of contents for this article.

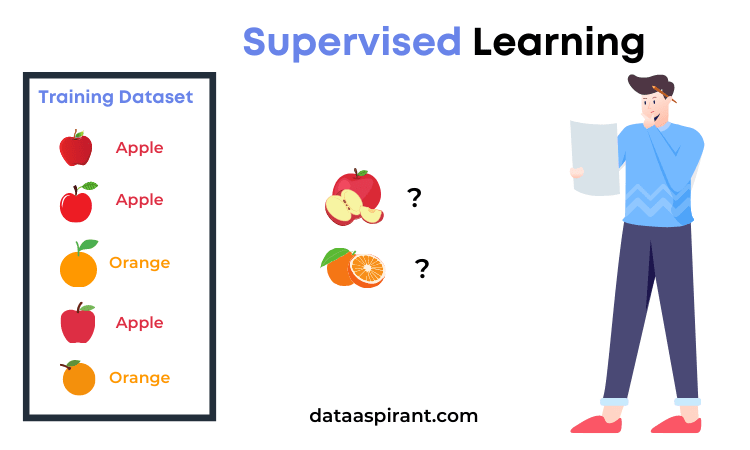

What is Supervised Learning?

Supervised learning is a type of machine learning where a set of labelled data is used to train a model for future predictions. Labelled data pairs input features with known output values (labels), allowing the algorithm to learn how inputs map to outputs.

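Supervised learning can be used to perform classification or regression tasks. Standard supervised learning algorithms include linear regression, logistic regression, support vector machines (SVM), decision trees, random forests, k-nearest neighbours (kNN) and naive Bayes.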

All these techniques vary in complexity, but all rely on labelled data in order to produce prediction results.

Supervised learning can be used in a wide variety of tasks, such as:

- Image and object recognition: This involves training a model to identify and classify images or objects based on labelled data.

- Spam filtering: In this application, a model is trained to distinguish between spam and non-spam emails based on labelled data.

- Sentiment analysis: This involves training a model to analyze text and identify the sentiment behind it, such as positive, negative, or neutral.

- Fraud detection: In this application, a model is trained to detect fraudulent transactions based on labelled data.

- Credit scoring: This involves training a model to predict a borrower's creditworthiness based on labelled data.

- Medical diagnosis: A model can be trained to diagnose diseases or medical conditions based on labelled data from medical records or imaging data.

- Speech recognition: This involves training a model to recognize and transcribe spoken words or phrases based on labelled data.

- Recommendation systems: In this application, a model is trained to recommend products or services to users based on their preferences and past behaviour.

- Weather forecasting: A model can be trained to predict weather patterns and provide forecasts based on labelled historical data.

- Stock price prediction: This involves training a model to predict stock prices based on historical data and other relevant factors.

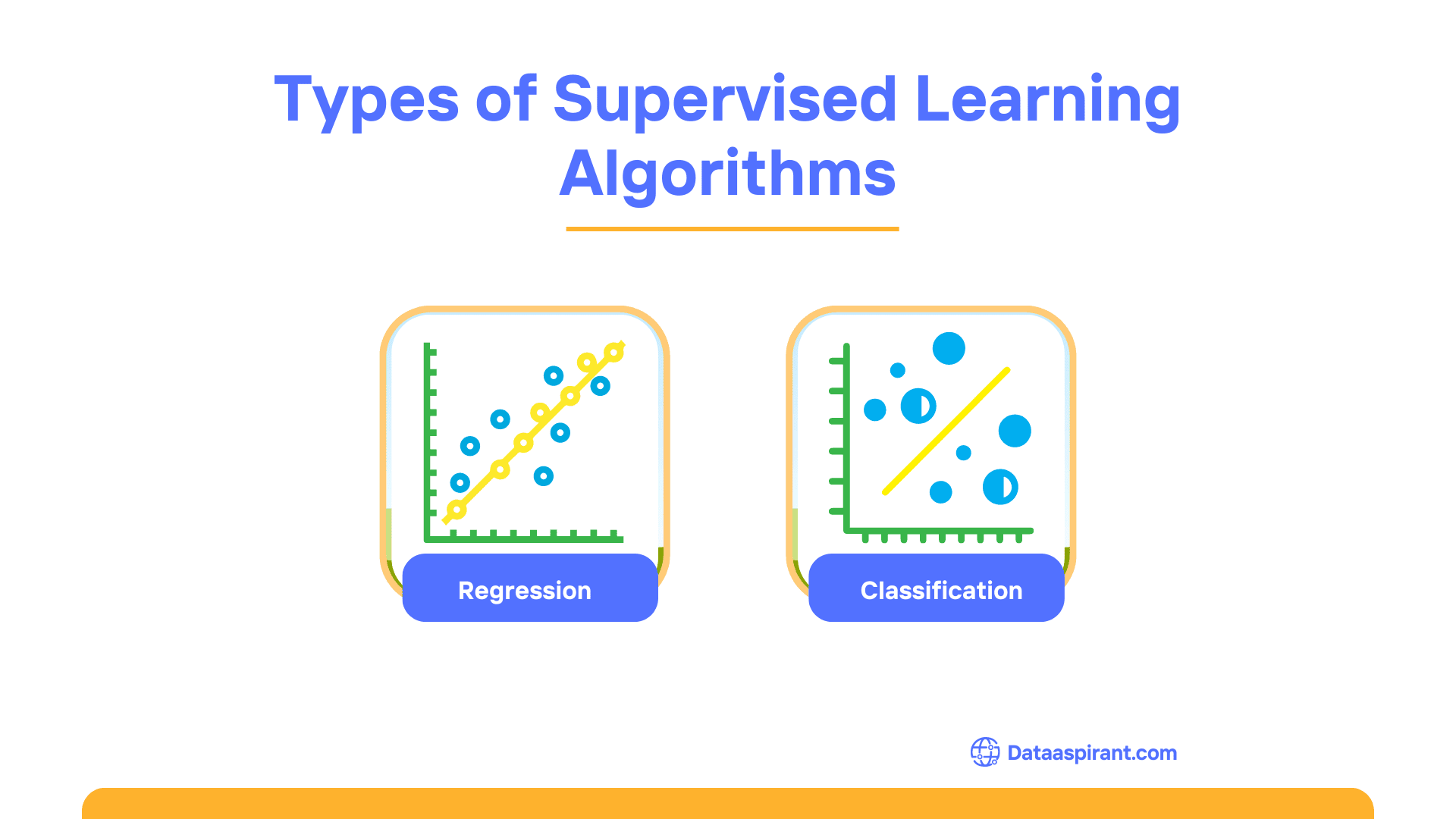

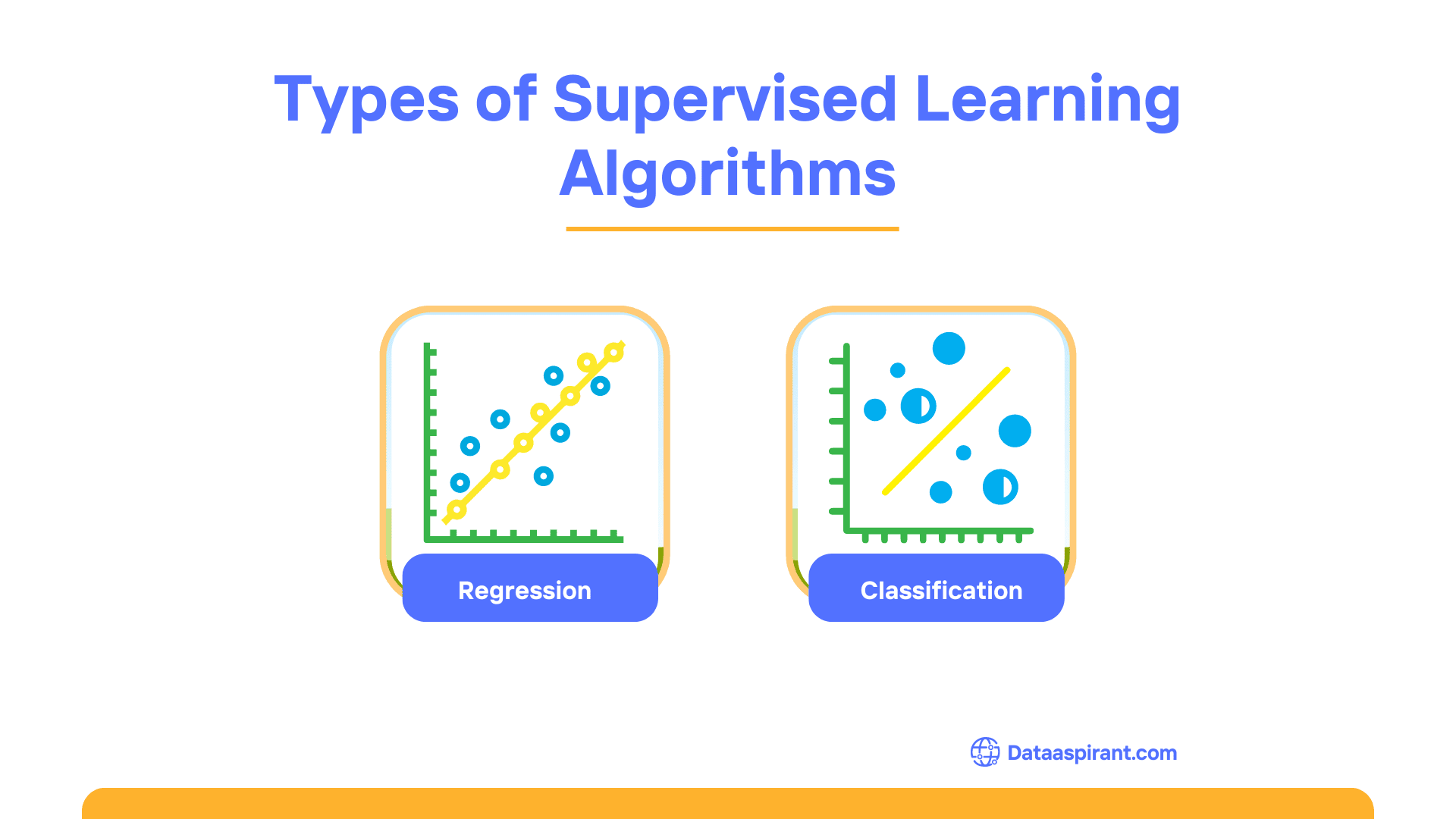

Different Types of Supervised Learning Algorithms

Supervised learning algorithms can be further divided into two categories depending on the type of output they produce.

- Regression Algorithms

- Classification Algorithms

Regression Algorithms

Regression algorithms are used to predict a continuous numerical value, such as a house's price or a day's temperature. Examples include linear regression, as well as the regression variants of decision trees and random forests.

Classification Algorithms

Classification algorithms are used to predict a categorical or discrete value, such as whether an email is spam. Examples include logistic regression, support vector machines (SVM), k-nearest neighbours (kNN) and naive Bayes.

Popular Supervised Learning Algorithms

Each of the algorithms below suits a different purpose and offers its own advantages in terms of accuracy and scalability.

Understanding which best fits your data set will help you make more informed decisions when using machine learning to predict outcomes.

Knowing which supervised learning algorithm to use will depend on the size, complexity and type of data you have available.

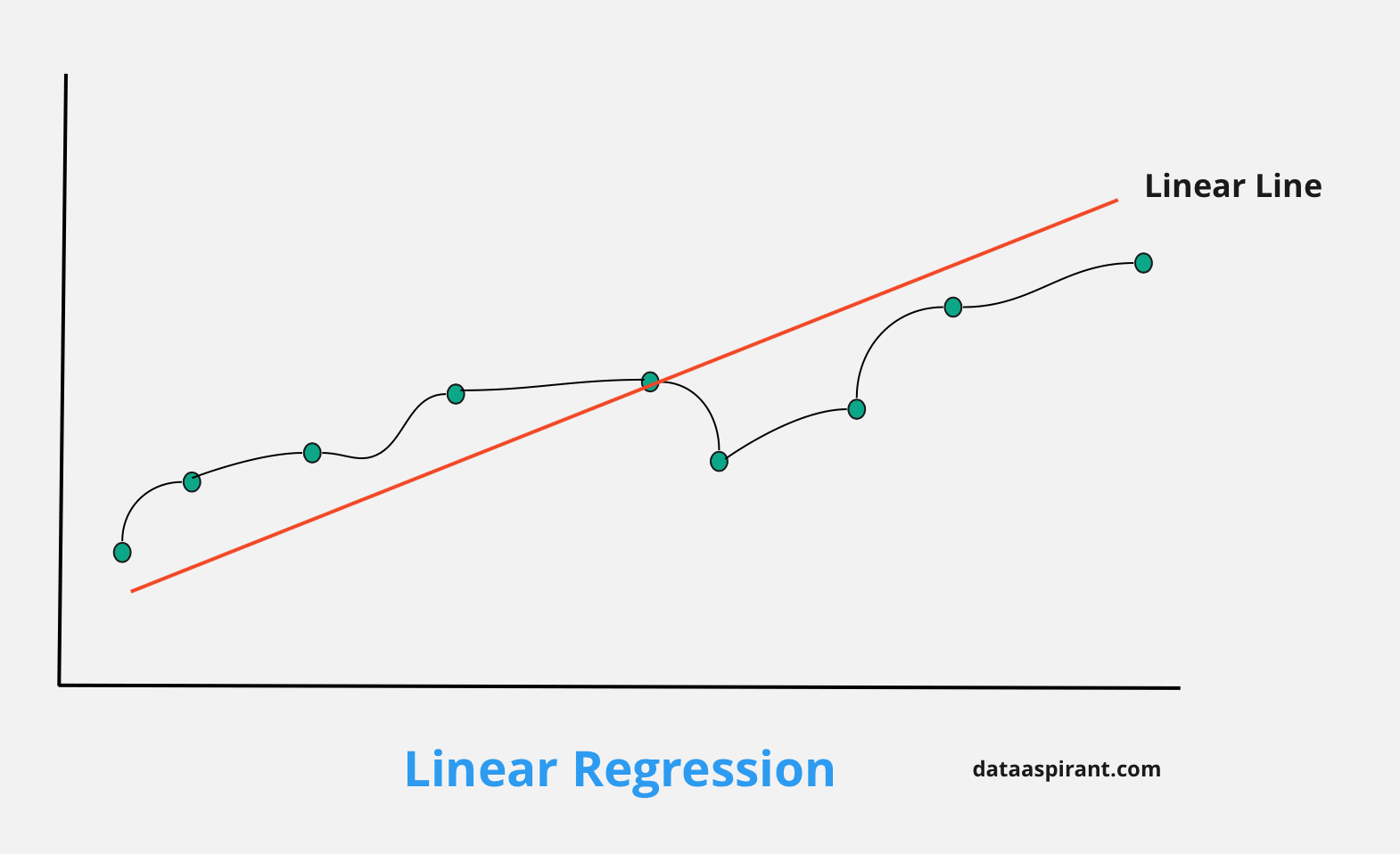

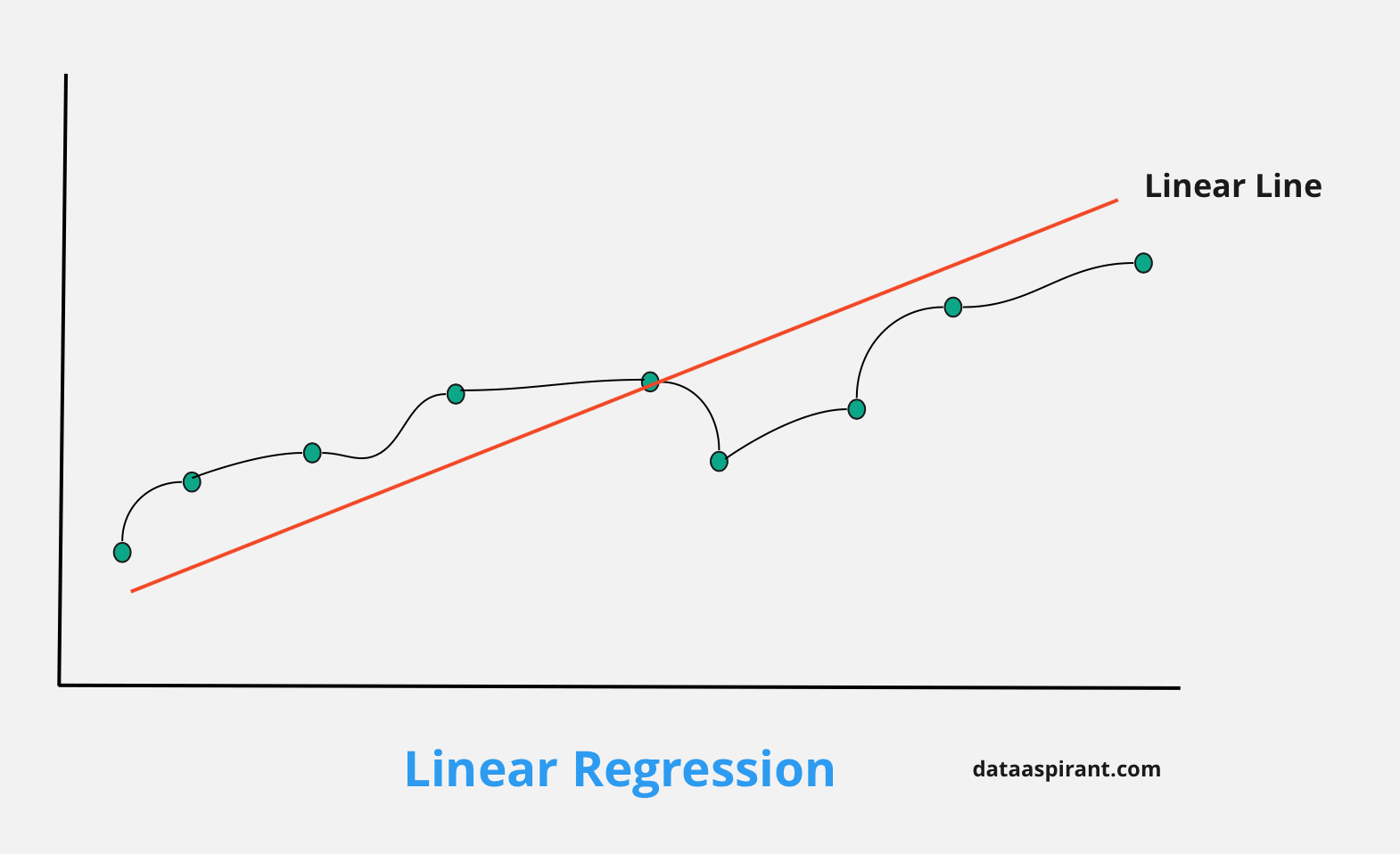

Linear Regression

Linear regression is used to identify the linear relationship between a dependent variable and one or more independent variables.

It is commonly used to predict the value of a continuous variable from one or more input features, for example estimating a house's price from its size and location.

This algorithm works best when the relationship between the features and the target is approximately linear, so it is essential to understand your data and the assumptions of linear regression (linearity, independent errors and constant error variance) before applying this model.

Linear regression models can be trained using least squares estimation or gradient descent. To obtain accurate results, it is important to check that the relationship between the variables really is linear and to identify potential outliers in your data set, because squaring the errors makes least squares sensitive to them.
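Both training approaches can be sketched for a single feature in plain Python. This is a minimal illustration rather than any library's API: `fit_least_squares` and `fit_gradient_descent` are hypothetical helper names, and the toy data are made up so that they lie exactly on y = 2x + 1.

```python
# Minimal sketch: fit y ≈ w * x + b for a single feature, two ways.

def fit_least_squares(xs, ys):
    """Closed-form ordinary least squares for one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the fitted line passes through the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / sxx
    b = mean_y - w * mean_x
    return w, b


def fit_gradient_descent(xs, ys, lr=0.05, epochs=5000):
    """Minimise mean squared error by repeatedly stepping against its gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
print(fit_least_squares(xs, ys))     # (2.0, 1.0)
print(fit_gradient_descent(xs, ys))  # approximately (2.0, 1.0)
```

The closed form gives the answer in one pass; gradient descent reaches the same line iteratively, which is the approach used when a closed-form solution is impractical, for example with very many features or with losses that have no closed form.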

Regularization can also improve predictions by reducing overfitting and improving the model's generalisation. Lasso (L1) regression and ridge (L2) regression are two common regularized variants of linear regression.
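For a single feature, both regularized variants have closed-form solutions once the data are centred, which makes the effect of the penalty easy to see. The sketch below assumes the penalties are written as lam * w^2 (ridge) and lam * |w| (lasso), with `lam` a hypothetical user-chosen strength and the intercept left unpenalised; libraries may scale these terms differently.

```python
# Minimal sketch of ridge (L2) and lasso (L1) for one feature.

def _centred_sums(xs, ys):
    """Means of x and y, plus the centred cross-product and sum of squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return mean_x, mean_y, sxy, sxx


def fit_ridge(xs, ys, lam):
    """Minimise sum((y - w*x - b)^2) + lam * w^2."""
    mean_x, mean_y, sxy, sxx = _centred_sums(xs, ys)
    w = sxy / (sxx + lam)  # the penalty shrinks the slope towards zero
    return w, mean_y - w * mean_x


def fit_lasso(xs, ys, lam):
    """Minimise sum((y - w*x - b)^2) + lam * |w| via soft-thresholding."""
    mean_x, mean_y, sxy, sxx = _centred_sums(xs, ys)
    shrunk = max(abs(sxy) - lam / 2, 0.0)  # can become exactly zero
    w = (shrunk if sxy >= 0 else -shrunk) / sxx
    return w, mean_y - w * mean_x


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
print(fit_ridge(xs, ys, lam=0.0))   # (2.0, 1.0): no penalty, plain least squares
print(fit_ridge(xs, ys, lam=10.0))  # (1.0, 3.0): slope shrunk
print(fit_lasso(xs, ys, lam=50.0))  # (0.0, 5.0): slope driven to zero
```

Ridge only shrinks the slope, while lasso can set it exactly to zero, which is why lasso is also used to drop uninformative features.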

The output from a linear regression model is easy to interpret, because each coefficient states how much the prediction changes for a one-unit change in its feature (holding the others fixed), which supports decision-making with little added complexity.

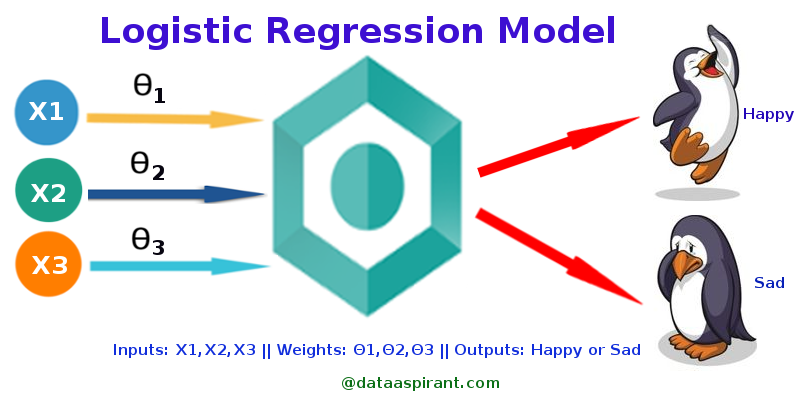

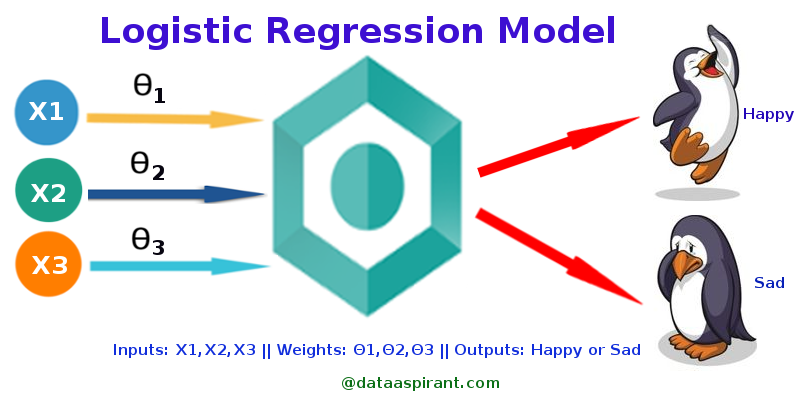

Logistic Regression

Logistic regression is a type of predictive modelling algorithm used for classification tasks. It is used to estimate the probability of an event occurring based on the values of one or more predictor variables.

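The predicted probabilities always lie between 0 and 1, because the model passes a weighted sum of the features through the sigmoid (logistic) function. To obtain a class label, the probability is compared with a threshold (commonly 0.5): values at or above it map to 1, and values below it map to 0.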
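A minimal sketch of logistic regression for one feature, assuming training by plain gradient descent on the average log-loss (libraries typically use more sophisticated solvers). The helper names and the hours-studied data are hypothetical.

```python
import math


def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the average log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log-loss, the gradient is mean((p - y) * x) for w and mean(p - y) for b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return the predicted probability and the 0/1 label obtained by thresholding it."""
    p = sigmoid(w * x + b)
    return p, int(p >= threshold)


# Hypothetical data: hours studied -> passed (1) or failed (0); the classes overlap slightly.
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic(hours, passed)
print(predict(w, b, 1.0))  # low probability, label 0
print(predict(w, b, 4.0))  # high probability, label 1
```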

Logistic regression models are commonly used for binary classification problems, such as predicting whether a customer will subscribe to a service. However, logistic regression can also be extended to multiclass classification, for example with a one-vs-rest scheme or multinomial (softmax) logistic regression.

It is easy to interpret and compare the results of this technique, since it provides a coefficient per feature and a clear estimate of the probability that an observation belongs to one class or another.

An advantage of logistic regression compared to other supervised machine learning algorithms is its simplicity and interpretability.

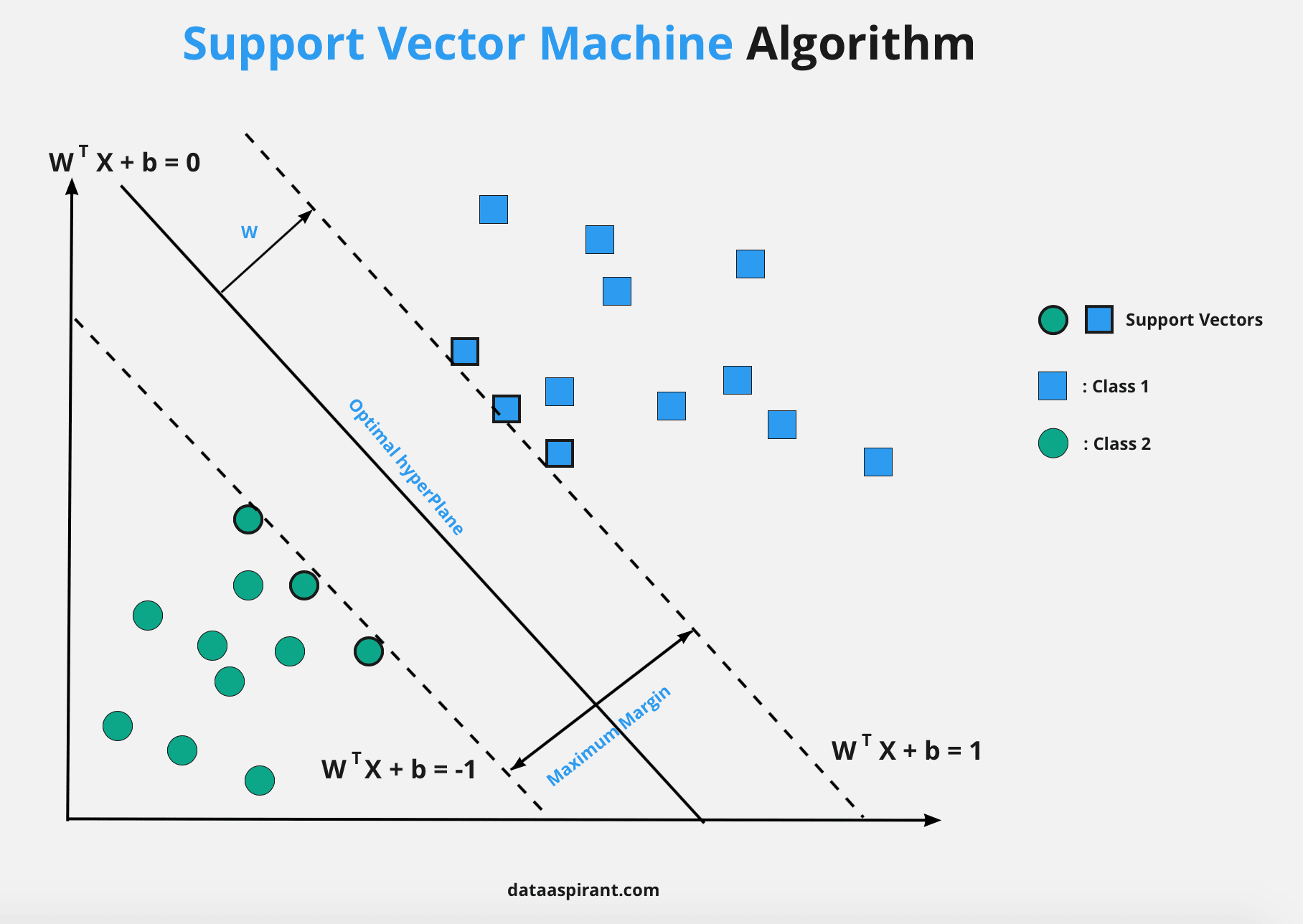

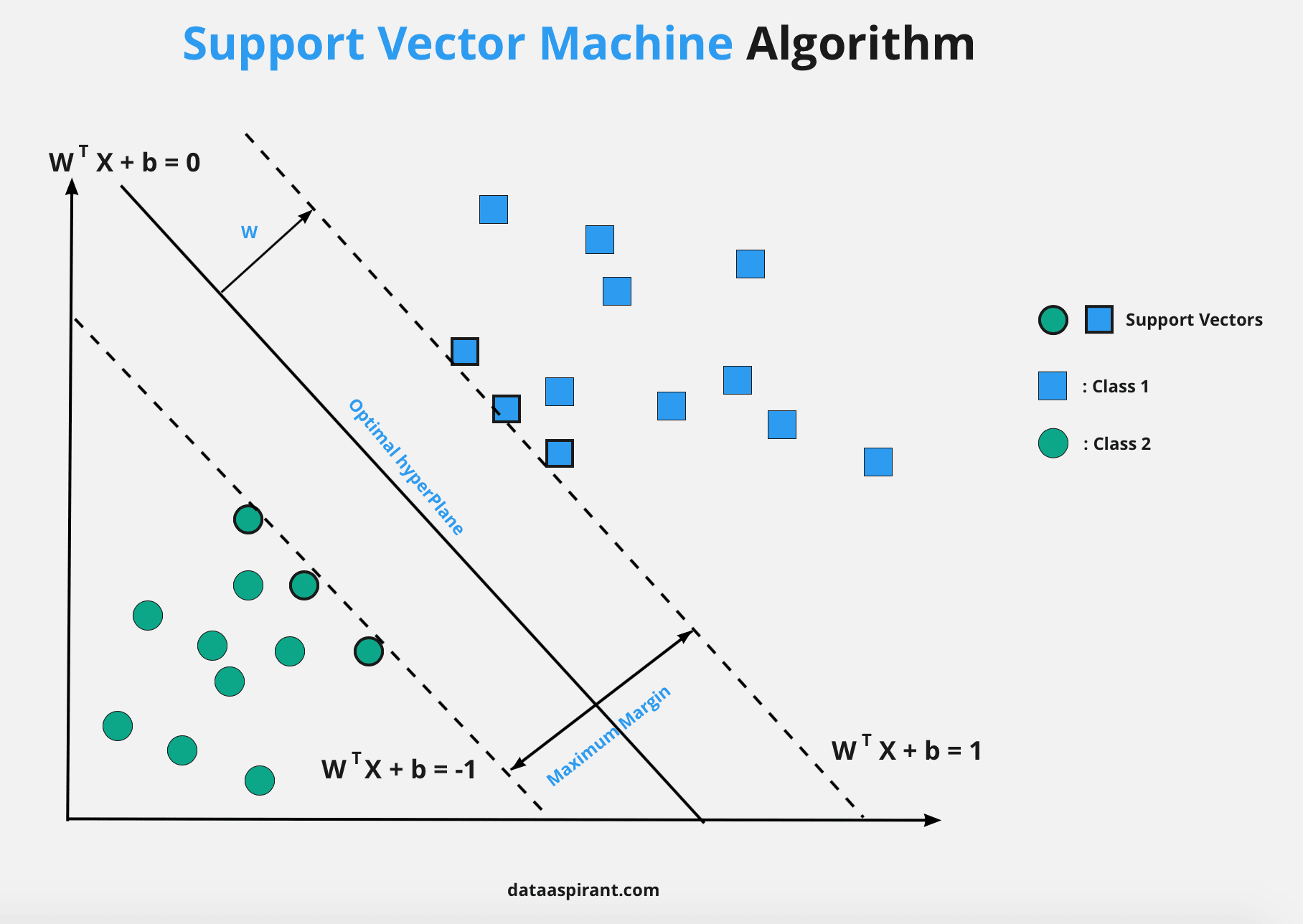

Support Vector Machines (SVM)

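Support Vector Machines (SVM) are robust algorithms that find the boundary (hyperplane) separating the classes with the widest possible margin. By using a kernel, they can implicitly map data into a higher-dimensional space and draw a linear boundary there, which corresponds to a non-linear boundary in the original feature space.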
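To make the margin idea concrete, here is a minimal linear SVM (no kernel) trained by subgradient descent on the regularized hinge loss. This is an illustrative substitute for the specialised solvers that SVM libraries typically use; the function names, hyperparameters (`lam`, `lr`, `epochs`) and toy points are assumptions.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=2000):
    """Linear soft-margin SVM via full-batch subgradient descent.

    Minimises lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))), with labels in {-1, +1}.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    n = len(points)
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for x, y in zip(points, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score < 1:  # point violates the margin, so its hinge loss is active
                for j, xj in enumerate(x):
                    grad_w[j] -= y * xj / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Report which side of the hyperplane w.x + b = 0 the point falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


points = [(1, 1), (2, 1), (1, 2), (5, 5), (6, 5), (5, 6)]
labels = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(points, labels)
print([predict(w, b, p) for p in points])  # [-1, -1, -1, 1, 1, 1]
```

Only the points that violate the margin contribute to each update, which is why the final boundary is determined by the few points closest to it (the support vectors).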

SVMs are often used for text classification or image recognition because they perform well on high-dimensional data, such as word-count vectors or pixel features.

However, training them can require significant computing power on large datasets. They also offer limited interpretability, which can be a drawback when you need to understand why certain decisions were made.

Nevertheless, SVMs have several advantages over other supervised learning algorithms. For instance, their regularized, maximum-margin formulation makes them relatively tolerant of noise and irrelevant features.

Furthermore, SVMs handle data sets with large feature vectors effectively, and the kernel trick lets them learn non-linear boundaries without explicitly computing the high-dimensional mapping.

Additionally, the soft-margin formulation lets them cope with overlap between classes: points that fall on the wrong side of the margin are penalised rather than forbidden, so a few outliers do not have to dictate the boundary.

With so many benefits, it’s no surprise that SVMs are among today's most popular and powerful supervised learning algorithms.

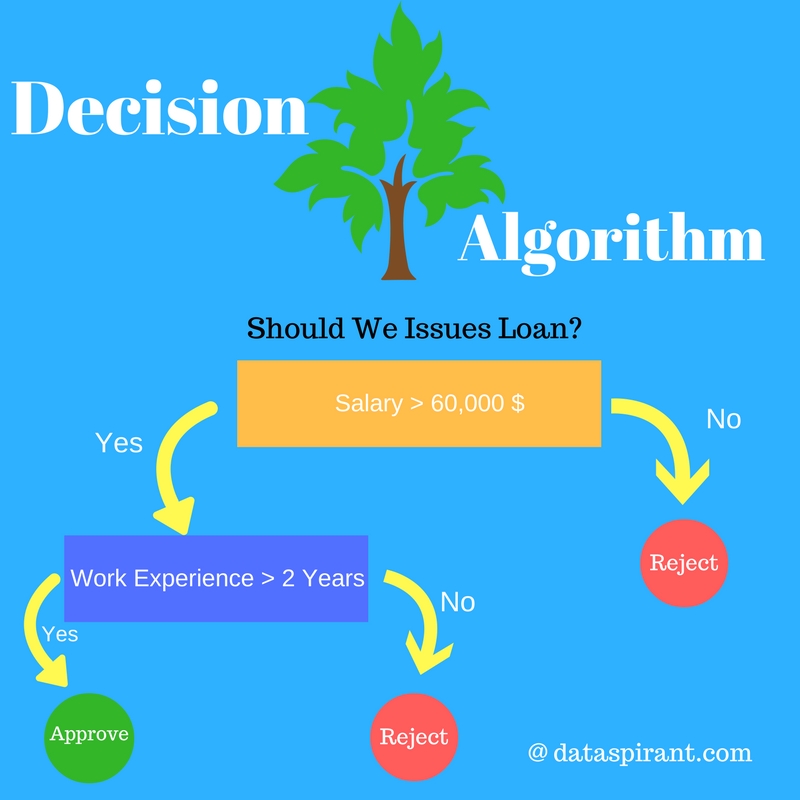

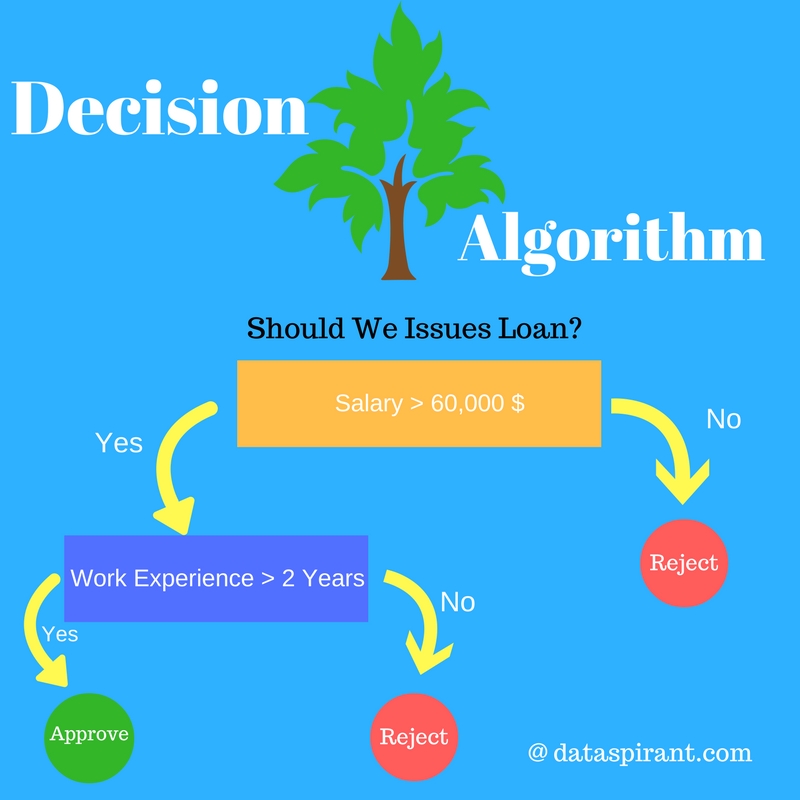

Decision Trees

Decision Trees are popular supervised learning algorithms for classification and regression tasks that use a "tree" structure to represent decisions and their associated outcomes.

Each internal node of the tree tests an attribute, each branch represents an outcome of that test, and each leaf holds a prediction.

So, when a new data point arrives, it travels down the tree, taking the branch that matches its feature value at each node until it reaches a leaf.

This makes decision trees well suited to prediction problems, because they can quickly determine which category a piece of data belongs to based on rules learned from the training samples.
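The sketch below grows a small classification tree by choosing, at each node, the feature and threshold that minimise weighted Gini impurity, then predicts by walking a data point from the root to a leaf. Gini impurity is one common splitting criterion (entropy is another); the helper names, the `max_depth` default and the toy data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means the node is pure)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (weighted impurity, feature, threshold) of the best split, or None if none exists."""
    best = None
    for f in range(len(rows[0])):
        values = sorted(set(r[f] for r in rows))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate threshold between consecutive distinct values
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Recursively split until nodes are pure, max_depth is reached, or no split helps."""
    split = best_split(rows, labels)
    if gini(labels) == 0 or depth == max_depth or split is None or split[0] >= gini(labels):
        return {"leaf": Counter(labels).most_common(1)[0][0]}
    _, f, t = split
    left = [i for i, r in enumerate(rows) if r[f] <= t]
    right = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
        "right": build_tree([rows[i] for i in right], [labels[i] for i in right], depth + 1, max_depth),
    }


def predict(node, row):
    """Walk from the root, taking the branch that matches the row's value at each node."""
    while "leaf" not in node:
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]


# Hypothetical data: label 1 when either feature is large.
rows = [[1, 1], [2, 2], [1, 6], [2, 7], [6, 1], [7, 7]]
labels = [0, 0, 1, 1, 1, 1]
tree = build_tree(rows, labels)
print(predict(tree, [1.5, 1.5]), predict(tree, [8, 2]), predict(tree, [1, 9]))  # 0 1 1
```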

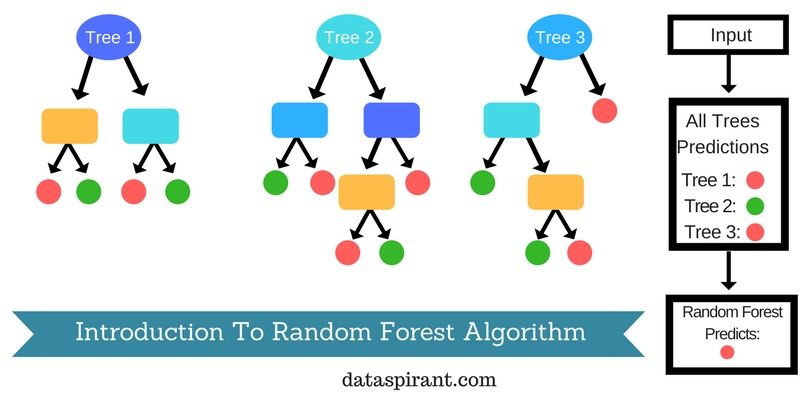

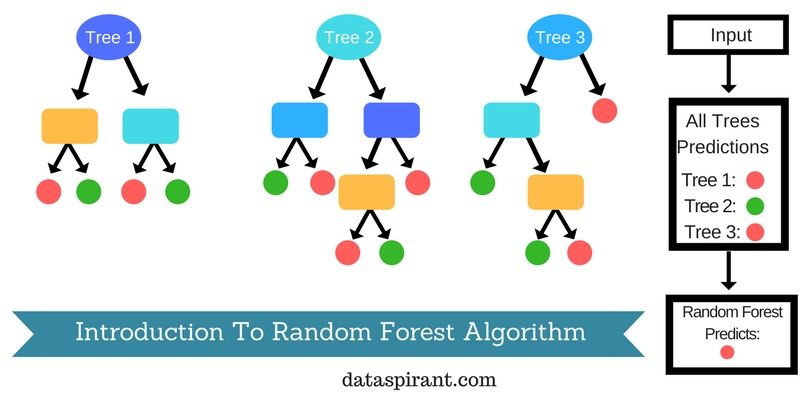

Random Forests

Random forests are essentially multiple decision trees combined to form one powerful "forest" model with better predictive accuracy than individual trees.

Each tree is trained on a random bootstrap sample of the observations (drawn with replacement), and at each node split only a random subset of the variables is considered. The trees' predictions are then combined, by majority vote for classification or by averaging for regression.
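A compact illustration of bagging plus random feature selection follows, assuming each tree is only a single split (a "stump") to keep the code short; real random forests grow much deeper trees. `n_trees`, `max_features`, the seed, the helper names and the data are hypothetical choices.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity: 0 for a pure group, higher when classes are mixed."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(rows, labels, features):
    """Best single split using only `features`; returns (feature, threshold, left_label, right_label)."""
    best = None
    for f in features:
        values = sorted(set(r[f] for r in rows))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, f, t,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # no usable split: fall back to the majority class
        majority = Counter(labels).most_common(1)[0][0]
        return (None, None, majority, majority)
    return best[1:]


def predict_stump(stump, row):
    f, t, left_label, right_label = stump
    if f is None:
        return left_label
    return left_label if row[f] <= t else right_label


def fit_forest(rows, labels, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(rows) rows with replacement.
        idx = [rng.randrange(len(rows)) for _ in rows]
        # Random subset of features that this split is allowed to consider.
        features = rng.sample(range(len(rows[0])), max_features)
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], features))
    return forest


def predict_forest(forest, row):
    """Majority vote across all trees."""
    return Counter(predict_stump(s, row) for s in forest).most_common(1)[0][0]


rows = [[1, 2], [2, 1], [3, 3], [4, 2], [6, 7], [7, 6], [8, 8], [9, 7]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict_forest(forest, [2, 2]), predict_forest(forest, [8, 8]))  # 0 1
```

Because every tree sees a different sample and a different feature, their individual mistakes tend to differ, and the vote averages them out.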

Although a forest is harder to inspect than a single decision tree, it can report feature importance scores that show which variables contribute most to its predictions.

Random forests also have high predictive power, because averaging many decorrelated trees reduces variance without significantly increasing bias compared to individual decision trees.

Because the predictions of many trees are combined, a random forest is also less prone to overfitting than a single deep tree, which tends to fit the training data too closely. In other words, it balances bias and variance well.

As a result, random forests often perform well on larger and more complex datasets, making them a strong choice for many supervised learning tasks.

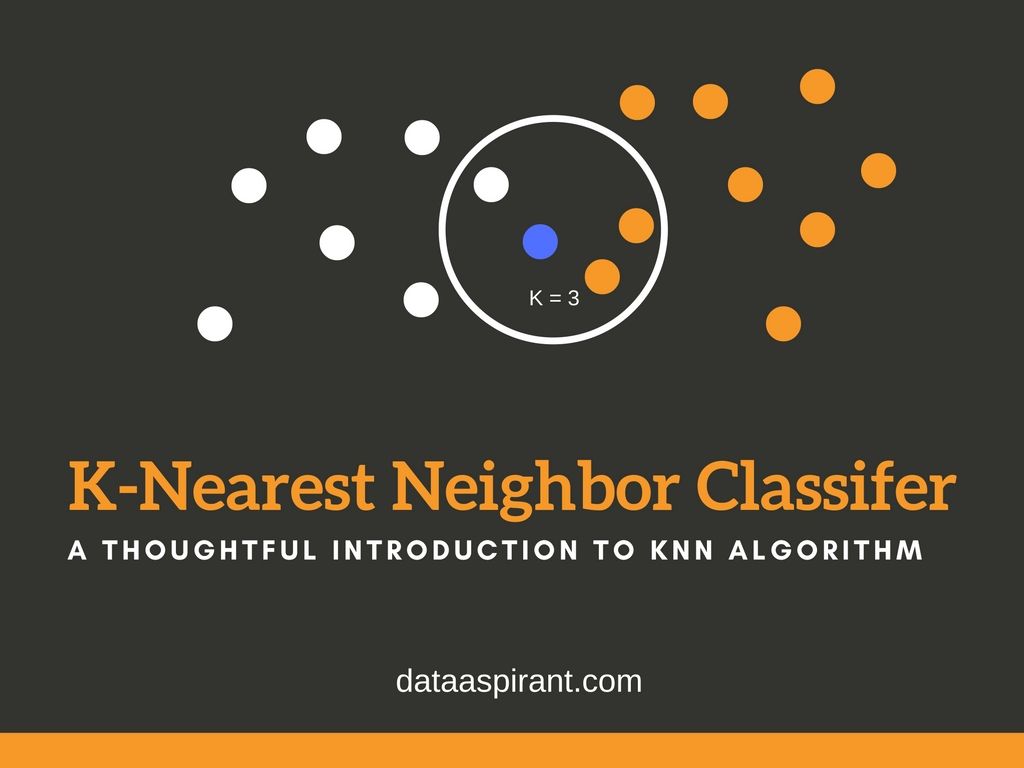

K-Nearest Neighbors (kNN)

kNN is a simple supervised learning algorithm that models the relationship between a given data point and its “nearest” neighbours.

It classifies or predicts a new data point from a chosen number (k) of ‘nearest neighbours’: the training points whose features are most similar to it.

To find the nearest neighbours, it measures the distance (for example, Euclidean distance) between the new point and each training point, then uses a majority vote among the k closest points to assign a class, or averages their values for regression.
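A minimal kNN classifier, assuming Euclidean distance and a simple majority vote; `knn_predict` and the toy points are hypothetical. It requires Python 3.8 or later for `math.dist`.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every stored training point.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D training data with two well-separated groups.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(points, labels, (1.5, 1.5)))  # red
print(knn_predict(points, labels, (6.5, 6.5)))  # blue
```

There is no training step: all the work happens at prediction time, which is what makes kNN an instance-based method.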

In that sense, it is known as an instance-based (or lazy) learning algorithm: it stores all points in the training set and uses them directly when making predictions for new input data.

This makes it particularly suitable when there is no simple decision boundary, as in non-linear problems and complex datasets. kNN is a non-parametric algorithm: it learns no fixed set of coefficients, although hyperparameters such as the number of neighbours k and the distance metric still have to be chosen, typically by validation.

Furthermore, as it makes no prior assumption about the data distribution, it is often used on small datasets, or on unstructured data given a suitable distance measure, and can provide valuable results where traditional parametric methods fail.

Therefore, beyond standard classification and regression tasks, kNN can be used for problems such as recommendation engines that rely heavily on user or item similarity.

However, because this algorithm does not build a model with coefficients describing the relationships between variables, its predictions can be hard to interpret in detail.

Despite providing good accuracy on many machine learning tasks, kNN has drawbacks: prediction becomes slow on large training sets because the distance to every stored point must be computed, and a very small k makes it prone to overfitting noise in the training data.

Naive Bayes Classifier

Naive Bayes classification is a simple yet powerful supervised learning technique for classification.

It uses Bayes' Theorem to calculate the probability that a given data point belongs to one class or another based on its input features.

This makes it well suited to applications such as:

- Text classification,

- Recommendation systems,

- Sentiment analysis,

- Image recognition

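Bayes’ theorem states that the probability of a class given the evidence (the posterior) equals the likelihood of the evidence given that class, multiplied by the prior belief in the class and divided by the overall probability of the evidence:

P(y | x) = P(x | y) * P(y) / P(x)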

A naïve Bayes classifier makes the "naive" assumption that the features are conditionally independent given the class. It therefore multiplies the individual probability of each feature given the class, weights the result by the class's prior probability, and predicts the class with the highest score. This makes it a fast and effective approach for predicting classification outcomes.
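As an illustration for text classification, here is a small multinomial naive Bayes sketch that scores each class as log P(class) plus the sum of log P(word | class). Working in log space avoids numerical underflow when many small probabilities are multiplied, and add-one (Laplace) smoothing, an assumption of this sketch, stops an unseen word from zeroing out a class. The tiny spam/ham corpus and the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word -> count
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return class_counts, word_counts, vocab


def predict_nb(model, doc, alpha=1.0):
    """Pick the class maximising log P(class) + sum of log P(word | class)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for cls, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)  # log prior
        total_words = sum(word_counts[cls].values())
        for word in doc.lower().split():
            # Add-alpha (Laplace) smoothing so an unseen word never gives probability 0.
            p = (word_counts[cls][word] + alpha) / (total_words + alpha * len(vocab))
            score += math.log(p)
        scores[cls] = score
    return max(scores, key=scores.get)


docs = ["win free money now", "free prize claim now", "meeting at noon", "lunch with the team"]
labels = ["spam", "spam", "ham", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, "free money"))    # spam
print(predict_nb(model, "team meeting"))  # ham
```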

Gaussian Naive Bayes Classifier

Gaussian Naïve Bayes is an adaptation of the regular naïve Bayes classifier that models each continuous feature with a normal, or Gaussian, distribution within each class.

Because each of these distributions is described by only two parameters, the mean and the standard deviation, the model is cheap to train and efficient to apply, making it a practical choice for many applications.

The Gaussian Naive Bayes classifier is a variant of the Naive Bayes classifier that assumes a Gaussian distribution for the continuous features.

It is called "Gaussian" because it assumes that the probability distribution of the features given the class label is Gaussian.

This means that the probability density function of each feature x given the class label y can be written as:

f(x | y) = (1 / (sqrt(2 * pi) * sigma)) * exp(-(x - mu)^2 / (2 * sigma^2))

Where

- mu is the mean of the feature for the given class label,

- sigma is the standard deviation of the feature for the given class label,

- pi is the mathematical constant.

The Gaussian Naive Bayes classifier scores each class by multiplying the class's prior probability by the probability density functions of the input features for that class, and predicts the class with the highest score.

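Mathematically, the Gaussian Naive Bayes classifier can be written as:

P(y | x) ∝ P(y) * (prod(i=1 to n) f(x_i | y))

The posterior is proportional (∝) to this product rather than equal to it, because the denominator P(x) in Bayes’ theorem is the same for every class and can be dropped when comparing classes.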

Where

- n is the number of features,

- x_i is the i-th feature,

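- prod(i=1 to n) f(x_i | y) is the product of the probability density functions of the features given the class label,

- P(y) is the prior probability of class y, usually estimated as the fraction of training samples in that class.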
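The formulas above translate directly into code. This sketch estimates a prior, mean and standard deviation per class and feature, then compares log P(y) + sum of log f(x_i | y) across classes, which ranks classes the same way as the product form but avoids numerical underflow. The small `eps` added to each standard deviation, the function names and the data are assumptions.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(rows, labels, eps=1e-9):
    """Estimate P(y), and the mean and standard deviation of every feature, for each class."""
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)
    model = {}
    for label, class_rows in by_class.items():
        n = len(class_rows)
        columns = list(zip(*class_rows))  # one tuple of values per feature
        mus = [sum(col) / n for col in columns]
        # Population standard deviation plus eps, so sigma is never exactly zero.
        sigmas = [math.sqrt(sum((v - mu) ** 2 for v in col) / n) + eps
                  for col, mu in zip(columns, mus)]
        model[label] = (n / len(rows), mus, sigmas)
    return model


def log_gaussian_pdf(x, mu, sigma):
    """log f(x | y) for f(x | y) = 1 / (sqrt(2*pi)*sigma) * exp(-(x - mu)^2 / (2*sigma^2))."""
    return -math.log(math.sqrt(2 * math.pi) * sigma) - (x - mu) ** 2 / (2 * sigma ** 2)


def predict_gaussian_nb(model, row):
    """Return the class maximising log P(y) + sum_i log f(x_i | y)."""
    def score(label):
        prior, mus, sigmas = model[label]
        return math.log(prior) + sum(
            log_gaussian_pdf(x, mu, sigma) for x, mu, sigma in zip(row, mus, sigmas)
        )
    return max(model, key=score)


rows = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [3.0, 4.0], [3.2, 4.2], [2.8, 3.8]]
labels = ["A", "A", "A", "B", "B", "B"]
model = fit_gaussian_nb(rows, labels)
print(predict_gaussian_nb(model, [1.1, 2.0]))  # A
print(predict_gaussian_nb(model, [3.1, 4.1]))  # B
```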

XGBoost Algorithm

XGBoost stands for “eXtreme Gradient Boosting” and is a robust, highly optimised machine-learning library for classification and regression, particularly on structured (tabular) data.

It implements gradient-boosted decision trees: it builds many trees in sequence, each one correcting the errors of the ensemble built so far, and combines their outputs to produce the final result. Professionals in many fields use it widely because of its speed and its performance on large datasets.

It often works well with its default settings, although tuning hyperparameters such as the learning rate, maximum tree depth and number of trees usually improves results.

The main advantages of using XGBoost come down to two factors:

- Execution Speed

- Model Performance

Execution speed is an important factor when dealing with large datasets. XGBoost parallelises tree construction and can train on data that does not fit in memory, so it can work with datasets larger than many other implementations can handle.

Model performance matters because XGBoost often achieves higher accuracy than other algorithms on tabular data, helped by built-in regularisation that limits overfitting.

XGBoost has also been one of the most popular algorithms in Kaggle competitions, where it has frequently been preferred over alternatives such as random forests (RF) and standard gradient boosting machine (GBM) implementations. This is one of the key reasons it is worth learning how the XGBoost algorithm works.

CatBoost Algorithm

CatBoost is a gradient boosting algorithm for decision trees developed by Yandex. It has applications in many industries and is used for search, recommendation systems, personal assistants, self-driving cars and weather forecasting tasks.

CatBoost works by building decision trees in succession, each trained to reduce the loss left over by the previous trees. The number of trees is set by a parameter (the number of iterations) chosen before training begins.

Gradient boosting is a machine learning technique that builds an ensemble of weak prediction models, typically decision trees, to create a strong predictor. Each new tree is trained on the errors (residuals) of the ensemble built so far.

The idea is to iteratively reduce the residual errors by adding more trees to the ensemble. CatBoost uses the same gradient boosting approach but adds features that set it apart from other boosting frameworks, such as native handling of categorical features and ordered boosting, which is designed to reduce overfitting.
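The residual-fitting loop described above can be sketched for squared-error regression on one feature, using depth-1 trees (stumps) as the weak learners. This is plain gradient boosting, not XGBoost's or CatBoost's actual algorithm (both add refinements such as regularised objectives or ordered boosting); `n_trees`, `learning_rate`, the function names and the data are hypothetical.

```python
def fit_stump(xs, residuals):
    """Best single-threshold split of a 1-D feature, fitting each side by its mean residual."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean


def fit_gradient_boosting(xs, ys, n_trees=50, learning_rate=0.3):
    """Squared-error gradient boosting: each stump is fitted to the current residuals."""
    base = sum(ys) / len(ys)  # start from a constant prediction (the mean)
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        # For squared error, the negative gradient is simply y - current prediction.
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        # Add the new tree's output, shrunk by the learning rate.
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(tree(x) for tree in trees)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = fit_gradient_boosting(xs, ys)
print(round(model(2), 3), round(model(5), 3))  # 1.0 5.0
```

The learning rate deliberately makes each tree correct only part of the remaining error, so many small steps are taken instead of one large one; this shrinkage usually generalises better.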

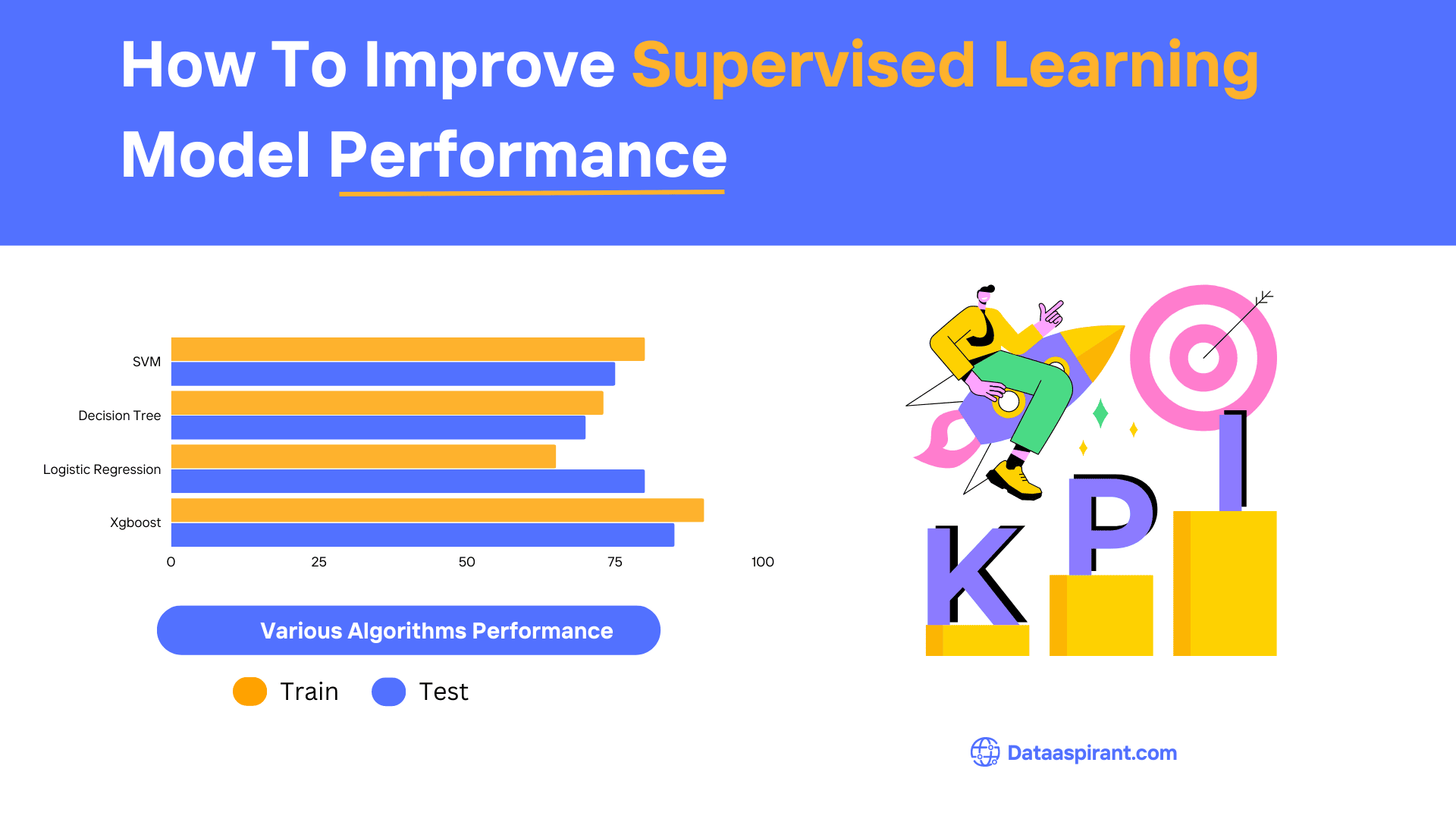

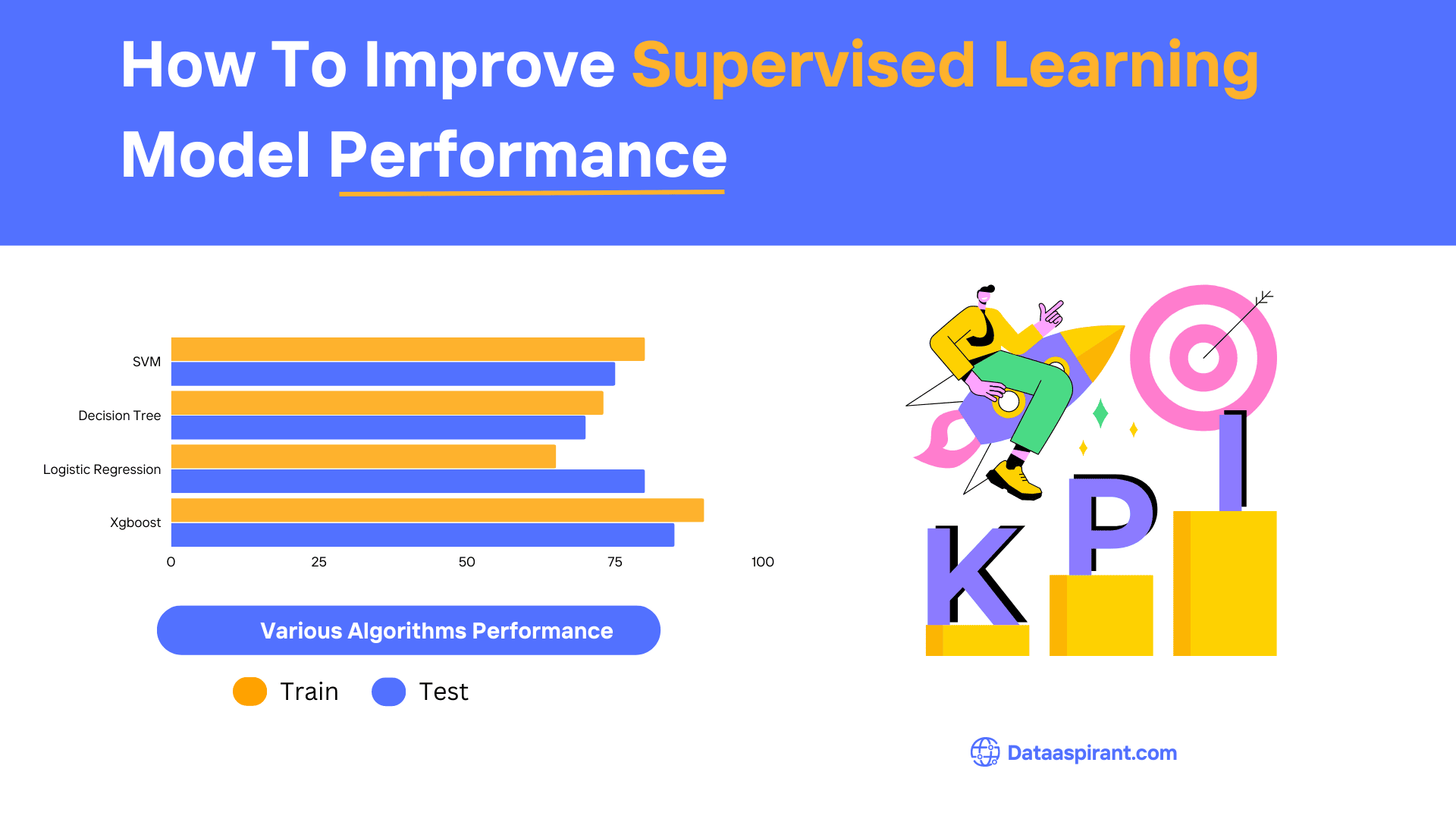

How to Improve Supervised Learning Model Performance

After building your machine learning model, it will be important to assess its performance. To do this, you'll need to evaluate the model's accuracy and determine any potential issues or areas for improvement.

A common approach is to use a holdout test data set, which consists of data that isn't used for training the model.

By assessing the model's performance against this unseen data set, you can estimate how well it will generalise and identify any weaknesses that need to be addressed.
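A holdout split takes only a few lines. This sketch shuffles indices with a fixed seed before splitting; the 25% test fraction, the helper names (`holdout_split`, `accuracy`), the toy data and the midpoint-of-class-means "model" are all hypothetical stand-ins.

```python
import random


def holdout_split(rows, labels, test_fraction=0.25, seed=0):
    """Shuffle indices with a fixed seed, then hold out the first test_fraction of them."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(rows) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    train = ([rows[i] for i in train_idx], [labels[i] for i in train_idx])
    test = ([rows[i] for i in test_idx], [labels[i] for i in test_idx])
    return train, test


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: label is 1 when x >= 10.
xs = list(range(20))
ys = [int(x >= 10) for x in xs]
(train_x, train_y), (test_x, test_y) = holdout_split(xs, ys)

# Hypothetical "model": a threshold halfway between the class means seen in training only.
mean0 = sum(x for x, y in zip(train_x, train_y) if y == 0) / train_y.count(0)
mean1 = sum(x for x, y in zip(train_x, train_y) if y == 1) / train_y.count(1)
threshold = (mean0 + mean1) / 2
predictions = [int(x >= threshold) for x in test_x]
print(len(train_x), len(test_x), accuracy(test_y, predictions))
```

The key point is that the threshold is computed from the training portion only; the test rows are used once, at the end, to score the result.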

Once any potential issues have been identified, it is then time to improve the model. One of the most effective ways to do this is to use what's known as hyperparameter optimization.

This technique involves adjusting settings that are fixed before training rather than learned from the data (e.g., the number of layers, the learning rate or the maximum tree depth) in order to get better results.
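Grid search is one simple form of hyperparameter optimization: try each candidate value, score it on a validation set, and keep the best. The sketch tunes k for a one-dimensional kNN classifier; the data (which include one deliberately mislabelled training point at x = 4) and the function names are hypothetical.

```python
from collections import Counter


def knn_predict(train_x, train_y, x, k):
    """Majority label among the k training points closest to x (1-D absolute distance)."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def grid_search_k(train_x, train_y, val_x, val_y, candidate_ks):
    """Return the candidate k with the best validation accuracy (the earliest wins ties)."""
    best_k, best_acc = None, -1.0
    for k in candidate_ks:
        preds = [knn_predict(train_x, train_y, x, k) for x in val_x]
        acc = sum(p == y for p, y in zip(preds, val_y)) / len(val_y)
        if acc > best_acc:
            best_k, best_acc = k, acc
    return best_k, best_acc


# Hypothetical data: the training point at x=4 is mislabelled as 1.
train_x = [1, 2, 3, 4, 5, 10, 11, 12, 13, 14]
train_y = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]
val_x, val_y = [3.8, 4.2, 11.5], [0, 0, 1]
print(grid_search_k(train_x, train_y, val_x, val_y, candidate_ks=[1, 3, 5]))  # (3, 1.0)
```

With k = 1 the noisy point decides the nearby validation cases; a larger k outvotes it, and the validation score reveals this without touching the test set.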

Additionally, you can experiment with data preprocessing techniques such as oversampling the minority class or undersampling the majority class, which can improve performance on imbalanced data and reduce bias toward the majority class.
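Random oversampling and undersampling can be sketched as follows, assuming simple duplication or removal of randomly chosen rows (more elaborate methods such as SMOTE synthesise new points instead). They should be applied to the training set only, so the test set still reflects the real class balance. The function names and data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate random rows of each smaller class until all classes match the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, count in counts.items():
        members = [r for r, y in zip(rows, labels) if y == cls]
        for _ in range(target - count):
            out_rows.append(rng.choice(members))
            out_labels.append(cls)
    return out_rows, out_labels


def random_undersample(rows, labels, seed=0):
    """Keep a random subset of each class, sized to match the smallest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = min(counts.values())
    out_rows, out_labels = [], []
    for cls in counts:
        members = [r for r, y in zip(rows, labels) if y == cls]
        for r in rng.sample(members, target):
            out_rows.append(r)
            out_labels.append(cls)
    return out_rows, out_labels


# Hypothetical imbalanced training set: 8 rows of class 0, 2 rows of class 1.
rows = [[i] for i in range(10)]
labels = [0] * 8 + [1] * 2
print(Counter(random_oversample(rows, labels)[1]))   # 8 of each class
print(Counter(random_undersample(rows, labels)[1]))  # 2 of each class
```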

Ultimately, it is by exploring different approaches and fine-tuning your machine learning model that you can optimize its performance for your specific use case.

Building an End-to-End Machine Learning Solution with Supervised Learning

Once the model has been trained and tested, it can be deployed as an end-to-end machine learning solution.

This is done by integrating the model into a software application so that it can make predictions based on user data.

After deployment, monitoring and optimising how it functions is essential to ensure that its performance meets expectations, especially over long periods.

Performance metrics such as accuracy and recall must also be measured against test datasets for the deployed models. This will help determine which model provides the most accurate solution for the problem at hand.

Practical Tips for Developing Supervised Machine Learning Solutions

There are a few practical tips to keep in mind when developing machine learning solutions. First, the data used to train and test the model should be reliable.

Secondly, it is important to choose the best model for the problem at hand. Lastly, when creating supervised models, it is best practice to test them extensively to ensure there are no significant issues before deploying them in production.

During development, it is important to use data that accurately reflects the environment in which the model will be used. The data should reflect the real world rather than simply reproduce existing biases.

It’s also important to choose a model that generalises, meaning that it also performs well on unseen data. When working with supervised learning models, you should run tests such as cross-validation and inference testing before deployment to make sure your model performs as expected.
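k-fold cross-validation rotates which part of the data is held out, so every example is used for testing exactly once. In this sketch the folds are contiguous, so the data should be shuffled beforehand if they are ordered; `k_fold_indices` and `k_fold_accuracy` are hypothetical helpers, and the majority-class baseline is only a stand-in model.

```python
from collections import Counter


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def k_fold_accuracy(fit, predict, rows, labels, k=5):
    """Train on k-1 folds, score on the held-out fold, and repeat for every fold."""
    scores = []
    for fold in k_fold_indices(len(rows), k):
        held_out = set(fold)
        train_rows = [r for i, r in enumerate(rows) if i not in held_out]
        train_labels = [y for i, y in enumerate(labels) if i not in held_out]
        model = fit(train_rows, train_labels)
        correct = sum(predict(model, rows[i]) == labels[i] for i in fold)
        scores.append(correct / len(fold))
    return scores


# Stand-in model: always predict the most common training label (a hypothetical baseline).
fit_majority = lambda rows, labels: Counter(labels).most_common(1)[0][0]
predict_majority = lambda model, row: model

rows = list(range(10))
labels = [0, 1, 0, 0, 1, 0, 0, 0, 1, 0]  # hypothetical, already in random order
scores = k_fold_accuracy(fit_majority, predict_majority, rows, labels, k=5)
print(scores, sum(scores) / len(scores))  # [0.5, 1.0, 0.5, 1.0, 0.5] 0.7
```

The spread of the per-fold scores is as informative as their mean: a large spread suggests the model's performance depends heavily on which data it happens to see.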

These tests help you create robust models capable of offering accurate predictions even under unforeseen circumstances.

Conclusion

Supervised Learning is a powerful approach to machine learning that has been widely used in various applications, including image recognition, natural language processing, and fraud detection. It involves training a model on labelled data to accurately predict new, unseen data.

Several algorithms can be used in this process, including

- Linear Regression,

- Logistic Regression,

- Decision Trees,

- Random Forest,

- Support Vector Machines (SVM),

- Naive Bayes

The algorithm choice depends on the problem at hand and the dataset's characteristics.

While Supervised Learning has proven to be highly effective, its success depends on the quality of the training data, the choice of algorithm and model architecture used, and the performance metrics used to evaluate the model.

Therefore, it's essential to carefully analyze the problem, select the right algorithm and model architecture, and evaluate the model's performance to obtain the best possible results.

Frequently Asked Questions (FAQs) On Supervised Learning Algorithms

1. What is Supervised Learning in Machine Learning?

Supervised learning is a type of machine learning where the algorithm is trained on labeled data. The model learns from this data to make predictions or decisions based on new, unseen data.

2. What are the 10 Most Popular Supervised Learning Algorithms?

The algorithms covered in this guide are Linear Regression, Logistic Regression, Support Vector Machines (SVM), Decision Trees, Random Forest, K-Nearest Neighbors (KNN), Naive Bayes, Gaussian Naive Bayes, XGBoost and CatBoost. Other widely used supervised algorithms include Gradient Boosting Machines (GBM), Neural Networks and AdaBoost.

3. How Does Linear Regression Work?

Linear Regression predicts a continuous output based on a linear relationship between the input variables and the output. It's often used for forecasting and finding out which factors influence outcomes.

4. What is Logistic Regression?

Logistic Regression is used for binary classification problems. It predicts the probability of occurrence of an event by fitting data to a logistic function.

5. Can You Explain Decision Trees?

Decision Trees are flowchart-like structures where each internal node represents a test on an attribute, each branch represents the outcome, and each leaf node represents a class label.

6. What is a Random Forest?

Random Forest is an ensemble learning method that operates by constructing multiple decision trees during training and outputting the class that is the mode of the classes (classification) or mean prediction (regression) of the individual trees.

7. How Do Support Vector Machines (SVM) Work?

SVMs are effective for classification and regression challenges. They work by finding the hyperplane that best divides a dataset into classes, namely the one with the largest margin between them.

8. What is Naive Bayes?

Naive Bayes classifiers are a family of simple probabilistic classifiers based on applying Bayes' theorem with strong independence assumptions between the features.

9. How is K-Nearest Neighbors (KNN) Used?

KNN is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., distance functions).

10. What are Gradient Boosting Machines (GBM)?

GBMs are a machine learning technique for regression and classification problems, which produce a prediction model in the form of an ensemble of weak prediction models, typically decision trees.

11. Can You Explain Neural Networks?

Neural Networks are a set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling, or clustering of raw input.

12. What is AdaBoost?

AdaBoost, short for Adaptive Boosting, is an ensemble method that combines multiple weak learners to create a strong learner for improving the accuracy of the model.

13. How Do You Choose the Right Supervised Learning Algorithm?

The choice depends on the size and type of data, the task to be performed (classification or regression), and the complexity of the problem. Experimentation and cross-validation are often necessary to find the best algorithm.
