Learn How Multiple Linear Regression Works In Minutes

Welcome to this comprehensive guide on multiple linear regression, a versatile and powerful technique for analyzing relationships between multiple variables.

As one of the foundational methods in data analysis and machine learning, multiple linear regression enables you to gain valuable insights from your data, make informed decisions, and drive success in various industries.

Whether you are a beginner or an experienced data enthusiast, this blog post aims to provide you with an in-depth understanding of multiple linear regression and the tools needed to apply it effectively.

We will start by exploring the basics of multiple linear regression and the assumptions underlying the model. We will then delve into the process of collecting and preparing data, building the model, and validating and optimizing its performance.


Along the way, we will examine real-world applications across different industries and provide practical examples to illustrate the concepts discussed.

Whether you're a student, a data enthusiast, or a professional looking to enhance your analytics skills, this post will serve as a valuable resource for mastering multiple linear regression quickly.

By the end of this post, you will be well-equipped to tackle your own multiple linear regression projects with confidence and skill.

So, let's dive in and start your journey towards mastering multiple linear regression!

Introduction to Multiple Linear Regression

If you're new to the machine learning field, you might have heard about various algorithms and techniques that help uncover patterns and make predictions from data. One such technique is multiple linear regression, a powerful and widely used method in data analysis.

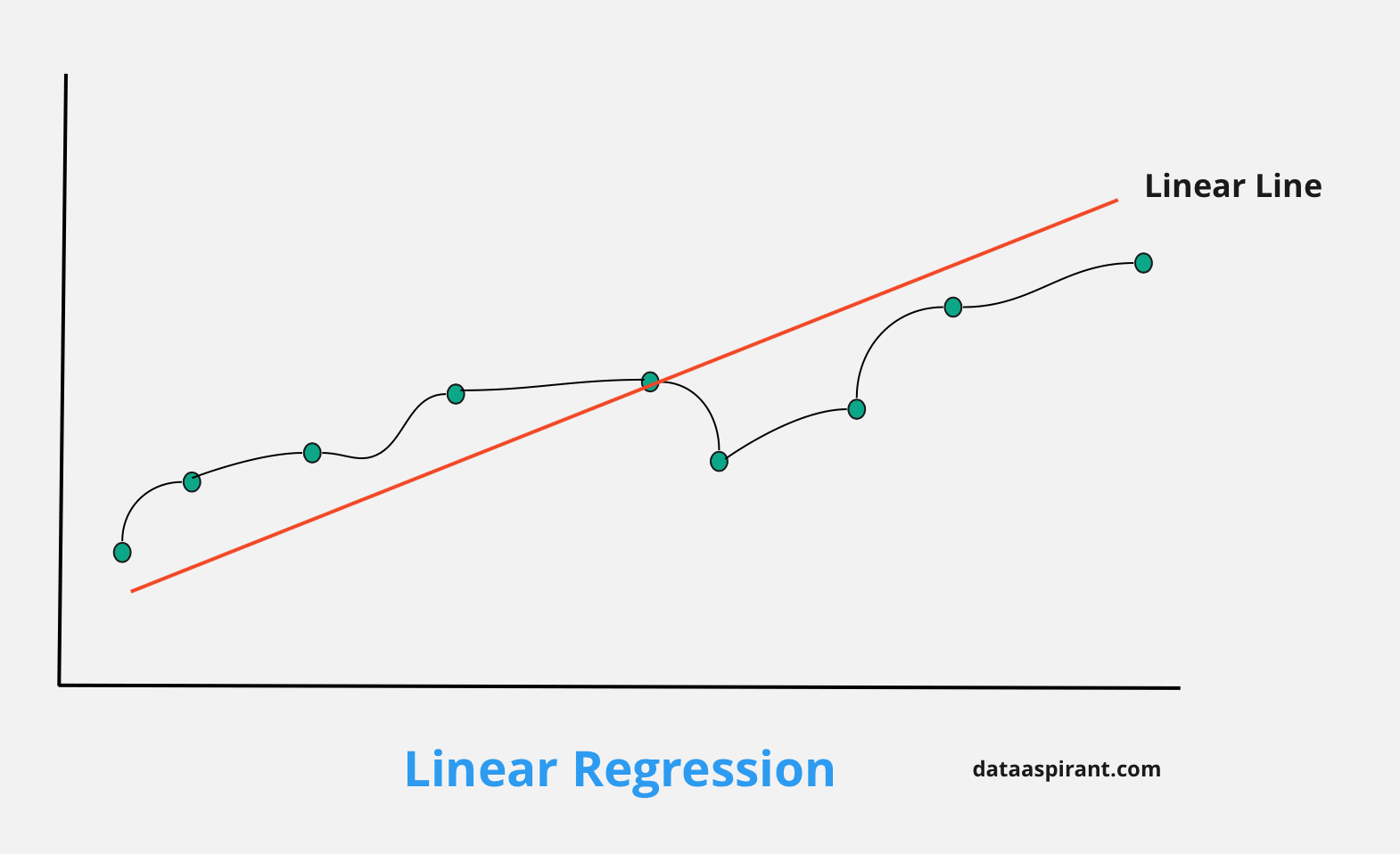

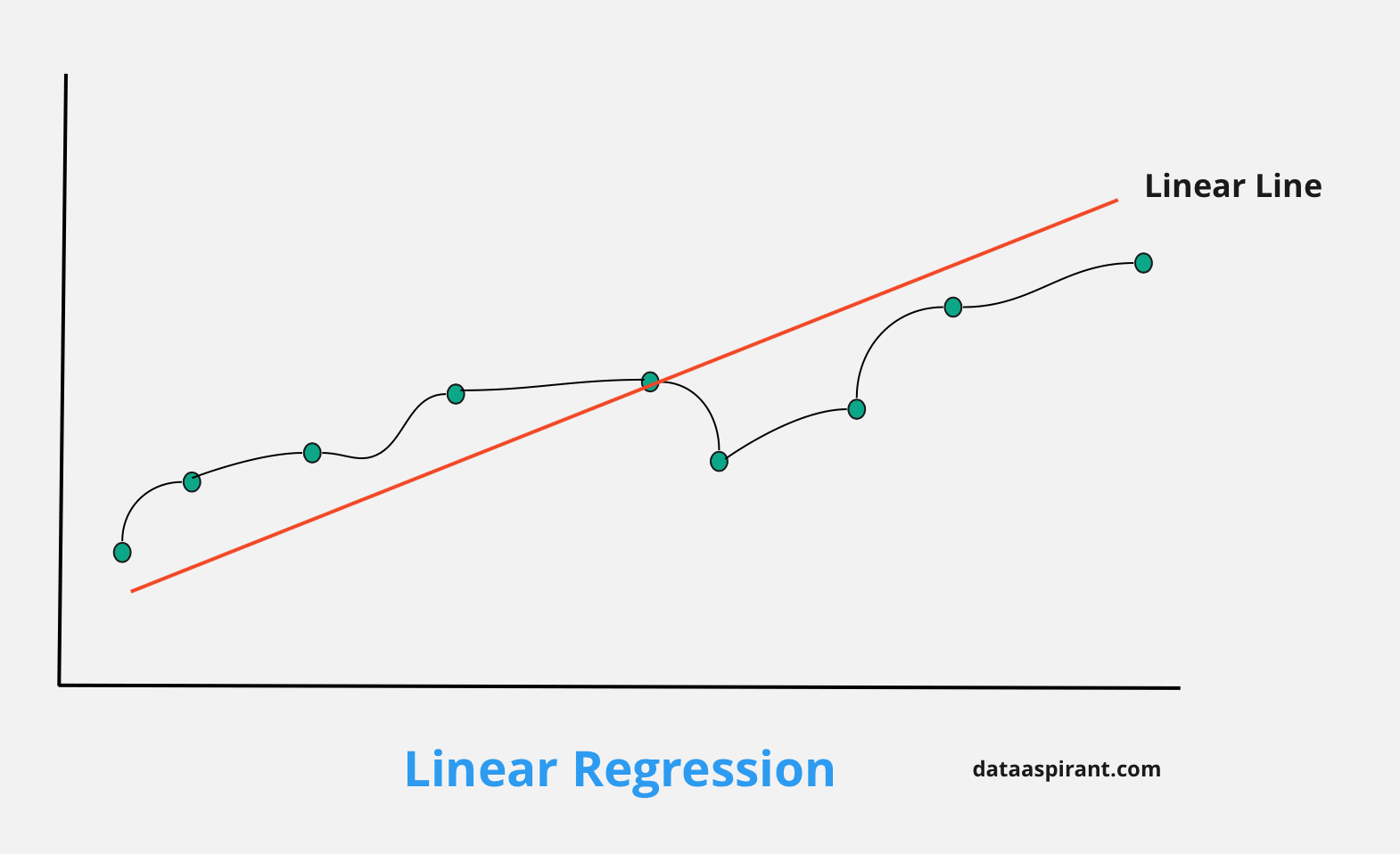

Multiple linear regression is a statistical method used to model the relationship between a dependent variable (the outcome we want to predict) and several independent variables (the predictors). It is an extension of simple linear regression.

In simple linear regression, we only have one independent variable; in multiple linear regression, we can have two or more independent variables.

The goal is to find the best-fitting line or hyperplane that can describe the relationship between the dependent and independent variables, allowing us to make predictions based on the given data.

The multiple linear regression equation looks like this:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \varepsilon$$

Here,

- Y is the dependent variable,

- X₁, X₂, ..., Xₙ are the independent variables,

- β₀ is the intercept,

- β₁, β₂, ..., βₙ are the coefficients of the independent variables,

- ε is the random error term.

Each coefficient βᵢ gives the expected change in Y for a one-unit increase in Xᵢ while all other independent variables are held constant; its sign gives the direction of the relationship.
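In practice the coefficients are estimated from data, most commonly by ordinary least squares (OLS), which picks the β values that minimize the sum of squared differences between the observed and predicted values of Y. The sketch below assumes synthetic data with made-up true coefficients; it fits a two-predictor model with NumPy's `np.linalg.lstsq` and then uses the estimates to predict Y for a new observation.

```python
import numpy as np

# Synthetic data (made up for illustration): two predictors with known coefficients.
rng = np.random.default_rng(42)
n = 200
X = rng.normal(size=(n, 2))                # columns hold X1 and X2
true_beta = np.array([1.5, 2.0, -3.0])     # beta0 (intercept), beta1, beta2
noise = rng.normal(scale=0.5, size=n)      # plays the role of the error term epsilon
y = true_beta[0] + X @ true_beta[1:] + noise

# Prepend a column of ones so that beta0 is estimated as the intercept.
X_design = np.column_stack([np.ones(n), X])

# Ordinary least squares: find the beta that minimizes the sum of squared residuals.
beta_hat, *_ = np.linalg.lstsq(X_design, y, rcond=None)
print("Estimated [beta0, beta1, beta2]:", np.round(beta_hat, 2))  # close to [1.5, 2.0, -3.0]

# Predict Y for a new observation with X1 = 1.0 and X2 = 0.5.
x_new = np.array([1.0, 1.0, 0.5])          # the leading 1.0 multiplies beta0
print("Prediction:", x_new @ beta_hat)
```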

Importance of Multiple Linear Regression In Data Analysis

Multiple linear regression is a widely used technique in data analysis for several reasons:

- Simplicity: The method is easy to understand and implement, making it accessible to beginners in machine learning and data analysis.

- Interpretability: The coefficients in the multiple linear regression model provide insights into the relationships between the dependent and independent variables. This information can be useful for decision-making and understanding the factors influencing the outcome.

- Predictive Power: Multiple linear regression can help make accurate predictions in various fields, such as finance, marketing, healthcare, and sports. It allows us to understand the impact of different factors on the outcome and use this knowledge to make data-driven decisions.

- Flexibility: The technique can handle both continuous and categorical independent variables (categorical variables must first be encoded as numbers, for example with one-hot encoding), making it suitable for various applications.

Understanding Multiple Linear Regression With Technical Terms

Before diving into implementing multiple linear regression, it's essential to grasp the fundamental concepts and assumptions underpinning this technique.

In this section, we'll explain the basics of multiple linear regression and the key assumptions that must be met for the technique to be effective.

Simple Linear Regression vs. Multiple Linear Regression

Simple linear regression and multiple linear regression are both techniques used to model the relationship between a dependent variable (the outcome we want to predict) and one or more independent variables (the predictors).

The primary difference between the two lies in the number of independent variables they can handle.

Simple linear regression models the relationship between a single independent variable and the dependent variable, whereas multiple linear regression can accommodate two or more independent variables.

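Using several predictors lets the model account for more of the factors that influence the outcome, which often makes it more accurate and informative than a single-predictor model. Interactions between predictors are not captured automatically, though; they must be added as explicit interaction terms (for example, X₁ × X₂). More predictors also do not guarantee a better model: irrelevant predictors can cause overfitting, which is why predictor selection and validation matter.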
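As a rough illustration, assuming synthetic data in which the outcome genuinely depends on two predictors, the sketch below compares a simple regression on one predictor with a multiple regression on both, scoring each with scikit-learn by R² (the share of variance explained) on held-out test data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data (illustrative): the outcome depends on two predictors.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 2))
y = 4.0 + 3.0 * X[:, 0] + 2.0 * X[:, 1] + rng.normal(scale=1.0, size=300)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Simple linear regression: only the first predictor.
simple = LinearRegression().fit(X_train[:, [0]], y_train)
# Multiple linear regression: both predictors.
multiple = LinearRegression().fit(X_train, y_train)

r2_simple = simple.score(X_test[:, [0]], y_test)
r2_multiple = multiple.score(X_test, y_test)
print(f"simple R^2 on test data:   {r2_simple:.3f}")
print(f"multiple R^2 on test data: {r2_multiple:.3f}")
```

If the second predictor were pure noise instead, the two scores would be close, and the extra predictor would add complexity without improving predictions.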

Key Terminology In Multiple Linear Regression

Dependent variable (Y): The outcome we want to predict or explain.

Independent variables (X₁, X₂, ..., Xₙ): The predictors used to explain variation in the dependent variable.

Coefficients (β): The parameters that determine the relationship between the dependent variable and the independent variables.

Intercept (β₀): The point at which the regression line or hyperplane intersects the Y-axis when all independent variables are equal to zero.

Error term (ε): The random variation in the dependent variable that is not explained by the independent variables.

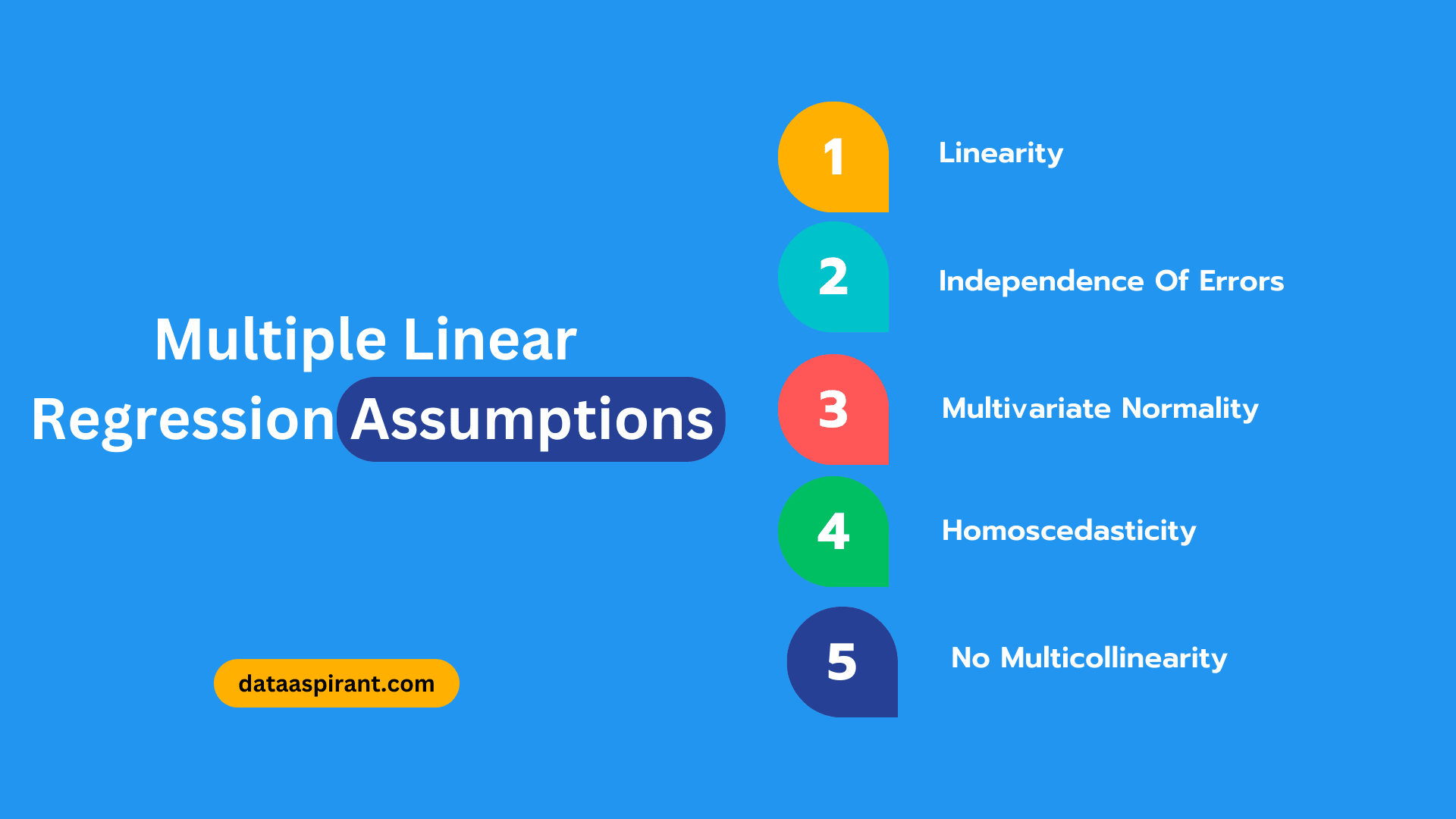

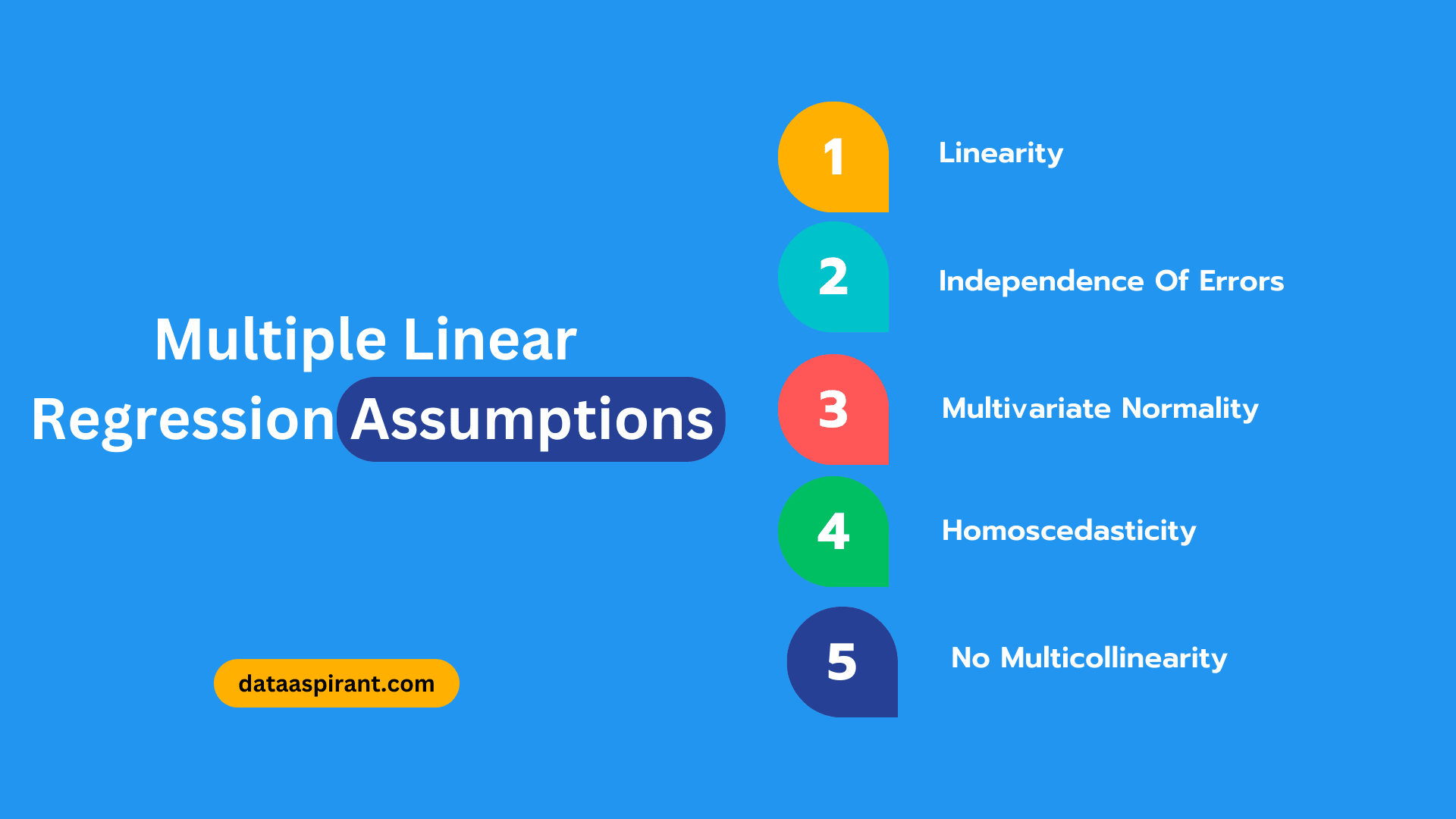

Multiple Linear Regression Assumptions

For multiple linear regression to provide accurate and reliable results, certain assumptions must be met:

- Linearity: The relationship between the dependent variable and each independent variable should be linear. This means that a one-unit change in an independent variable should produce the same expected change in the dependent variable regardless of that variable's starting value, with the other predictors held constant.

- Independence Of Errors: The error terms should be independent of each other, meaning that the error associated with one observation should not influence the error of any other observation. This assumption is often violated by time-series or grouped data; when it fails, the coefficient estimates can still be unbiased, but their standard errors, confidence intervals, and p-values become unreliable.

- Multivariate Normality: The error terms should follow a multivariate normal distribution, meaning the errors are normally distributed around the regression line or hyperplane. This assumption allows for the generation of accurate confidence intervals and hypothesis tests.

- Homoscedasticity: The error terms should have constant variance across all levels of the independent variables. This means that the spread of the errors should be consistent regardless of the values of the predictors. If this assumption is not met, it could lead to unreliable confidence intervals and hypothesis tests.

- No Multicollinearity: The independent variables should not be highly correlated with one another. High correlation among independent variables can make it difficult to determine the individual effects of each predictor on the dependent variable, leading to unreliable coefficient estimates and reduced model interpretability.

Keep these assumptions in mind as you collect data and build the model; multicollinearity in particular is revisited later, where we discuss how to detect and address it.

Collecting and Preparing Data

Collecting and preparing the data is crucial to building a robust multiple linear regression model. In this section, we'll walk you through the process of identifying variables, collecting data, and cleaning and preprocessing the data to ensure that it's ready for analysis.

Identifying the Variables

Dependent variable (target):

The dependent variable, also known as the target or response variable, is the outcome you want to predict or explain using the independent variables. You'll need to select a single dependent variable in multiple linear regression.

Examples include:

- House prices,

- Customer churn rates,

- Sales revenue.

Independent variables (predictors):

The independent variables, also called predictors or features, are used to explain the variations in the dependent variable. In multiple linear regression, you can use two or more independent variables.

When selecting independent variables, consider factors that are likely to influence the dependent variable and have a theoretical basis for inclusion in the model.

Data Collection Methods

Collecting data for multiple linear regression can be done using various methods, depending on your research question and the domain you're working in. Common data collection methods include:

- Surveys and Questionnaires: Collecting responses from individuals or organisations through structured questions.

- Observational Studies: Gathering data by observing subjects or events without any intervention.

- Experiments: Conducting controlled experiments to gather data under specific conditions.

- Existing Databases and Datasets: Using pre-existing data from sources such as government agencies, research institutions, or online repositories.

Data cleaning and preprocessing

Once you've collected the data, the next step is to clean and preprocess it to ensure it's suitable for analysis. This process includes addressing issues such as missing values, outliers, and inconsistent data formats.

- Missing Values: Missing values occur when data points were never recorded or are incomplete. Depending on the nature and extent of the missing data, you can impute them using methods such as mean, median, or mode imputation, or remove the observations with missing values altogether.

- Outliers: Outliers are data points significantly different from most of the data. Outliers can considerably impact the multiple linear regression model, so it's essential to identify and handle them appropriately. You can use visualisation techniques, such as box plots or scatter plots, and statistical methods, such as the Z-score or IQR method, to detect outliers. Depending on the context, you can either remove the outliers or transform the data to reduce their impact.

- Feature Scaling: Feature scaling is the process of standardizing or normalizing the independent variables so that they share a common scale. For ordinary least squares it does not change the model's predictions or fit (the coefficients simply rescale with the units), but it matters when you use regularized variants such as ridge or lasso regression, when you fit with gradient-based solvers, and when you want to compare coefficient magnitudes directly. Common scaling techniques include min-max normalization and standardization (Z-score scaling).

- Encoding Categorical Variables: Multiple linear regression requires that all independent variables be numerical. If your dataset includes categorical variables (e.g., gender, color, or region), you must convert them into numerical values. One common method is one-hot encoding, which creates a binary (0 or 1) feature for each category of the variable. In a model with an intercept, one category is usually dropped and serves as the reference level; keeping all of them produces perfect multicollinearity (the so-called dummy variable trap).
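These steps can be chained together. The sketch below assumes pandas and scikit-learn and uses a small, made-up housing table (the column names `sqft`, `bedrooms`, `region`, and `price` are hypothetical). It drops `sqft` outliers with the IQR rule, then uses a scikit-learn `Pipeline` to impute missing numeric values with the median, standardize them, and one-hot encode the categorical column before fitting `LinearRegression`.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical housing data: column names and values are made up for illustration.
df = pd.DataFrame({
    "sqft":     [850, 1200, np.nan, 1500, 980, 2100, 1300, 40000],
    "bedrooms": [2, 3, 3, np.nan, 2, 4, 3, 3],
    "region":   ["north", "south", "south", "east", "north", "east", "south", "north"],
    "price":    [150, 210, 205, 260, 165, 340, 230, 240],
})

# Outliers (IQR method): keep sqft values within 1.5 * IQR of the quartiles.
q1, q3 = df["sqft"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = df["sqft"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
df = df[in_range | df["sqft"].isna()]   # keep rows with missing sqft for imputation

X = df.drop(columns="price")
y = df["price"]

preprocess = ColumnTransformer([
    # Numeric columns: median imputation, then standardization (Z-score scaling).
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]), ["sqft", "bedrooms"]),
    # Categorical column: one-hot encoding; drop="first" removes one reference
    # category to avoid the dummy variable trap in a model with an intercept.
    ("cat", OneHotEncoder(drop="first"), ["region"]),
])

model = Pipeline([("preprocess", preprocess), ("regress", LinearRegression())])
model.fit(X, y)
print("Coefficients:", model.named_steps["regress"].coef_)
```

Wrapping preprocessing in a pipeline means the imputation and scaling statistics are learned only from the data passed to `fit`, which helps avoid leaking information from test data once you split or cross-validate. The scaling step is optional for plain least squares; it is included so the same pipeline works if you later swap in ridge or lasso.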

After completing these preprocessing steps, your data should be ready for building a multiple linear regression model.

Now let's discuss the process of model building, validation, and optimization.

Building the Multiple Linear Regression Model With Scikit-Learn

Now that you have a clean and preprocessed dataset, it's time to build the multiple linear regression model. In this section, we'll guide you through selecting the right predictors, implementing the model in Python, and interpreting the results.

Selecting the Right Predictors For Multiple Linear Regression Model

Choosing the most relevant and significant predictors is essential for building an accurate and interpretable multiple linear regression model. Here are three popular techniques for predictor selection:

- Forward Selection: This method starts with an empty model and iteratively adds predictors one at a time based on their contribution to the model's performance. The process continues until no significant improvement in model performance is observed.

- Backward Elimination: This method starts with a model that includes all potential predictors and iteratively removes the least significant predictor one at a time. The process continues until removing any more predictors results in a significant decrease in model performance.

- Stepwise Regression: This method combines forward selection and backward elimination. It starts with an empty model and adds predictors one at a time, re-evaluating the model at each step; a predictor added earlier may be removed if, after other predictors enter, it no longer improves the model. The process continues until no predictor can be added or removed without significantly affecting model performance.
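Scikit-Learn's `SequentialFeatureSelector` implements greedy forward selection and backward elimination. Note that it chooses predictors by cross-validated score rather than by p-values, and it does not offer bidirectional stepwise regression. The sketch below assumes synthetic data in which only three of six candidate predictors (columns 0, 2, and 4) actually affect the outcome, and asks each direction to keep three predictors.

```python
import numpy as np
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LinearRegression

# Synthetic data (illustrative): six candidate predictors, only columns 0, 2 and 4 matter.
rng = np.random.default_rng(7)
X = rng.normal(size=(300, 6))
y = 1.0 + 3.0 * X[:, 0] - 2.0 * X[:, 2] + 1.5 * X[:, 4] + rng.normal(scale=1.0, size=300)

selected = {}
for direction in ("forward", "backward"):
    selector = SequentialFeatureSelector(
        LinearRegression(),
        n_features_to_select=3,   # stop once three predictors remain selected
        direction=direction,      # "forward" adds predictors, "backward" removes them
        scoring="r2",
        cv=5,                     # each candidate set is scored with 5-fold cross-validation
    )
    selector.fit(X, y)
    selected[direction] = np.flatnonzero(selector.get_support()).tolist()

print(selected)
```

Here `n_features_to_select` is fixed for simplicity; in practice you would choose it based on validation performance.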

Implementing Multiple Linear Regression In Python

Using Scikit-Learn library

Python's Scikit-Learn library is popular for implementing machine learning algorithms, including multiple linear regression. The library provides user-friendly functions for model building, evaluation, and optimization.

Code walkthrough

Here's a simple example of how to implement multiple linear regression using Scikit-Learn:
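A minimal sketch, assuming synthetic data generated with NumPy (three predictors with made-up true coefficients): it holds out part of the data for testing, fits `LinearRegression`, and reads off the fitted intercept (`intercept_`, the estimate of β₀), the coefficients (`coef_`, the estimates of β₁ to β₃), and R² on the test set.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data (illustrative): 500 observations, three predictors.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(500, 3))
true_coefs = np.array([2.5, -1.0, 0.5])
y = 4.0 + X @ true_coefs + rng.normal(scale=2.0, size=500)

# Hold out 20% of the data to evaluate the model on unseen observations.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit the model by ordinary least squares.
model = LinearRegression()
model.fit(X_train, y_train)

print("Intercept (beta0):", round(model.intercept_, 3))
print("Coefficients (beta1..beta3):", np.round(model.coef_, 3))
print("R^2 on test data:", round(model.score(X_test, y_test), 3))

# Predict the outcome for a new observation.
new_obs = np.array([[5.0, 2.0, 8.0]])
print("Prediction:", model.predict(new_obs))
```

With real data, replace `X` with your preprocessed predictor matrix (one column per independent variable) and `y` with the dependent variable.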

Multiple Linear Regression Model Diagnostics & Interpretation

After building the multiple linear regression model, evaluating its performance and interpreting the results is essential.

- R-squared and adjusted R-squared: R-squared measures the proportion of the variation in the dependent variable that the model explains. For a model with an intercept, evaluated on the data it was fitted to, it ranges from 0 to 1, with higher values indicating a better fit (on new data it can even be negative). Because R-squared never decreases when predictors are added, adjusted R-squared penalizes the number of predictors in the model, which makes it more useful for comparing models with different numbers of predictors.

- Interpretation of Coefficients: Each coefficient represents the average change in the dependent variable for a one-unit increase in the corresponding independent variable, holding all other predictors constant. Positive coefficients indicate a positive relationship, while negative coefficients indicate an inverse relationship. Because a coefficient's magnitude depends on the units of its predictor, compare magnitudes across predictors only after putting them on a common scale (for example, by standardizing them).

- Significance of Predictors: The significance of each predictor is typically assessed with a t-test on its coefficient, while an F-test checks whether the predictors are jointly significant. A predictor is considered statistically significant if its p-value is below a predetermined threshold, usually 0.05.

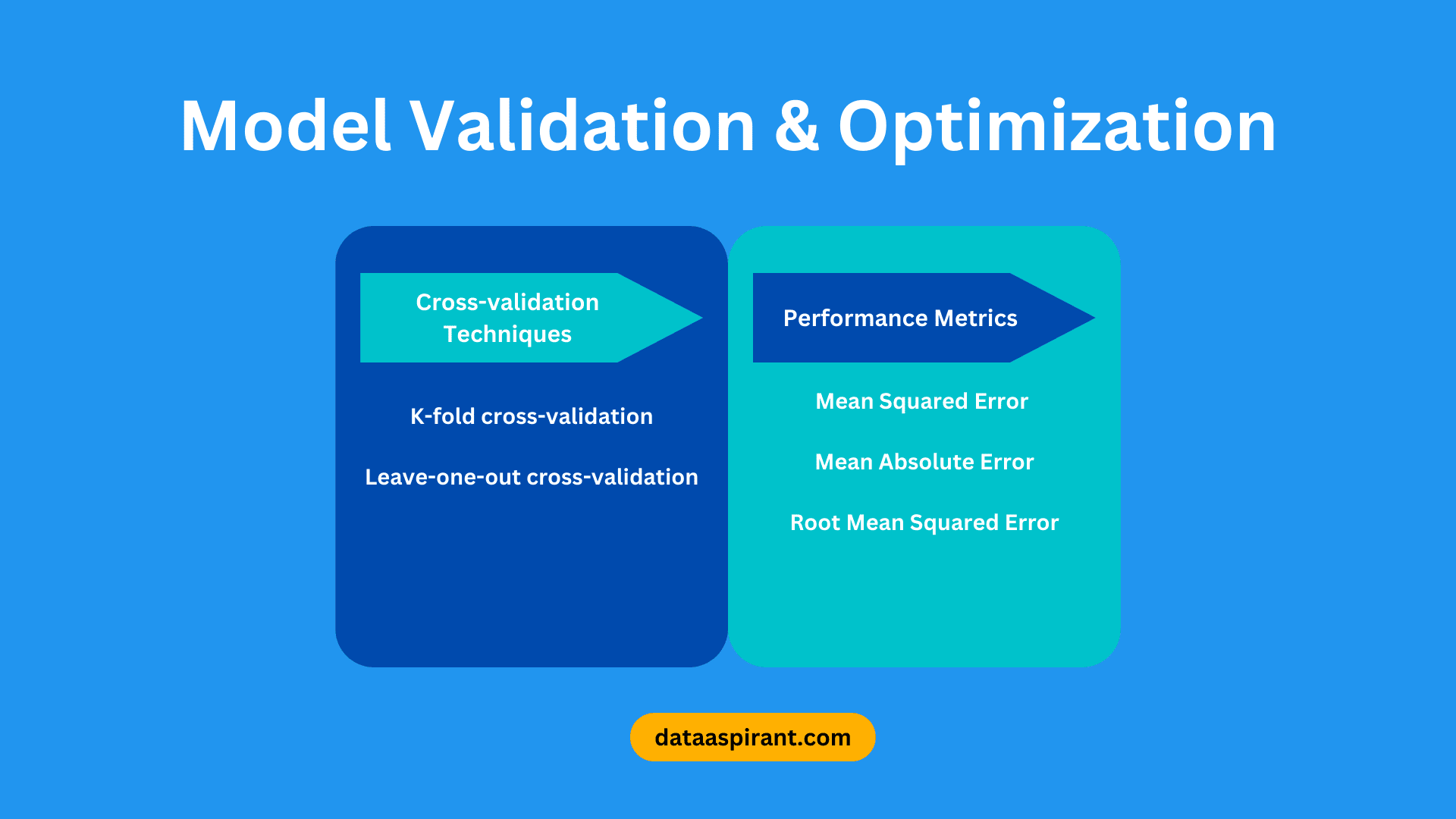

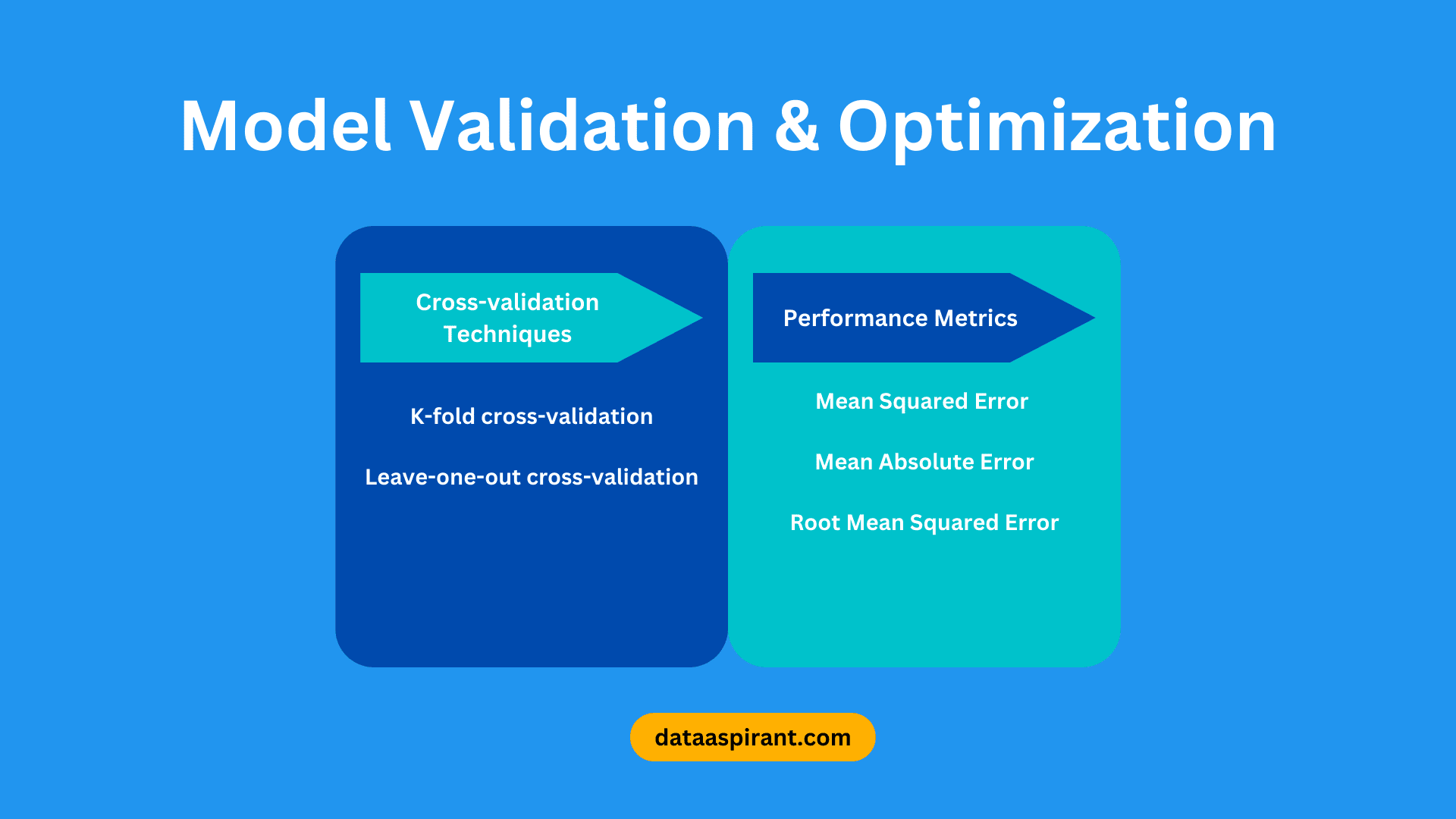

Multiple Linear Regression Model Validation and Optimization

After building the multiple linear regression model, validating and optimising its performance is essential. This section will discuss cross-validation techniques, performance metrics, and identifying and addressing multicollinearity.

Cross-validation Techniques

Cross-validation is a technique used to assess the performance of a model on unseen data. It involves dividing the dataset into multiple subsets, training the model on some of these subsets, and testing the model on the remaining subsets. Common cross-validation techniques include:

- K-fold Cross-Validation: In k-fold cross-validation, the dataset is divided into k equal-sized folds. The model is trained on k-1 folds and tested on the remaining fold. This process is repeated k times, with each fold serving as the test set once. The performance of the model is assessed based on the average performance across all k iterations.

- Leave-One-Out Cross-Validation: This method is a special case of k-fold cross-validation where k equals the number of observations in the dataset. In leave-one-out cross-validation, the model is trained on all observations except one, which serves as the test set. This process is repeated for each observation in the dataset. The performance of the model is assessed based on the average performance across all iterations.
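Both techniques are available in Scikit-Learn through `cross_val_score` with a `KFold` or `LeaveOneOut` splitter. The sketch below assumes synthetic data. R² cannot be computed on a single test observation, so the leave-one-out run is scored with mean squared error instead (Scikit-Learn reports it negated so that higher scores are always better).

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, LeaveOneOut, cross_val_score

# Synthetic data (illustrative).
rng = np.random.default_rng(5)
X = rng.normal(size=(100, 3))
y = 0.5 + X @ np.array([1.2, -0.8, 2.0]) + rng.normal(scale=1.0, size=100)

model = LinearRegression()

# 5-fold CV: shuffle once, then each fold serves as the test set exactly once.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
kfold_r2 = cross_val_score(model, X, y, cv=kfold, scoring="r2")
print("5-fold R^2 per fold:", np.round(kfold_r2, 3))
print("Mean 5-fold R^2:", round(float(kfold_r2.mean()), 3))

# Leave-one-out CV: 100 fits, each tested on a single held-out observation.
loo_scores = cross_val_score(model, X, y, cv=LeaveOneOut(), scoring="neg_mean_squared_error")
print("LOOCV MSE:", round(float(-loo_scores.mean()), 3))
```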

Performance Metrics

Several metrics can be used to evaluate the performance of a multiple linear regression model. These metrics quantify the difference between the predicted values and the actual values of the dependent variable. Common performance metrics include:

- Mean Squared Error (MSE): The MSE is the average of the squared differences between the predicted and actual values. It emphasizes larger errors and is sensitive to outliers.

- Mean Absolute Error (MAE): The MAE is the average of the absolute differences between the predicted and actual values. It is less sensitive to outliers than the MSE.

- Root Mean Squared Error (RMSE): The RMSE is the square root of the MSE. It is expressed in the same units as the dependent variable, making it easier to interpret.
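A small hand-checkable example of the three metrics using `sklearn.metrics`, with made-up actual and predicted values. RMSE is taken as the square root of MSE with NumPy, which works across Scikit-Learn versions.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Made-up actual and predicted values.
y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 3.0, 8.0])

mse = mean_squared_error(y_true, y_pred)    # (0.25 + 0 + 0.25 + 1.0) / 4
mae = mean_absolute_error(y_true, y_pred)   # (0.5 + 0 + 0.5 + 1.0) / 4
rmse = np.sqrt(mse)                         # back in the units of y

print(mse, mae, round(rmse, 4))  # 0.375 0.5 0.6124
```

The single error of 1.0 contributes two-thirds of the MSE but only half of the MAE, which illustrates why MSE reacts more strongly to large errors.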

Identifying and Addressing Multicollinearity

Multicollinearity occurs when independent variables in a multiple linear regression model are highly correlated. It can lead to unstable coefficient estimates and reduced interpretability. To detect and address multicollinearity, consider the following steps:

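- Variance inflation factor (VIF): The VIF measures how much a coefficient's variance is inflated due to multicollinearity. For predictor j it equals 1/(1 − Rⱼ²), where Rⱼ² comes from regressing that predictor on all the other predictors. A VIF greater than 10 is often considered indicative of serious multicollinearity (some analysts use 5 as the cutoff). Statsmodels provides a `variance_inflation_factor` function; Scikit-Learn has no built-in VIF function, but you can compute it from the formula above using its `LinearRegression`.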

- Remedial measures: If multicollinearity is detected, consider the following remedial measures:

- Remove one of the correlated predictors: If two or more predictors are highly correlated, consider removing one of them to reduce multicollinearity.

- Combine correlated predictors: If correlated predictors represent similar information, consider combining them into a single predictor, for example with principal component analysis (PCA) or by averaging their standardized values into a composite score.

- Regularization techniques: Regularization methods, such as ridge regression or lasso regression, can help address multicollinearity by adding a penalty on the size of the coefficients to the least-squares objective. Ridge shrinks the coefficients of correlated predictors toward each other, while lasso tends to keep one of them and set the others to zero.

By validating and optimizing your multiple linear regression model, you can ensure that it generalizes well to new data and provides accurate and reliable predictions.

Real-World Applications of Multiple Linear Regression

Multiple linear regression is widely used across various industries due to its versatility and ability to model relationships between multiple variables.

Examples of Using Multiple Linear Regression In Various Industries

- Finance: In the finance industry, multiple linear regression is used to predict stock prices, assess investment risks, and estimate the impact of various factors, such as interest rates, inflation, and economic indicators, on financial assets.

- Healthcare: Multiple linear regression is employed in healthcare to identify risk factors for diseases, predict patient outcomes, and evaluate the effectiveness of treatments. For example, it can be used to model how a patient's age, weight, and blood pressure relate to a continuous risk score for a specific medical condition; for a yes/no outcome, such as whether the condition develops, logistic regression is usually more appropriate.

- Marketing: In marketing, multiple linear regression is used to analyze customer behaviour and predict sales. It can help businesses understand the impact of different marketing strategies on sales revenue, such as advertising, pricing, and promotions.

- Sports: Multiple linear regression is also used in sports analytics to predict player performance, evaluate team strategies, and determine game outcome factors. For example, it can be employed to predict a basketball player's points scored based on their shooting percentage, minutes played, and other relevant statistics.

Case Studies Where Multiple Linear Regression Is Used

- Housing Price Prediction: A real estate company might use multiple linear regression to predict housing prices based on features such as square footage, the number of bedrooms and bathrooms, the age of the house, and location. This information can help buyers and sellers make informed decisions and assist the company in setting competitive prices for their listings.

- Customer Churn Prediction: A telecommunications company can use multiple linear regression to predict churn rates (for example, by customer segment) based on factors such as customer demographics, usage patterns, and customer service interactions. Predicting whether an individual customer will leave is a yes/no outcome, for which logistic regression is the usual choice. By identifying customers and segments at higher risk of leaving, the company can take proactive measures to retain them, such as offering targeted promotions or improving customer support.

- Demand Forecasting: A retail company can use multiple linear regression to forecast product demand based on factors like seasonality, economic conditions, and promotional activities. Accurate demand forecasting helps businesses manage inventory levels, optimize supply chain operations, and plan marketing campaigns effectively.

- Predicting Academic Performance: Educational institutions can use multiple linear regression to predict students' academic performance based on factors such as previous grades, attendance, and socio-economic background. This information can help educators identify students who may need additional support and develop targeted interventions to improve academic outcomes.

These examples and case studies demonstrate the broad applicability of multiple linear regression. Mastering this technique can help you uncover valuable insights and make data-driven decisions across many domains.

Conclusion

As we reach the end of this blog post, let's recap the key points and emphasize the importance of continuous learning and skill development.

We encourage you to apply multiple linear regression in real-life projects and harness its full potential.

Recap of key points

- Multiple linear regression is an extension of simple linear regression, allowing for the analysis of relationships between one dependent variable and multiple independent variables.

- Assumptions of multiple linear regression include linearity, independence of errors, multivariate normality, homoscedasticity, and no multicollinearity.

- Data collection, cleaning, and preprocessing are crucial steps in preparing for multiple linear regression analysis.

- Building the model involves selecting the right predictors, implementing the model in Python using libraries like Scikit-Learn, and interpreting the results.

- Model validation and optimization include cross-validation techniques, performance metrics, and addressing multicollinearity.

- Multiple linear regression has diverse real-world applications across various industries, such as finance, healthcare, marketing, and sports.

As you gain experience with multiple linear regression, consider exploring more advanced topics, such as regularization techniques, nonlinear regression, and other machine learning algorithms.

Engaging with the data science community, attending workshops, and participating in online courses can help you further develop your skills and stay ahead in the field.

Now that you have a solid understanding of multiple linear regression, we encourage you to apply this powerful technique to real-life projects. Working with real-world data and solving practical problems will give you invaluable hands-on experience and deepen your understanding of multiple linear regression.

Additionally, incorporating this skill into your projects can lead to valuable insights and data-driven decision-making, ultimately enhancing your professional and personal endeavours.
