Four Popular Hyperparameter Tuning Methods With Keras Tuner


The difference between successful people and less successful people is their dedication to their ideas. The same holds for building a highly accurate model, where finding the best hyperparameters with a tuning package such as Keras Tuner changes everything.

To give you a real-life example: when I started building models for online competition sites like Kaggle, I used to build various models with the default parameters.

If I got low performance scores, I would change the algorithm itself. In the end, I was never able to reach a top rank on the leaderboard.

For example, if the decision tree algorithm did not give the best score, I would switch to the random forest algorithm.

After many such iterations, I asked myself: when implementations of all the machine learning and deep learning algorithms are available online, why do only a few people rank high on the leaderboard?

The reason is straightforward: they know the best parameters to use to build the model for the given dataset.

How can we get the best parameters then?

For that, we need to perform hyperparameter tuning.


This means that if you know the best parameters to use to build a model for a given dataset, you will be king of the data science kingdom.

Just kidding 🙂

But you can achieve decent ranks in online competitions, build accurate, high-performing models, and crack data scientist interviews more easily.

So now the question is,

How to perform hyperparameter tuning?

That’s what we are going to discuss in this article. We will learn how to perform hyperparameter tuning efficiently with the Keras Tuner library.

Before we dive further, here is what you are going to learn in this article: what hyperparameters are, why tuning them matters, the popular tuning methods, and how to apply them with Keras Tuner. Only if you read the complete article, though 🙂

Questions While Building Machine Learning or Deep Learning Models?


Some of the most important questions we need to ask ourselves when building any machine learning, deep learning, or computer vision model are:

- What depth of the tree is good for the decision tree?

- What is the best criterion for choosing the root split in a random forest model?

- How many hidden layers do we have to add to our neural network model?

- How many neurons do we need to use in the RNN hidden layer?

- Which activation functions will give better results for models built on the provided data?

If you observe carefully, all these questions have the same pattern.

Could you guess what it is?

The pattern is that none of these questions has one specific answer. The answer depends entirely on the data we are using.

This means the answers vary from problem to problem, because each problem comes with a different dataset.

In simple words, we need to know which hyperparameter values to select to get higher model performance.

What Are Hyperparameters?

When they first start implementing machine learning (ML) or deep learning (DL) models, people tend to get confused about selecting hyperparameters for their models.

What are these hyperparameters?

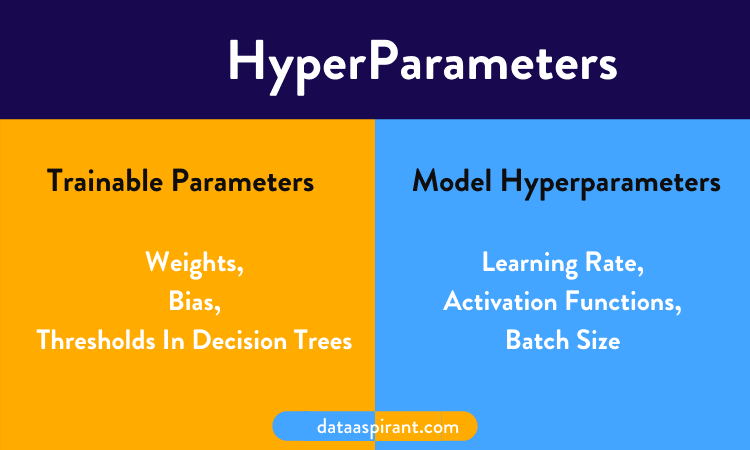

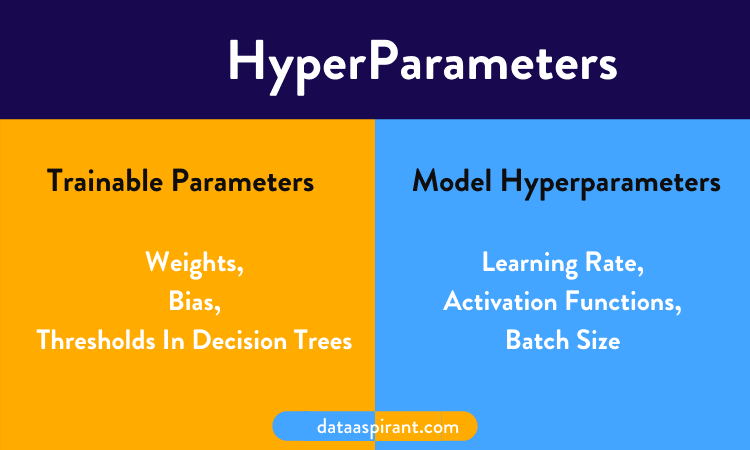

Hyper Parameters

Every machine learning or deep learning algorithm has two kinds of parameters.

- Trainable Parameters

- Model Hyperparameters

Trainable Parameters

Trainable parameters are the configuration variables learned by the algorithm during the model's training. These values are learned from the training data and cannot be set manually.

For example, the weights and biases in neural networks are trainable model parameters.

Model Hyperparameters

Model hyperparameters are configuration external to the model, which we have to provide before training starts.

These values are not estimated from the training data, but they are used while estimating the model's trainable parameters.

For example, while building a random forest model, we need to define how many decision trees to use for model training.

When we build neural networks, we need to determine how many hidden layers will give better performance after training the model by optimizing the loss function.

There are many hyperparameters like this. A few well-known hyperparameters related to neural networks are:

- Number of hidden layers and number of units in each hidden layer

- Dropout

- Initialization of Network weights

- Activation functions

- Learning rate

- Number of epochs

- Batch size, etc.
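To make the distinction between the two kinds of parameters concrete, here is a minimal sketch in plain Python. It fits a straight line with gradient descent on made-up data. The names `LEARNING_RATE`, `EPOCHS` and `train_linear_model` are only illustrative: the first two are hyperparameters that we pick by hand, while `w` and `b` are trainable parameters that the loop learns from the data.

```python
# Hyperparameters: chosen by us before training starts.
LEARNING_RATE = 0.05
EPOCHS = 500


def train_linear_model(xs, ys, learning_rate, epochs):
    """Fit y = w * x + b with plain gradient descent on mean squared error."""
    # Trainable parameters: start at zero and are learned from the data.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (made up for this illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = train_linear_model(xs, ys, LEARNING_RATE, EPOCHS)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Changing `LEARNING_RATE` or `EPOCHS` changes how well `w` and `b` are learned, which is exactly why hyperparameters are worth tuning.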

What Is Hyperparameter Tuning?

We don’t know in advance the most suitable values of these model hyperparameters for our problem statement.

We may take hyperparameter values from other problem statements that others have already solved with high performance. Otherwise, we can search for the best values by trial and error.

Hyperparameter tuning is the process of searching for the hyperparameter values that give the best-performing model.

By now, you know what hyperparameters and hyperparameter tuning are. In the next section, we will discuss why hyperparameter tuning is essential for model building.

Importance Of Hyperparameter Tuning

Tuning the model hyperparameters is essential because hyperparameters directly control how ML/DL models behave during training. They also have a significant impact on how accurately the trained model predicts results on unseen data.

In other words, tuning helps in building high-performance models, as measured by popular evaluation metrics.

The right choice of hyperparameter values makes the trained model shine.

Nowadays, artificial intelligence and its subfields, such as machine learning, deep learning, natural language processing, and computer vision, are providing reliable and accurate results in many domains.

These solutions simplify traditional processes in many industries. But building these models requires substantial resources: it takes storage space and time, and it is computationally expensive.

So finding good hyperparameter values efficiently matters for saving our resources.

Choosing the most suitable hyperparameters for our ML/DL problem statement is a critical step in building an effective and accurate model. The performance of most ML/DL models depends on their hyperparameter values.

To get good results from machine learning or deep learning algorithms, you should pay close attention to tuning the model hyperparameters.

Hyperparameter tuning requires a search space: the hyperparameters we choose to tune and the range or list of values allowed for each. The tuner searches this space for the best combination.

This process becomes computationally expensive as the number of hyperparameters grows.

So while selecting hyperparameters, we always need to keep in mind which hyperparameters actually have an impact on the model’s performance.
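The growth of the search space is easy to see with a little arithmetic. The sketch below uses a hypothetical search space (the hyperparameter names and candidate values are made up for illustration) and counts how many configurations a full search would have to train as each hyperparameter is added.

```python
from math import prod

# Hypothetical search space: each hyperparameter with its candidate values.
search_space = {
    "num_layers": [1, 2, 3],
    "units": [32, 64, 128, 256],
    "dropout": [0.0, 0.2, 0.5],
    "learning_rate": [1e-2, 1e-3, 1e-4],
}


def count_combinations(space):
    """Number of distinct configurations a full search would have to train."""
    return prod(len(values) for values in space.values())


# Show how the count multiplies as each hyperparameter is added.
running_total = 1
for name, values in search_space.items():
    running_total *= len(values)
    print(f"after adding {name!r}: {running_total} combinations")

print("total:", count_combinations(search_space))
```

With these made-up values the full space already holds 3 x 4 x 3 x 3 = 108 configurations, and every extra hyperparameter multiplies that number again.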

A few areas where hyperparameter tuning can improve a model:

- Improve accuracy on the test/validation dataset

- Reduce the number of model parameters

- Reduce the number of hidden layers

- Faster inference speed

Popular Hyperparameter Tuning Methods

Machine learning or deep learning model tuning is a kind of optimization problem. We have different types of hyperparameters for each model.

Our goal here is to find the best combination of those hyperparameter values. These values can help to minimize model loss or maximize the model accuracy values.

There are several hyperparameter tuning techniques available to us. In this section, we will discuss a few of them.

Manual search

In manual search, we choose a few model hyperparameters and their values based on experience or a random guess.

We build a model with the guessed hyperparameter values and then check its performance: loss, accuracy, or any other performance metric. We repeat this process until we are satisfied with the model's performance.

Grid search

This is a very traditional technique for hyperparameter tuning. In grid search, we set up a grid of specific hyperparameter values and then train and test our model on every combination of those values.
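The idea can be sketched in a few lines of plain Python. The `validation_loss` function below is a made-up stand-in for "train a model and return its validation loss", and the grid values are hypothetical; a real run would train a model inside the loop instead.

```python
from itertools import product


def validation_loss(learning_rate, units):
    """Made-up stand-in for training a model and measuring validation loss."""
    return (learning_rate - 0.01) ** 2 * 1e4 + (units - 64) ** 2 / 1e3


# Hypothetical grid of candidate values.
grid = {"learning_rate": [0.1, 0.01, 0.001], "units": [32, 64, 128]}


def grid_search(objective, grid):
    """Evaluate every combination in the grid and keep the lowest score."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    for combo in product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = grid_search(validation_loss, grid)
print(best_params, best_score)
```

Because every combination is evaluated, the cost is the product of the list lengths: nine evaluations here.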

Random search

This is another hyperparameter tuning technique. Here too we set up a grid of hyperparameter values, as in grid search, but we select combinations of values from it at random.

Random search is usually faster than grid search because it trains only a sample of the combinations. Grid search is more thorough within the chosen grid.

That is because grid search tries every combination of the selected hyperparameter values, whereas random search skips some of them, and a skipped combination might be the one that improves model performance.

However, neither grid search nor random search uses information from past experiments to select the next combination of hyperparameter values.
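A random search over a grid can be sketched the same way in plain Python. As before, `validation_loss` is a made-up stand-in for a real training run and the grid values are hypothetical. Note that each trial is drawn independently, so nothing learned from earlier trials influences the next one.

```python
import random


def validation_loss(learning_rate, units):
    """Made-up stand-in for training a model and measuring validation loss."""
    return (learning_rate - 0.01) ** 2 * 1e4 + (units - 64) ** 2 / 1e3


# Hypothetical grid of candidate values.
grid = {"learning_rate": [0.1, 0.01, 0.001], "units": [32, 64, 128, 256]}


def random_search(objective, grid, n_trials, seed=0):
    """Sample n_trials random combinations and keep the lowest score."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        # Each trial is sampled independently of all previous results.
        params = {name: rng.choice(values) for name, values in grid.items()}
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(validation_loss, grid, n_trials=5)
print(best_params, best_score)
```

The number of trials is fixed in advance, so the cost no longer depends on the size of the grid, at the price of possibly never sampling the best combination.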

Bayesian optimization

As discussed already, setting good values for the model hyperparameters improves the model's performance, and most ML/DL models need some tuning. We repeat these basic points here only as a reminder.

The techniques discussed above, grid search and random search, evaluate each combination in the search space in isolation, without paying attention to the results of previous combinations.

With both techniques, tuning becomes time-consuming as the number of hyperparameters increases, because the search space grows with every hyperparameter we add.

Bayesian optimization can reduce the time spent finding good values for the model hyperparameters, and it often produces models that generalize better on the test data.

The Bayesian optimization technique takes the results of previously evaluated sets of hyperparameters into account when choosing the next set to evaluate.

For this reason, Bayesian optimization is often more efficient than the previous tuning techniques, especially when each training run is expensive.

By now, we know the kinds of processes used to tune model hyperparameters.

In this article, we use the Keras Tuner library for tuning. The next few sections focus on it, so that you get an idea of the library and a few of its methods.

Introduction to Keras tuner

Keras Tuner is a library for hyperparameter tuning with TensorFlow 2.0. It solves the pain points of searching for the most suitable hyperparameter values for our ML/DL models.

In short, Keras Tuner aims to find the best values for the hyperparameters of a specified ML/DL model with the help of tuners.

These tuners are like searching agents to find the right hyperparameter values. Keras tuner comes with the above-mentioned tuning techniques such as random search, Bayesian optimization, etc.

Keras Tuner Methods

At present, Keras Tuner provides four kinds of tuners.

- Bayesian Optimization

- Hyperband

- Random search

- Sklearn

We will discuss all these tuner snippets and other useful methods to build tuners in the next section.

In the coming section, we plan to discuss a list of steps to find the best model using a tuner.

Selecting Best Model Using Keras Tuner

In this section, you will learn all the steps needed to get the best hyperparameter values for a model. Let's go through them one by one.

Step 1: Install Libraries

First of all, install all required libraries to build our machine learning or deep learning model.

Step 2: Import Libraries

After installing libraries, we need to import those libraries and default libraries to use them.

Step 3: Load dataset

Whatever dataset we need to use for our problem statement to build our model, we have to load that dataset.

Step 4: Preprocessing

Real-world datasets are rarely of good quality: they contain noise, missing values, and so on. In this step, we apply a few preprocessing steps to turn them into quality data.

Step 5: Splitting data

We split the whole dataset into train and test sets, or into train, test, and validation sets.

Train dataset: Used for training the model. The model learns patterns from this data.

Test dataset: Used for testing the trained model. We measure performance on this data because the model applies the patterns it learned to examples it was not trained on.

Validation dataset: This is entirely new data. For example, suppose our dataset has information about bank customers from 2000 to 2010.

We use the 2000 to 2009 records for the training and test datasets, and keep the 2010 records to validate the trained model. Note that these two names are sometimes used the other way around: in Keras, metrics prefixed with val_, such as val_accuracy, are computed on whatever data is passed as validation_data during training.
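The bank example above can be sketched in plain Python. The records are made up, and `split_by_year` is a hypothetical helper written for this illustration: it holds out one year entirely and then shuffles the remaining records into train and test sets.

```python
import random


def split_by_year(records, holdout_year, test_fraction=0.2, seed=0):
    """Hold out one year entirely, then split the rest into train and test."""
    holdout = [r for r in records if r["year"] == holdout_year]
    rest = [r for r in records if r["year"] != holdout_year]
    rng = random.Random(seed)
    rng.shuffle(rest)  # shuffle so the train/test split is not ordered by year
    n_test = int(len(rest) * test_fraction)
    return rest[n_test:], rest[:n_test], holdout


# Hypothetical bank-customer records: 10 customers per year, 2000 to 2010.
records = [
    {"year": year, "customer_id": i}
    for year in range(2000, 2011)
    for i in range(10)
]

train, test, validation = split_by_year(records, holdout_year=2010)
print(len(train), len(test), len(validation))
```

Keeping the most recent year completely separate means the final check is done on data that played no part in training or in choosing hyperparameters.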

Step 6: Define the model

After splitting the dataset into a train and test, we have to define an ML/DL model for our problem statement.

Step 7: Search Space Definition

In this step, we define the search space: which hyperparameters we want to optimize, and for each one either a range of values or a short list of candidate values.
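With Keras Tuner, the model definition and the search space are usually written together in a model-building function that receives an `hp` object. The sketch below is one possible way to do it, not the only one. The input size of 20 features, the binary classification setup, and all of the ranges are assumptions made for illustration. The TensorFlow import sits inside the function so that the heavy import only happens when a model is actually built.

```python
def build_model(hp):
    """Build a small classifier whose hyperparameters are declared through `hp`."""
    from tensorflow import keras  # imported lazily: TensorFlow is a heavy import

    model = keras.Sequential()
    model.add(keras.Input(shape=(20,)))  # assumption: 20 input features

    # A range: between 1 and 3 hidden layers.
    for i in range(hp.Int("num_layers", min_value=1, max_value=3)):
        model.add(
            keras.layers.Dense(
                # A stepped range: 32, 64, ..., 256 units per layer.
                units=hp.Int(f"units_{i}", min_value=32, max_value=256, step=32),
                # A short list of candidate values.
                activation=hp.Choice("activation", ["relu", "tanh"]),
            )
        )

    model.add(
        keras.layers.Dropout(
            hp.Float("dropout", min_value=0.0, max_value=0.5, step=0.1)
        )
    )
    model.add(keras.layers.Dense(1, activation="sigmoid"))  # assumption: binary target

    model.compile(
        optimizer=keras.optimizers.Adam(
            hp.Choice("learning_rate", [1e-2, 1e-3, 1e-4])
        ),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Each call to `hp.Int`, `hp.Float` or `hp.Choice` both registers a hyperparameter in the search space and returns the value chosen for the current trial.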

Step 8: Searching for the best model with good hyperparameter values

In this step, Keras Tuner trains the model with different combinations of hyperparameter values.

Step 9: Get The Best Model

After completing the 8th step, we can get the best model with corresponding hyperparameter values and performance values.
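Steps 8 and 9 might look like the sketch below. It assumes the package is importable as `keras_tuner` (older releases used the name `kerastuner`), that `build_model` is a model-building function taking an `hp` argument, and that the data arrays already exist. The function name `tune`, the directory and project names, and the trial and epoch counts are hypothetical.

```python
def tune(build_model, x_train, y_train, x_val, y_val):
    """Run a random search and return the best hyperparameters and model."""
    import keras_tuner as kt  # assumption: installed as the keras-tuner package
    from tensorflow import keras

    tuner = kt.RandomSearch(
        build_model,
        objective="val_accuracy",
        max_trials=10,
        directory="tuning_logs",  # hypothetical folder for trial results
        project_name="demo",      # hypothetical project name
        overwrite=True,           # start fresh instead of resuming old trials
    )

    # Step 8: train one model per trial and score it on the validation data.
    tuner.search(
        x_train,
        y_train,
        epochs=20,
        validation_data=(x_val, y_val),
        callbacks=[keras.callbacks.EarlyStopping(monitor="val_loss", patience=3)],
    )

    # Step 9: retrieve the best hyperparameter values and the best model.
    best_hp = tuner.get_best_hyperparameters(num_trials=1)[0]
    best_model = tuner.get_best_models(num_models=1)[0]
    return best_hp.values, best_model
```

The same `search` and `get_best_...` calls work for the other tuners as well, so only the tuner construction changes between methods.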

In the next section, we will show how you can find the best hyperparameter with code.

Hyperparameter Tuning With Keras Tuner

Now we will go through the Keras Tuner methods and their arguments one by one. You can adapt them according to your needs. Let’s start with the Bayesian optimization tuner.

Bayesian Optimization Tuning Implementation With Keras

Arguments Explanation:

- hypermodel: Instance of HyperModel class (or callable that takes hyperparameters and returns a Model instance).

- objective: String. Name of model metric to minimize or maximize, e.g. "val_accuracy".

- max_trials: Int. Total number of trials (model configurations) to test at most. Note that the oracle may interrupt the search before max_trials models have been tested if the search space has been exhausted.

- num_initial_points: Int. The number of randomly generated samples as initial training data for Bayesian optimization.

- alpha: Float or array-like. Value added to the diagonal of the kernel matrix during fitting.

- beta: Float. The balancing factor of exploration and exploitation. The larger it is, the more explorative it is.

- seed: Int. Random seed.

- hyperparameters: HyperParameters class instance. Used to override (or register in advance) hyperparameters in the search space.

- tune_new_entries: Whether hyperparameter entries that are requested by the hypermodel but that were not specified in hyperparameters should be added to the search space, or not. If not, then the default value for these parameters will be used.

- allow_new_entries: Whether the hypermodel is allowed to request hyperparameter entries not listed in hyperparameters.

- **kwargs: Keyword arguments relevant to all Tuner subclasses. Please see the docstring for Tuner.
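Putting the arguments above together, constructing the tuner could look like this sketch. It assumes the package is importable as `keras_tuner` and that `build_model` is a model-building function taking an `hp` argument. The helper name `make_bayesian_tuner`, the directory and project names, and the specific argument values are illustrative only.

```python
def make_bayesian_tuner(build_model):
    """Create a Bayesian optimization tuner for the given model-building function."""
    import keras_tuner as kt  # assumption: installed as the keras-tuner package

    return kt.BayesianOptimization(
        build_model,
        objective="val_accuracy",
        max_trials=20,            # at most 20 model configurations
        num_initial_points=5,     # random trials before the Bayesian model takes over
        alpha=1e-4,               # added to the diagonal of the kernel matrix
        beta=2.6,                 # larger values explore more
        seed=42,
        directory="tuning_logs",  # hypothetical folder for trial results
        project_name="bayesian_demo",
    )
```

The returned tuner is then used through its `search` method, passing the same arguments you would pass to `model.fit`.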

Hyperband tuning implementation with Keras

Arguments Explanation:

- hypermodel: Instance of HyperModel class (or callable that takes hyperparameters and returns a Model instance).

- objective: String. Name of model metric to minimize or maximize, e.g. "val_accuracy".

- max_epochs: Int. The maximum number of epochs to train one model. It is recommended to set this to a value slightly higher than the expected time to convergence for your largest Model, and to use early stopping during training (for example, via tf.keras.callbacks.EarlyStopping).

- factor: Int. Reduction factor for the number of epochs and number of models for each bracket.

- hyperband_iterations: Int >= 1. The number of times to iterate over the full Hyperband algorithm. One iteration will run approximately max_epochs * (math.log(max_epochs, factor) ** 2) cumulative epochs across all trials. It is recommended to set this to as high a value as is within your resource budget.

- seed: Int. Random seed.

- hyperparameters: HyperParameters class instance. Can be used to override (or register in advance) hyperparameters in the search space.

- tune_new_entries: Whether hyperparameter entries that are requested by the hypermodel but that were not specified in hyperparameters should be added to the search space, or not. If not, then the default value for these parameters will be used.

- allow_new_entries: Whether the hypermodel is allowed to request hyperparameter entries not listed in hyperparameters.

- **kwargs: Keyword arguments relevant to all Tuner subclasses. Please see the docstring for Tuner.
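A sketch of constructing the Hyperband tuner follows, together with a small helper that evaluates the approximate epoch budget formula quoted for hyperband_iterations. It assumes the package is importable as `keras_tuner` and that `build_model` takes an `hp` argument. The helper names, the directory and project names, and the argument values are hypothetical.

```python
import math


def approx_epochs_per_iteration(max_epochs, factor):
    """Approximate cumulative epochs for one Hyperband iteration (formula above)."""
    return max_epochs * (math.log(max_epochs, factor) ** 2)


def make_hyperband_tuner(build_model):
    """Create a Hyperband tuner for the given model-building function."""
    import keras_tuner as kt  # assumption: installed as the keras-tuner package

    return kt.Hyperband(
        build_model,
        objective="val_accuracy",
        max_epochs=27,            # upper bound on epochs for any single model
        factor=3,                 # reduction factor between rounds
        hyperband_iterations=1,   # raise this if the budget allows
        seed=42,
        directory="tuning_logs",  # hypothetical folder for trial results
        project_name="hyperband_demo",
    )


# Rough budget for the settings used above.
print(round(approx_epochs_per_iteration(max_epochs=27, factor=3)))
```

Estimating the budget first helps in choosing max_epochs and hyperband_iterations values that fit the available compute time.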

Random search tuning implementation with Keras

Arguments Explanation:

- hypermodel: Instance of HyperModel class (or callable that takes hyperparameters and returns a Model instance).

- objective: String. Name of model metric to minimize or maximize, e.g. "val_accuracy".

- max_trials: Int. Total number of trials (model configurations) to test at most. Note that the oracle may interrupt the search before max_trials models have been tested.

- seed: Int. Random seed.

- hyperparameters: HyperParameters class instance. Can be used to override (or register in advance) hyperparameters in the search space.

- tune_new_entries: Whether hyperparameter entries that are requested by the hypermodel but that were not specified in hyperparameters should be added to the search space, or not. If not, then the default value for these parameters will be used.

- allow_new_entries: Whether the hypermodel is allowed to request hyperparameter entries not listed in hyperparameters.

- **kwargs: Keyword arguments relevant to all Tuner subclasses. Please see the docstring for Tuner.
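A sketch of constructing the random search tuner with these arguments is shown below. It assumes the package is importable as `keras_tuner` and that `build_model` takes an `hp` argument. The helper name, the directory and project names, and the values are illustrative. `executions_per_trial` is one of the keyword arguments shared by all tuners.

```python
def make_random_search_tuner(build_model):
    """Create a random search tuner for the given model-building function."""
    import keras_tuner as kt  # assumption: installed as the keras-tuner package

    return kt.RandomSearch(
        build_model,
        objective="val_accuracy",
        max_trials=10,            # at most 10 random configurations
        seed=42,                  # makes the sampled configurations repeatable
        executions_per_trial=2,   # train each configuration twice to reduce noise
        directory="tuning_logs",  # hypothetical folder for trial results
        project_name="random_search_demo",
    )
```

Random search makes a good baseline: it is simple to set up, and its results give a reference point for the more elaborate tuners.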

Sklearn tuning implementation with Keras

Arguments Explanation:

- oracle: An instance of the kerastuner.Oracle class. Note that for this Tuner, the objective for the Oracle should always be set to Objective('score', direction='max'). Also, Oracles that exploit Neural-Network-specific training (e.g. Hyperband) should not be used with this Tuner.

- hypermodel: Instance of HyperModel class (or callable that takes a Hyperparameters object and returns a Model instance).

- scoring: An sklearn scoring function. For more information, see sklearn.metrics.make_scorer. If not provided, the Model's default scoring will be used via model.score. Note that if you are searching across different Model families, the default scoring for these Models will often be different. In this case you should supply scoring here in order to make sure your Models are being scored on the same metric.

- metrics: Additional sklearn.metrics functions to monitor during search. Note that these metrics do not affect the search process.

- cv: An sklearn.model_selection Splitter class. Used to determine how samples are split up into groups for cross-validation.

- **kwargs: Keyword arguments relevant to all Tuner subclasses. Please see the docstring for Tuner.
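For scikit-learn models, a sketch could look like the following. It assumes a recent `keras_tuner` release, where the tuner is exposed as `kt.tuners.SklearnTuner` and the Bayesian oracle as `kt.oracles.BayesianOptimizationOracle`; older `kerastuner` releases used different names, so check your installed version. The model families, the ranges, and the helper names are made up for illustration.

```python
def build_sklearn_model(hp):
    """Return a scikit-learn classifier chosen and configured through `hp`."""
    from sklearn import ensemble, linear_model

    model_type = hp.Choice("model_type", ["random_forest", "ridge"])
    if model_type == "random_forest":
        return ensemble.RandomForestClassifier(
            n_estimators=hp.Int("n_estimators", min_value=10, max_value=50, step=10),
            max_depth=hp.Int("max_depth", min_value=3, max_value=10),
        )
    return linear_model.RidgeClassifier(
        alpha=hp.Float("alpha", min_value=1e-3, max_value=1.0, sampling="log")
    )


def make_sklearn_tuner():
    """Create a tuner that cross-validates scikit-learn models."""
    import keras_tuner as kt  # assumption: recent keras-tuner release
    from sklearn import metrics, model_selection

    return kt.tuners.SklearnTuner(
        oracle=kt.oracles.BayesianOptimizationOracle(
            objective=kt.Objective("score", "max"),  # required objective for this tuner
            max_trials=10,
        ),
        hypermodel=build_sklearn_model,
        scoring=metrics.make_scorer(metrics.accuracy_score),
        cv=model_selection.StratifiedKFold(5),
        directory="tuning_logs",  # hypothetical folder for trial results
        project_name="sklearn_demo",
    )
```

With this tuner, `tuner.search(X_train, y_train)` takes the training arrays directly, since the validation splits come from the `cv` splitter rather than from separate validation data.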

The sections above described the arguments of the various Keras tuners. You can use any of these tuners in your own model-building tasks.

Conclusion

We hope you learned the importance of finding the best hyperparameters for machine learning and deep learning models. You also learned how to get good performance by performing hyperparameter tuning with Keras Tuner.

Experiment with all the Keras Tuner types while you are building models and see how your experiments work out.

All the best 🙂
