How To Build an Effective Email Spam Classification Model with spaCy in Python

Building an Email Spam Classifier with spaCy in Python

The amount of data captured in different forms, including numerical, text, and image data, is increasing exponentially.

Numerical data has long been the main source for building machine learning and statistical models. But as text data grows, people increasingly use natural language processing (NLP) techniques to extract meaningful information from it and gain insights that support key business decisions.

Generating insights from text data is harder than generating insights from numerical data because text is unstructured. So the first step in processing text is to convert it into a structured form.

Several Python libraries help with processing text data, such as NLTK, spaCy, and TextBlob.

In this article, we use the spaCy natural language processing library to build an email spam classification model that identifies whether an email is spam, in just a few lines of code.

Email spam classification model building

We check our email regularly, but not every email that arrives in our account appears in the inbox. Many of them go to the spam or junk folder.

Ever wondered how that happens?

How are emails classified and routed to the inbox or the spam folder based on their text?

Before an email reaches your inbox, Google runs its own email classifier, which decides whether the received email should go to the inbox or to spam.

If you’re still wondering how an email classifier works, don’t worry.

In this article, we are going to build an email spam classifier in Python that classifies whether a given email is spam.

There are many ways to build an email classifier using different natural language processing algorithms; we could use scikit-learn or any other package. In this article, however, we are going to use the spaCy library to build the email classifier.

The main advantage of spaCy is that its code is well optimized and it comes with many built-in options, which help us build a model quickly and with minimal code.

Without further delay, let’s start building the email classification model.

How to build an efficient email classifier with spaCy in Python

Load spam-ham email dataset

To build the email classifier, we use an email spam dataset downloaded from Kaggle’s NLP datasets.

Let’s load the dataset using the pandas `read_csv` method.
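A minimal pandas sketch of this step, assuming the CSV already has the two columns `label` and `text` described below. The file name `spam.csv` mentioned in the comment is a hypothetical placeholder; a tiny inline sample read through `io.StringIO` stands in for the real file so the snippet runs on its own.

```python
import io

import pandas as pd

# Tiny inline stand-in for the downloaded file. With the real data you would
# pass its path instead, e.g. pd.read_csv("spam.csv") (hypothetical name).
SAMPLE_CSV = """label,text
ham,Are we still meeting for lunch today?
spam,WINNER! You have won a free prize. Call now to claim.
ham,Ok I'll call you when I get home.
"""

# Load the emails into a DataFrame with the columns "label" and "text".
email_df = pd.read_csv(io.StringIO(SAMPLE_CSV))

print(email_df.shape)                    # (3, 2)
print(email_df["label"].value_counts())  # number of ham and spam rows
```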

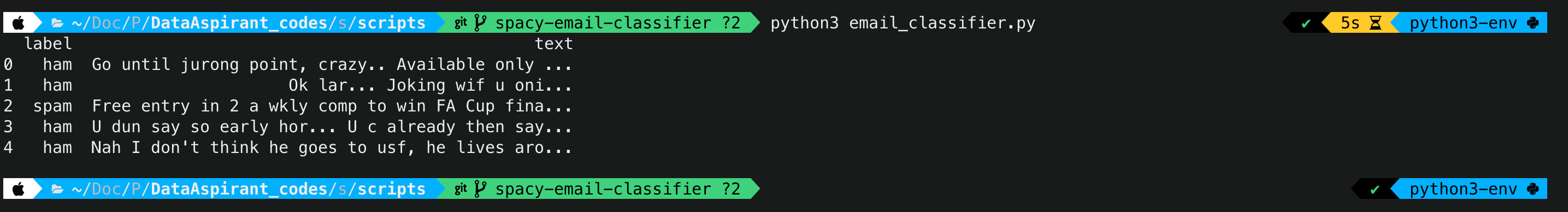

Email spam classification load dataset output

Let’s quickly check the attributes of the loaded dataset. The dataset has two columns: one contains the label, the other contains the text.

- label: Identifies whether the text is spam or ham.

- text: The email text

Now let’s check the total number of observations in the loaded dataset and look at the spam and ham distribution.

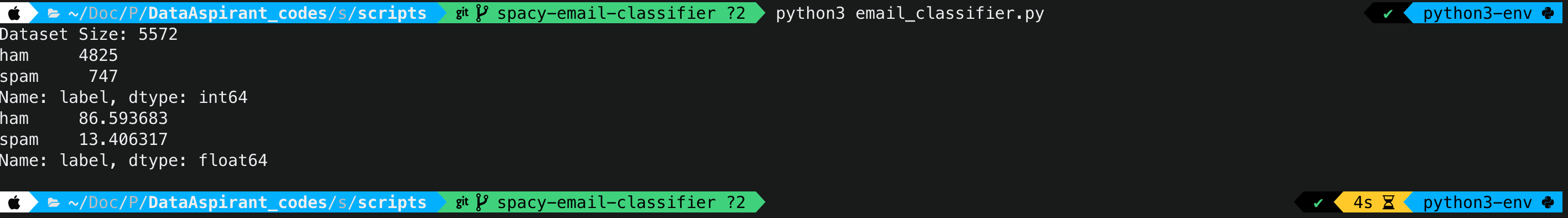

Email spam & ham dataset size

The loaded data has 5572 observations:

- ham count: 4825

- spam count: 747

Spam and ham distribution

The graph above shows the ham and spam distribution: most observations belong to the ham class, so the dataset is imbalanced.
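The imbalance is easier to judge as proportions. A quick calculation from the counts above:

```python
# Class counts reported for the loaded dataset.
ham_count = 4825
spam_count = 747
total = ham_count + spam_count

# Share of each class in the whole dataset.
ham_share = ham_count / total
spam_share = spam_count / total

print(total)                      # 5572
print(f"ham:  {ham_share:.1%}")   # ham:  86.6%
print(f"spam: {spam_share:.1%}")  # spam: 13.4%
```

In other words, a trivial model that always answered "ham" would already be right about 86.6% of the time, which is worth keeping in mind when reading the accuracy numbers later.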

Create a spaCy text categorization model pipeline

To build models with spaCy, you can either load an existing pretrained pipeline or create an empty model and add the modeling steps to it as pipeline components.

Let’s go through the pipeline code step by step.

First, we create an empty spaCy model, passing the language code for English (en).

Next, we create a pipeline component that tells the model to perform text classification.

In the component’s config, we mark the classes as exclusive, which means each text belongs to exactly one target class: in our case, spam or ham. We also choose the bow architecture, which means the model uses a bag-of-words approach.

spaCy offers other built-in architectures as well, but for this article we use the bag-of-words approach.

Next, we add the created text_cat component to our empty model.

At this stage, we have a model that is set up to perform text classification using the bag-of-words approach.

Next, we add the target classes spam and ham to the text categorization model.
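A minimal sketch of this pipeline, assuming spaCy v3. In v3, creating the component and adding it to the model happen in a single `nlp.add_pipe` call, and the `textcat` component is single-label, so exactly one class is true per text. The bag-of-words model is selected through the component’s `model` config; the registered name `spacy.TextCatBOW.v2` is an assumption that holds for many 3.x releases (newer releases also register a `v3` variant).

```python
import spacy

# Bag-of-words model config for the text classifier (spaCy v3).
# The "v2" version suffix is an assumption; check your spaCy release.
BOW_CONFIG = {
    "model": {
        "@architectures": "spacy.TextCatBOW.v2",
        "exclusive_classes": True,  # each email is exactly one of ham/spam
        "ngram_size": 1,            # unigram bag of words
        "no_output_layer": False,
    }
}

# Empty English pipeline: only a tokenizer, no trained components.
nlp = spacy.blank("en")

# "textcat" is spaCy's single-label (mutually exclusive) text classifier.
textcat = nlp.add_pipe("textcat", config=BOW_CONFIG)

# Register the two target classes.
textcat.add_label("ham")
textcat.add_label("spam")

print(nlp.pipe_names)  # ['textcat']
print(textcat.labels)  # ('ham', 'spam')
```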

In this article, we don’t apply any NLP preprocessing techniques to the text.

Before building a text model, people generally apply a number of preprocessing steps, such as:

- Creating tokens

- Cleaning tokens

- Removing stop words

- Lemmatization

- Stemming

We skip all of these here, but you can apply them before modeling if you want, and you can run all of these NLP preprocessing techniques in one go. For the code, check the article on implementing popular natural language preprocessing techniques.

Split train and test datasets

We will split the loaded data into two separate datasets.

- Train dataset: For training the text categorization model.

- Test dataset: For validating the model’s performance.

To split the data, we use scikit-learn’s train_test_split method from the model_selection module, with the test data set to 33% of the loaded data.

For example, if the loaded dataset had 100 records and we set the test size to 30%, train_test_split would put 70 records in the training set and the remaining 30 in the test set.

This is the same split step you would use when implementing random forest or decision tree models with scikit-learn.
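With scikit-learn this is a single call, for example `train_test_split(texts, labels, test_size=0.33)`. To show where the split sizes come from, here is a plain-Python sketch that mirrors scikit-learn’s sizing rule for a float `test_size`: the test part gets ceil(test_size * n) rows and the training part gets the rest. It does not reproduce scikit-learn’s exact shuffle, and `split_indices` is a hypothetical helper. With the 5572 observations loaded earlier, a 33% test size gives 3733 training rows and 1839 test rows.

```python
import math
import random


def split_indices(n_samples, test_size, seed=42):
    """Shuffle row indices and cut them into train and test parts.

    Hypothetical helper. Sizing follows scikit-learn's rule for a float
    test_size: ceil(test_size * n_samples) rows go to the test part.
    """
    n_test = math.ceil(test_size * n_samples)
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = split_indices(5572, test_size=0.33)
print(len(train_idx), len(test_idx))  # 3733 1839
```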

Creating the model training data

Now we have two datasets: one for training and one for testing our model.

Unlike scikit-learn models, spaCy does not accept the target as a single column; we need to explicitly create the targets as boolean values, one per class.

That is, for each email text, we state which class is true and which is false.

Let’s do that in the code below.

Basically, we are creating one-hot encodings for the target categories: two boolean labels per email, with True for the actual label and False for the other label.

Now we have the features and targets for training, but first we need to combine them into a single dataset to build the email classification model.

We take the features (email text) and the converted labels (booleans) and join them with the zip function. We apply the same approach to both the training and test datasets.
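A minimal sketch of the label conversion and the zip step. The toy texts are hypothetical stand-ins for the real training split, `to_cats` is a hypothetical helper name, and the `{"cats": {...}}` annotation dict is the format spaCy’s training utilities accept for text categories.

```python
def to_cats(labels):
    """One-hot encode each label as a dict of booleans, one key per class."""
    return [{"ham": label == "ham", "spam": label == "spam"} for label in labels]


# Hypothetical toy rows standing in for the real training split.
train_texts = ["Are we still meeting for lunch today?", "WINNER! Claim your free prize now."]
train_labels = ["ham", "spam"]

train_cats = to_cats(train_labels)

# Pair each email text with its annotation dict.
train_data = list(zip(train_texts, [{"cats": cats} for cats in train_cats]))
print(train_data[1])
# ('WINNER! Claim your free prize now.', {'cats': {'ham': False, 'spam': True}})
```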

Creating a training function

Now that we have both training and test data, let’s create a train function that takes the following input parameters:

- model: Created empty model

- train data: Created train data

- optimizer: Optimizer (will create before calling the train function)

- batch size: Size of each batch

- epochs: Number of epochs (passes over the training data)

Let’s go through the function step by step.

For each epoch, we shuffle the data using random.shuffle and then split it into batches. For each batch, we update the model using the optimizer, and at the end we record the losses.

The optimizer updates the model’s weights after each batch in the direction that reduces the loss.

To build the email classifier, we create the optimizer and run the train function with a batch size of 5 and 10 epochs.
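A minimal sketch of such a train function, assuming spaCy v3, where `nlp.update` takes `Example` objects rather than raw (text, annotations) pairs and `nlp.initialize` returns the optimizer. The toy training rows are hypothetical, and the pipeline uses spaCy’s default textcat model for brevity; pass a bag-of-words model config to `add_pipe` to match the setup described earlier.

```python
import random

import spacy
from spacy.training import Example
from spacy.util import minibatch


def train(model, train_data, optimizer, batch_size, epochs):
    """Train a spaCy pipeline and return the textcat loss of each epoch."""
    epoch_losses = []
    for _ in range(epochs):
        random.shuffle(train_data)  # new example order every epoch
        losses = {}
        for batch in minibatch(train_data, size=batch_size):
            examples = [
                Example.from_dict(model.make_doc(text), annotations)
                for text, annotations in batch
            ]
            # One optimizer step on this batch; losses accumulate in the dict.
            model.update(examples, sgd=optimizer, losses=losses)
        epoch_losses.append(losses["textcat"])
    return epoch_losses


# Hypothetical toy data in the (text, {"cats": ...}) format.
train_data = [
    ("Are we still meeting for lunch today?", {"cats": {"ham": 1.0, "spam": 0.0}}),
    ("Ok I'll call you when I get home.", {"cats": {"ham": 1.0, "spam": 0.0}}),
    ("See you at the office tomorrow.", {"cats": {"ham": 1.0, "spam": 0.0}}),
    ("WINNER! Claim your free prize now.", {"cats": {"ham": 0.0, "spam": 1.0}}),
    ("Free entry! Text WIN to claim cash.", {"cats": {"ham": 0.0, "spam": 1.0}}),
    ("Urgent: you have won a free holiday.", {"cats": {"ham": 0.0, "spam": 1.0}}),
]

nlp = spacy.blank("en")
textcat = nlp.add_pipe("textcat")
textcat.add_label("ham")
textcat.add_label("spam")

# initialize() sets up the model weights and returns the optimizer.
optimizer = nlp.initialize(
    lambda: [Example.from_dict(nlp.make_doc(t), a) for t, a in train_data]
)

# Batch size 5 and 10 epochs, as in the text.
loss_history = train(nlp, train_data, optimizer, batch_size=5, epochs=10)
print(loss_history)
```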

Let’s look at the losses we get.

Email spam classifier losses

Creating a prediction function

Now that we have trained the model, we can check how well it performs.

For that, we need a prediction function, but first let’s check what the model predicts for a single email text.

Email spaCy spam classifier sample predictions

For the above email text, the actual label is ham, and our model assigns it a high probability: nearly 99% for ham and 1% for spam. So the model classifies this email correctly.

Now let’s write a general function that takes the model and email texts and predicts their labels.

The prediction function takes two parameters: the model and the email texts (the email content).

Inside the function, we first tokenize each email text and store the resulting documents in docs.

Next, we get the textcat component we created and use it to predict the class, ham or spam, for each text.

The scores give the probabilities for both classes. To pick the label, we take the class with the highest probability using argmax, and then return the predictions.
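A sketch of the prediction function, assuming spaCy v3. It follows the steps above: tokenize the texts, score them with the textcat component’s `predict` method, and take the argmax of each row of scores. The tiny initialized pipeline is only a hypothetical stand-in for the trained model, so its probabilities are not meaningful; with the trained model, `doc.cats` gives per-class probabilities like those in the sample prediction.

```python
import spacy
from spacy.training import Example


def predict(model, texts):
    """Predict the most probable category label for each email text."""
    # Tokenize only; the textcat component is applied explicitly below.
    docs = [model.make_doc(text) for text in texts]
    textcat = model.get_pipe("textcat")
    scores = textcat.predict(docs)  # one row of class scores per text
    best = scores.argmax(axis=1)    # column index of the highest score
    return [textcat.labels[i] for i in best]


# Stand-in pipeline: initialized but untrained, so scores are not meaningful.
nlp = spacy.blank("en")
textcat = nlp.add_pipe("textcat")
textcat.add_label("ham")
textcat.add_label("spam")
init_examples = [
    Example.from_dict(nlp.make_doc("See you at lunch."), {"cats": {"ham": 1.0, "spam": 0.0}}),
    Example.from_dict(nlp.make_doc("Claim your free prize!"), {"cats": {"ham": 0.0, "spam": 1.0}}),
]
nlp.initialize(lambda: init_examples)

# Single text: doc.cats maps each class to its probability.
doc = nlp("Ok I'll call you when I get home.")
print(doc.cats)

# Several texts: one predicted label per email.
labels = predict(nlp, ["Free entry! Text WIN to claim cash.", "See you tomorrow."])
print(labels)
```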

Spam Email Classifier Model Evaluation

To measure the performance of the email classifier we built, we use two metrics: accuracy and the confusion matrix.

Accuracy

Now let’s call the prediction function on the training and test datasets to measure the model’s accuracy.

To calculate the accuracy, we use scikit-learn’s accuracy_score method, which takes two parameters: the actual labels and the predicted labels.
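Accuracy is simply the fraction of predictions that match the actual labels. This plain-Python sketch computes the same value as scikit-learn’s `accuracy_score(y_true, y_pred)` for lists of labels; the labels are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predicted labels that match the actual labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical labels: 4 of the 5 predictions are correct.
actual = ["ham", "ham", "spam", "ham", "spam"]
predicted = ["ham", "ham", "spam", "spam", "spam"]
print(accuracy(actual, predicted))  # 0.8
```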

Email spam classifier accuracy

| Dataset | Observations | Accuracy |
|---|---|---|
| Training dataset | 3733 | 99% |
| Test dataset | 1839 | 98% |

The model reaches 99% accuracy on the training dataset and 98% on the test dataset.

However, accuracy alone is not a reliable way to judge whether a model is good.

Let me give you an example.

Let’s say we are predicting whether a bank’s customers will default. In the data, the default rate is low, say 10%. Suppose you build a model to predict whether a customer will default, and it achieves 90% accuracy.

Do you think the model is good?

Take a moment to think about that. The data itself says 90% of people will not default and 10% will. If we simply predict that nobody defaults, without building any machine learning model, the accuracy is already 90%.
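The default example can be checked in a few lines. This sketch uses hypothetical data for 100 customers, 10 of whom default, and a "model" that always predicts the majority class.

```python
# Hypothetical bank data: 100 customers, 10 of whom default.
actual = ["default"] * 10 + ["no_default"] * 90

# "Model" that ignores its input and always predicts the majority class.
predicted = ["no_default"] * len(actual)

correct = sum(a == p for a, p in zip(actual, predicted))
baseline_accuracy = correct / len(actual)
print(baseline_accuracy)  # 0.9

# Yet it does not catch a single customer who defaults.
caught_defaults = sum(a == p == "default" for a, p in zip(actual, predicted))
print(caught_defaults)  # 0
```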

To handle these kinds of issues, we use other metrics that show whether the accuracy we got is meaningful. One of them is the confusion matrix.

Confusion matrix

Confusion matrix on train dataset

Confusion matrix on test dataset

A confusion matrix shows, for each target class, how many observations were classified correctly and how many were misclassified. The confusion matrix plots above show this for the train and test datasets.
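A plain-Python sketch of how a confusion matrix is counted, using the same layout as scikit-learn’s `confusion_matrix(y_true, y_pred, labels=...)`: rows are actual classes and columns are predicted classes, so the diagonal holds correct predictions and every off-diagonal cell counts a misclassification. The labels are hypothetical.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Count predictions: rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


# Hypothetical labels for six emails.
actual = ["ham", "ham", "spam", "ham", "spam", "spam"]
predicted = ["ham", "spam", "spam", "ham", "ham", "spam"]

cm = confusion_matrix(actual, predicted, labels=["ham", "spam"])
for label, row in zip(["ham", "spam"], cm):
    print(label, row)
# ham [2, 1]
# spam [1, 2]
```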

Beyond these two, there are various other evaluation methods for checking the performance of machine learning models.

Complete code

You can fork the complete code for building the email spam classifier from our GitHub repository.

What Next?

Now that we have built the email spam classifier, you can use the same pipeline to solve similar problems, such as the ones below. All you need to change are the inputs and outputs, and apply a few natural language preprocessing techniques before modeling.

- Sentiment analysis classifier

- Document topic identification

- Sports or business news articles classification

Conclusion

In this article, we learned how to build an email spam classifier with spaCy in a few lines of code. Don’t stop here: try using the same code to build other text classification models.
