How Blending Technique Improves Machine Learning Model’s Performance

Have you tried blending algorithms, or the blending technique, in your machine learning projects to improve your model's performance?

If not, grab a cup of coffee and read on. By the end of this article, you will know how to use the blending technique in machine learning, which can take your model's performance to the next level.

The key advantage of blending algorithms is that we can use them for both classification and regression problems. In some cases, they are also used for:

- Anomaly Detection,

- Recommendation Systems,

- Image Recognition.


In blending, we build multiple models. The main reason for training multiple algorithms and combining their outputs by majority voting or a weighted average is that the combination reduces the influence of weaker models on the final prediction. As the saying goes:

“A single individual can be wrong, but a crowd is much less likely to be.”

This is just the tip of the iceberg. In this article, we will discuss blending algorithms, their core idea, how the blending technique works, and how it differs from stacking.

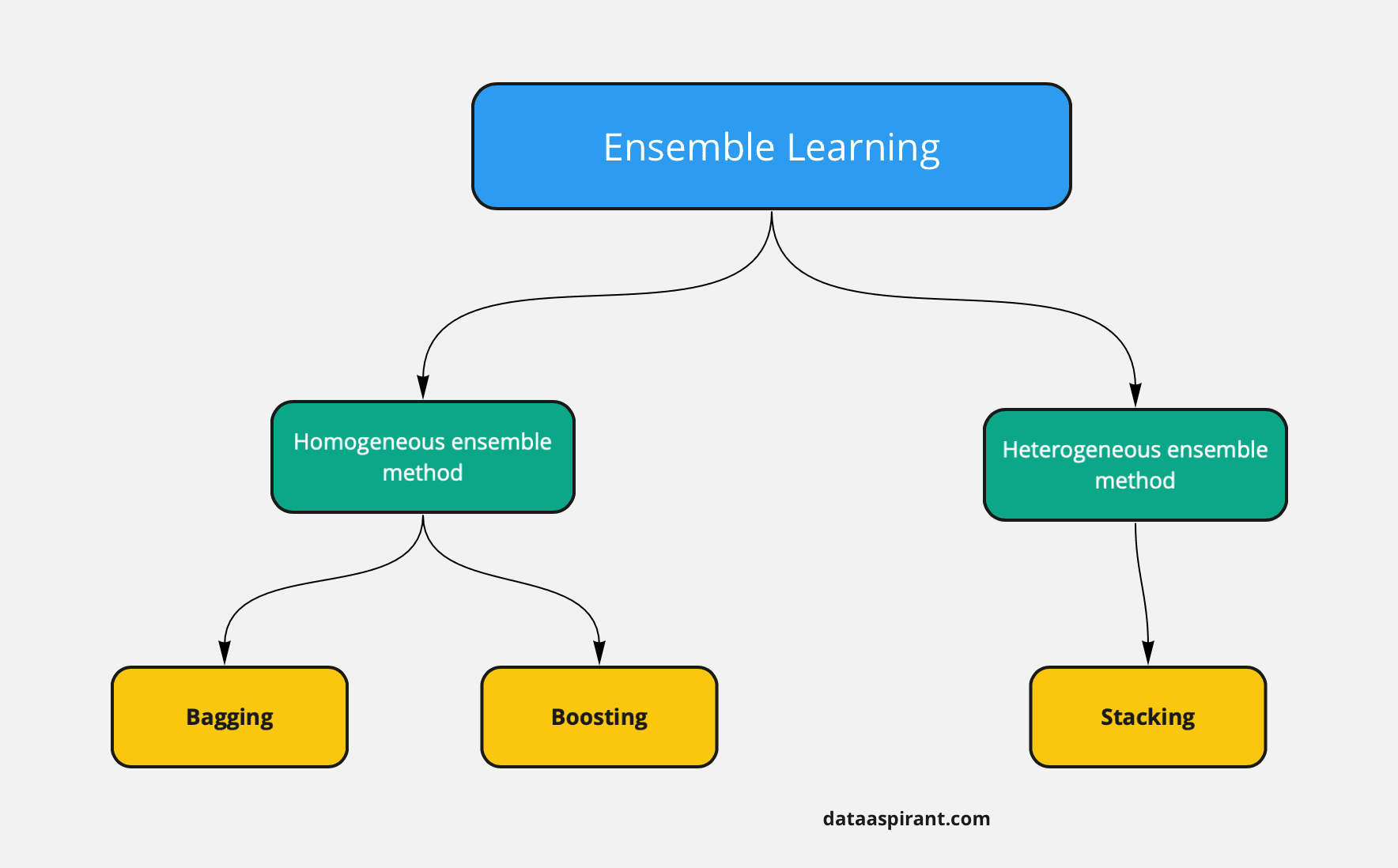

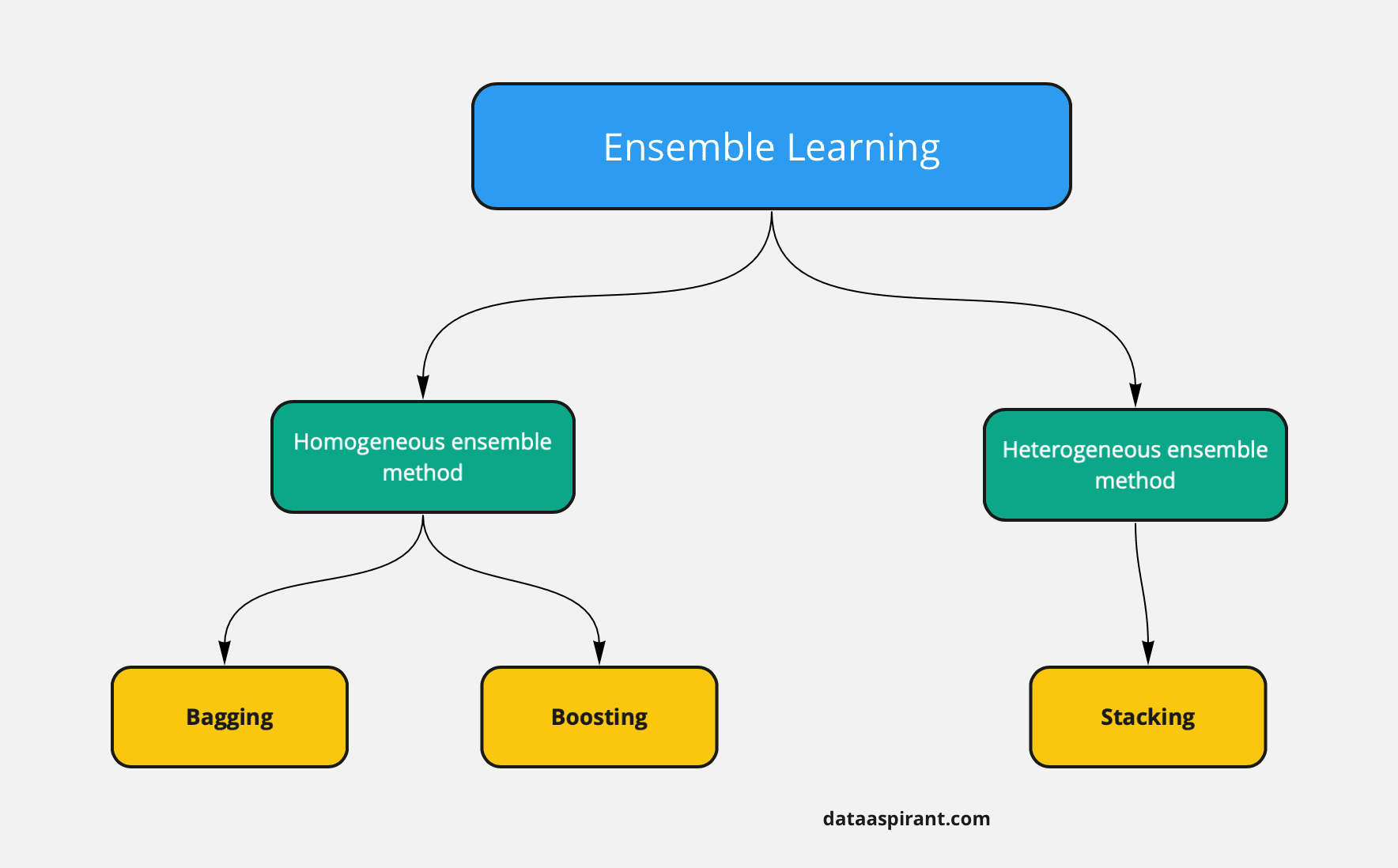

Blending is one of the ensemble methods used in building machine learning models.

What are Ensemble Methods

Before jumping directly to blending algorithms, let us understand the concept of ensemble methods.

Ensemble methods in machine learning are techniques in which multiple machine learning models are trained on the training data and their outputs are combined. Some ensembles, such as bagging, train many models in parallel on bootstrapped samples of the data; others, such as boosting, train the models one after another.

The final result is derived from the outputs of all the individual models.

In simple terms, ensemble models combine the strengths of multiple algorithms and return an output that takes every individual model into account.

There are various types of ensemble models in machine learning.

Ensemble models work differently from traditional single-model machine learning algorithms. As discussed above, multiple machine learning models are chosen, and the training data is fed to them.

Once training is complete, the outputs of all the models are aggregated, and the final output is decided by voting over the individual results or by taking a weighted average of them.
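To make the two aggregation rules concrete, here is a minimal NumPy sketch. The prediction arrays and the weights are made-up numbers for illustration, not outputs of real models, and the helper names `majority_vote` and `weighted_average` are illustrative.

```python
import numpy as np

# Class labels predicted by three hypothetical classifiers for five samples
# (one row per model, one column per sample).
class_preds = np.array([
    [0, 1, 1, 0, 1],
    [0, 1, 0, 0, 1],
    [1, 1, 1, 0, 0],
])

def majority_vote(preds):
    """Return the most frequent label in each column (ties go to the smaller label)."""
    return np.array([np.bincount(col).argmax() for col in preds.T])

# Numeric predictions from three hypothetical regressors for three samples.
reg_preds = np.array([
    [10.0, 20.0, 30.0],
    [12.0, 18.0, 33.0],
    [11.0, 22.0, 27.0],
])
weights = np.array([0.5, 0.3, 0.2])  # assumed weights, one per model

def weighted_average(preds, w):
    """Per-sample weighted average of the model outputs."""
    return np.average(preds, axis=0, weights=w)

print(majority_vote(class_preds))            # [0 1 1 0 1]
print(weighted_average(reg_preds, weights))
```

Majority voting suits class labels, while a weighted average suits numeric outputs (or class probabilities); the weights let stronger models count for more.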

The working mechanism is different for different ensemble models.

Stacking and voting ensembles use different machine learning algorithms as base models, and all of them are trained on the training data. Once they are trained and able to predict the test data, a voting ensemble obtains the final result by voting or weighted averaging, while a stacking ensemble passes the base models' predictions to a metamodel that produces the final result.

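On the other hand, bagging and boosting use a homogeneous set of base models: many copies of the same machine learning algorithm. In bagging, multiple random samples are drawn from the training data with replacement, a process known as bootstrapping, and each copy of the algorithm is trained on its own bootstrapped sample. In boosting, the copies are trained sequentially, with each new model focusing on the errors made by the previous ones.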
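A minimal sketch of bagging written by hand, assuming scikit-learn and NumPy are available: it draws bootstrap samples, fits one decision tree per sample, and combines the trees by majority vote. The synthetic dataset and the choice of ten trees are illustrative assumptions; scikit-learn's `BaggingClassifier` packages the same idea.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data; any feature matrix X and 0/1 label vector y would do.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
rng = np.random.default_rng(0)

n_models = 10
models = []
for _ in range(n_models):
    # Bootstrapping: draw len(X) row indices with replacement, so some rows
    # appear several times and others are left out of this model's sample.
    idx = rng.integers(0, len(X), size=len(X))
    models.append(DecisionTreeClassifier(random_state=0).fit(X[idx], y[idx]))

# Every copy of the same algorithm votes; for 0/1 labels the mean of the
# votes is the share of trees predicting 1 (ties go to class 1 here).
all_preds = np.array([m.predict(X) for m in models])  # shape (n_models, n_samples)
bagged_pred = (all_preds.mean(axis=0) >= 0.5).astype(int)
print("Accuracy on the training data (illustration only):", (bagged_pred == y).mean())
```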

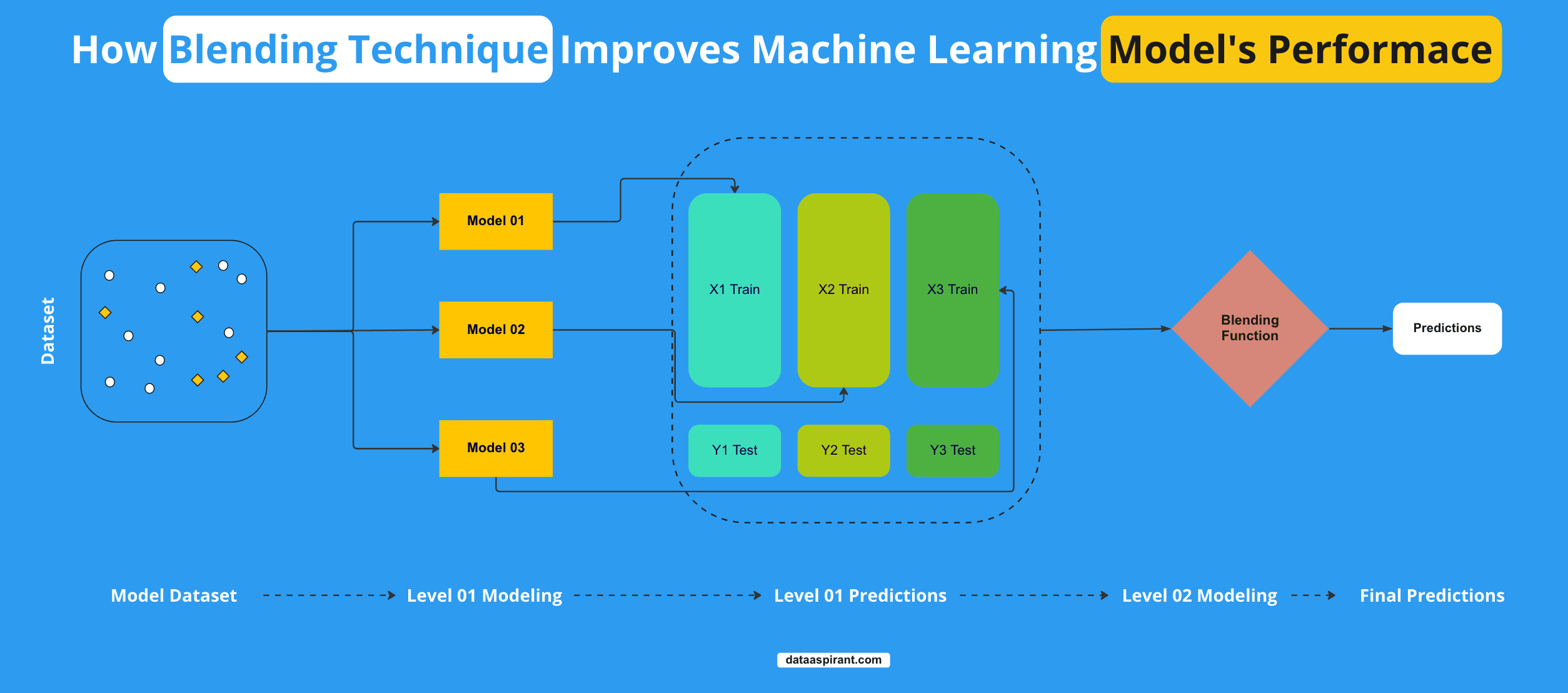

How the Blending Technique Works

Four types of ensemble methods are commonly used for model training and prediction:

- Voting ensembles,

- Bagging techniques,

- Boosting techniques,

- Stacking ensembles.

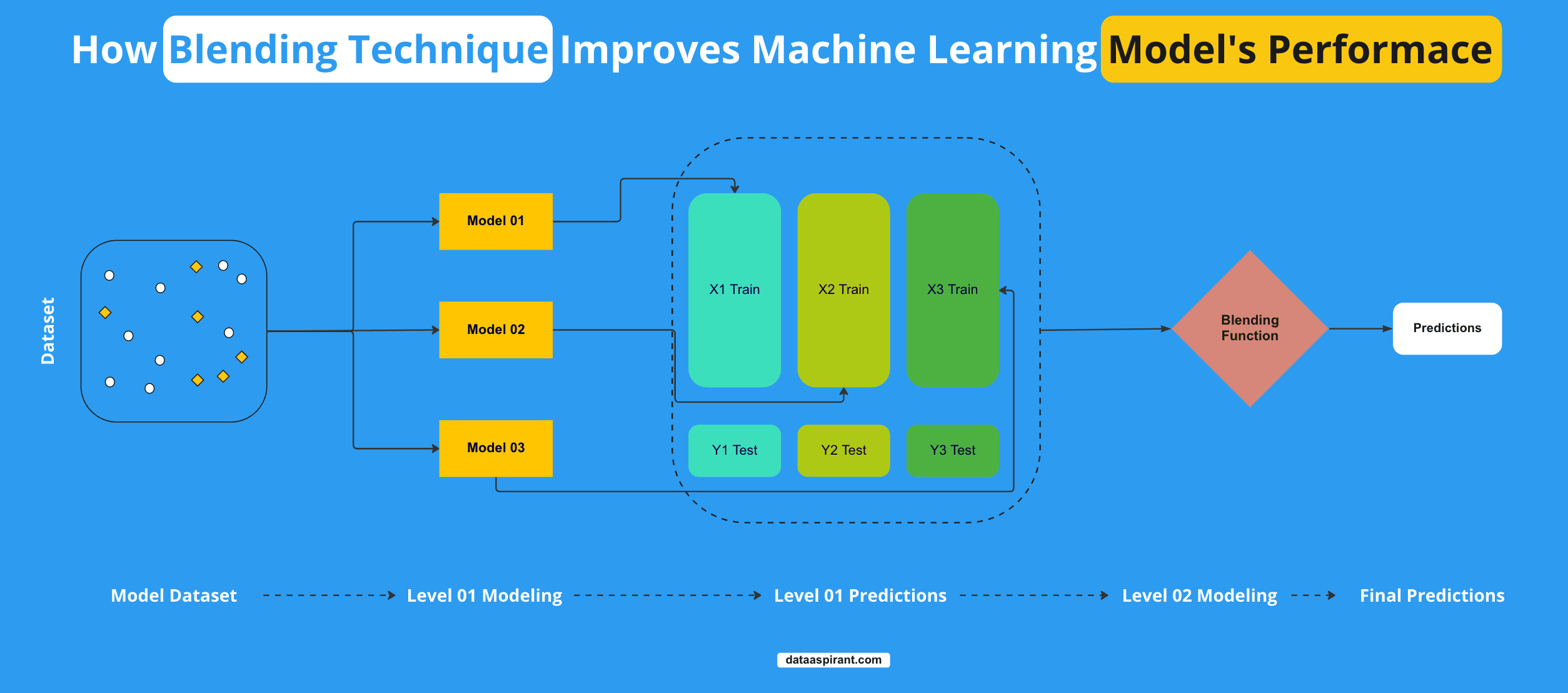

Blending is a variation of the stacking method, in which multiple layers of algorithms are used to train on and predict the data.

Unlike other ensemble methods, which use multiple algorithms in a single layer, stacking and blending use several layers of algorithms.

Typically, two levels (layers) are used.

In the first layer, we have multiple algorithms called base models, and in the second or last layer, we have one deciding algorithm called the metamodel.

The general approach in stacking and blending is that the data is first fed to the base models for training. The outputs of the base models are then provided to the metamodel as its training set, and once the metamodel is trained, its output is the final result of the ensemble.

Looking specifically at the blending approach, the dataset is fed to the base models first. The base models are trained on the training data provided, and once they are trained, they make predictions on the test set.

Whatever the base models predict for the test data is fed to the metamodel, the algorithm in the last layer, as its training data.

The metamodel is then trained on this data, and its predictions are considered the final results of the algorithm.

One important thing to note is that stacking and blending work in almost the same way. The difference is that stacking splits the training data into K parts (folds) before training the base models, whereas blending uses a conventional single split and trains the base models on one part of it.

Challenges With Blending Technique

In the previous sections, we saw that we first divide the dataset into two parts, a training set and a testing set, and then provide the training set to the base models for training.

Once the base models are trained, they make predictions on the test set, and these predictions are used as the training set of the metamodel. This is where the problem lies: the metamodel is trained on the base models' results for the test set.

Because the test set is now part of the metamodel's training data, no data is left that the complete model has never seen. If we evaluate the final model on that same test set, we are measuring its performance on data it was trained on.

This is data leakage: the evaluation results look better than they really are and are not a reliable estimate of how the model will perform on new data.

For example, let us say we have a dataset of 100 rows, and we split it into a training set of 70 rows and a testing set of 30 rows. We train the base model on the 70-row training set, and once it is trained, it is ready for the prediction phase.

To train the metamodel, we need training data for it. To get it, we simply use the base model to make predictions on the 30-row test set.

Whatever the base model outputs for those 30 rows is then used as the training set for the metamodel.

Here, the test set has been used to produce the metamodel's training data, so it can no longer serve as unseen data for evaluating the final model. This is a case of data leakage, and it needs to be resolved to get a reliable and accurate model.

To overcome this issue, we need to stop the data leakage by keeping the data used to train the metamodel separate from the data used to evaluate the final model.

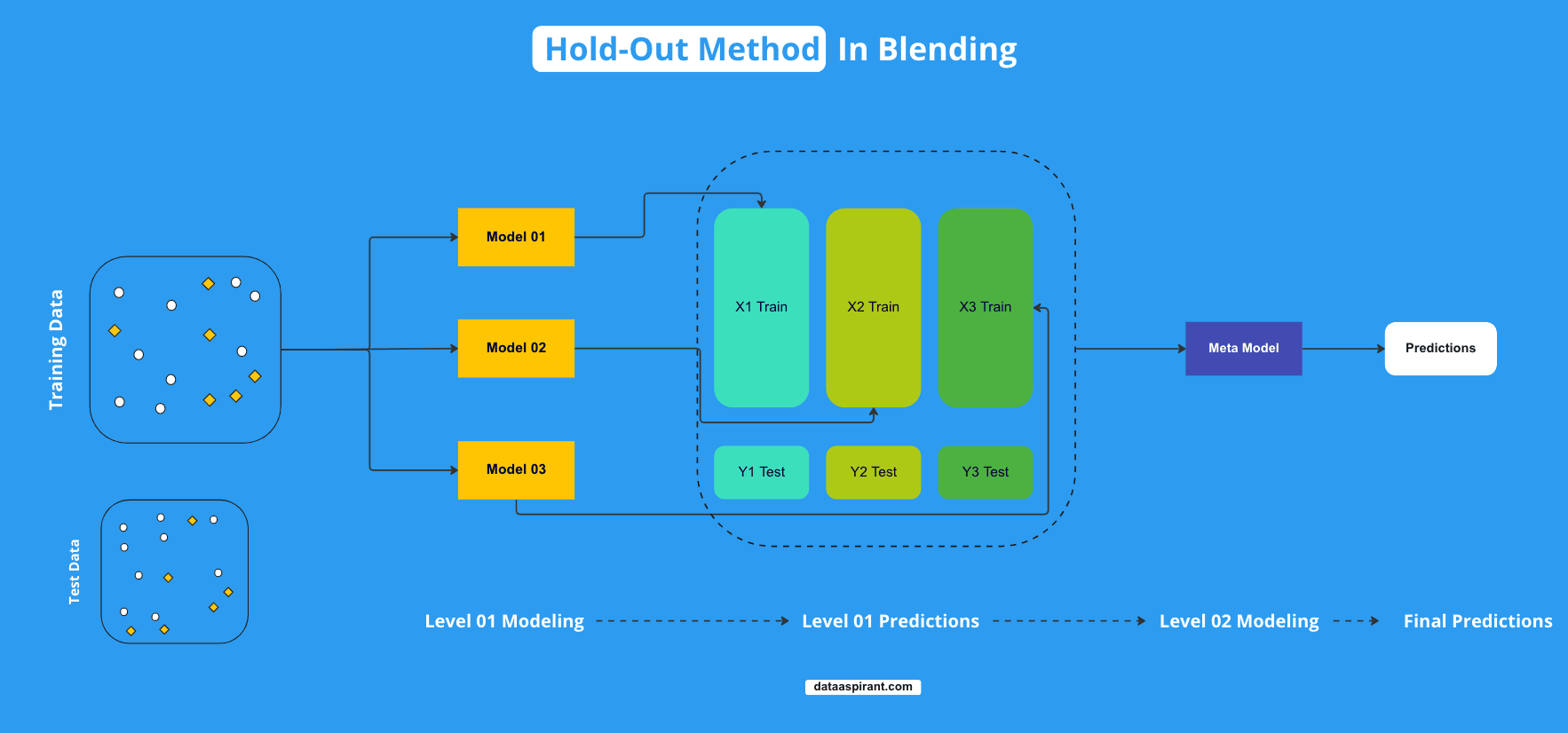

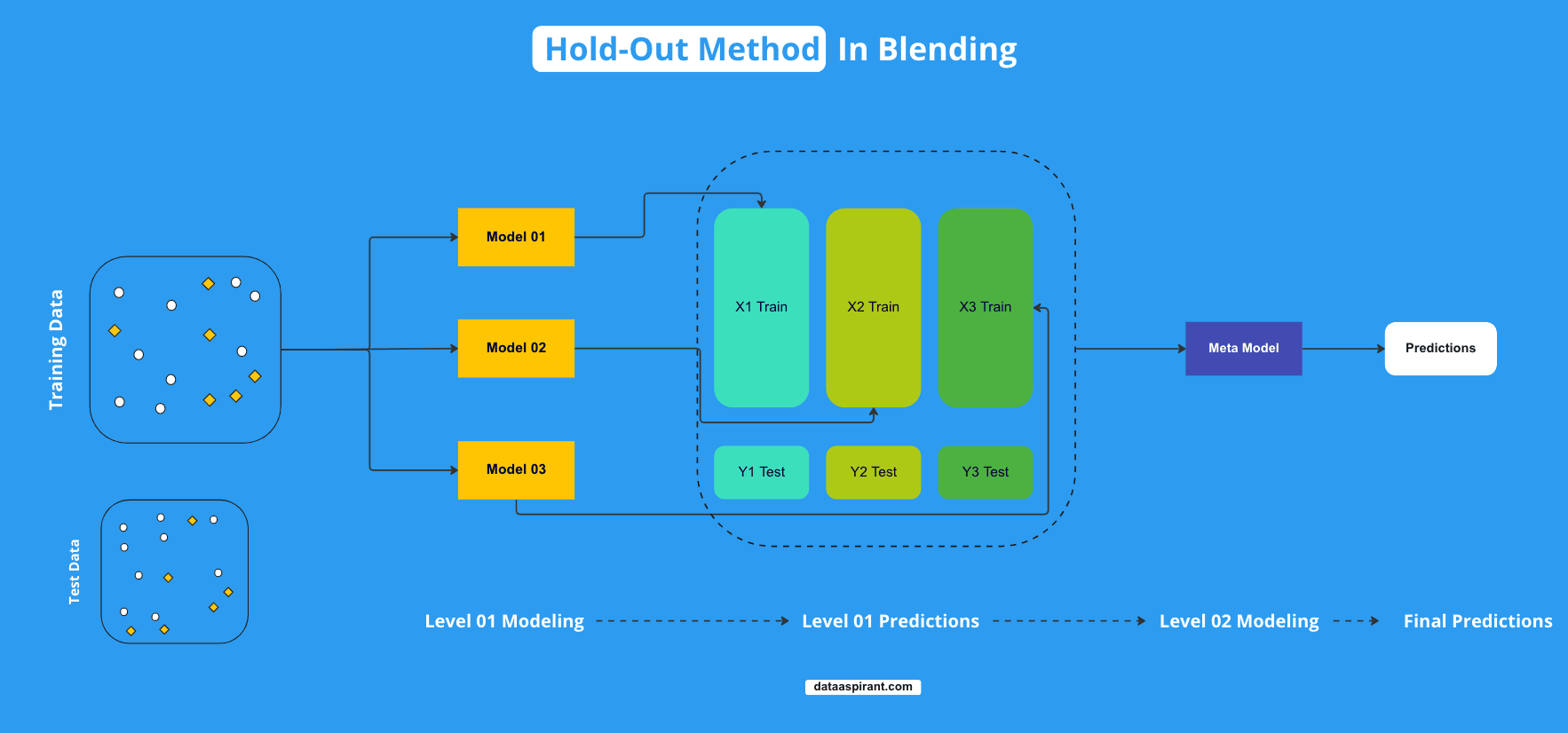

Hold-Out Method in Blending

In the previous section, we used the base models to make predictions on the test set and then used those predictions to train the meta-model, which leads to the problem of data leakage.

To overcome this issue, a method called the hold-out approach is used in blending models.

In the hold-out method of the blending algorithms, we change the way of splitting the data into training and testing sets.

Until now, we have been splitting the data into two parts, training and testing sets, but from now on, we will split the data into three parts: training, validation, and testing sets.

First, the whole dataset is split into two parts: training and testing datasets. Then the training dataset is split again into two parts: training and validation datasets.

This solves the data leakage problem. The training dataset is used to train the base models, and the validation dataset is used as the test dataset for the base models.

The base models' predictions on the validation dataset are given to the metamodel for training, and the actual test dataset from the first split is used only to evaluate the final model obtained after training the metamodel.
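The hold-out procedure can be sketched with scikit-learn as follows. The synthetic dataset, the 70/30 split ratios, the two base models, the logistic regression metamodel, and the helper name `meta_features` are illustrative choices, not prescriptions; the point is which split each layer sees.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic binary classification data standing in for a real dataset.
X, y = make_classification(n_samples=1000, n_features=10, random_state=42)

# First split: the test set is held out and used only for the final evaluation.
X_train_full, X_test, y_train_full, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42)
# Second split: carve a validation set out of the remaining training data.
X_train, X_val, y_train, y_val = train_test_split(
    X_train_full, y_train_full, test_size=0.3, random_state=42)

base_models = [
    RandomForestClassifier(n_estimators=100, random_state=42),
    GradientBoostingClassifier(random_state=42),
]

# Layer 1: the base models see only the training split.
for model in base_models:
    model.fit(X_train, y_train)

def meta_features(models, X_part):
    """One column per base model: its predicted probability of class 1."""
    return np.column_stack([m.predict_proba(X_part)[:, 1] for m in models])

# Layer 2: the metamodel learns from base-model predictions on the validation split.
meta_model = LogisticRegression()
meta_model.fit(meta_features(base_models, X_val), y_val)

# Final evaluation on the test split, which neither layer was trained on.
y_pred = meta_model.predict(meta_features(base_models, X_test))
print("Blended test accuracy:", accuracy_score(y_test, y_pred))
```

With 1,000 rows, this yields 490 training rows for the base models, 210 validation rows for the metamodel, and 300 untouched test rows.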

Difference Between Stacking and Blending

Stacking and blending are two different ensemble learning techniques used to combine the predictions of multiple base models (also called base learners) to improve overall predictive performance.

Let me explain each technique and its workflow.

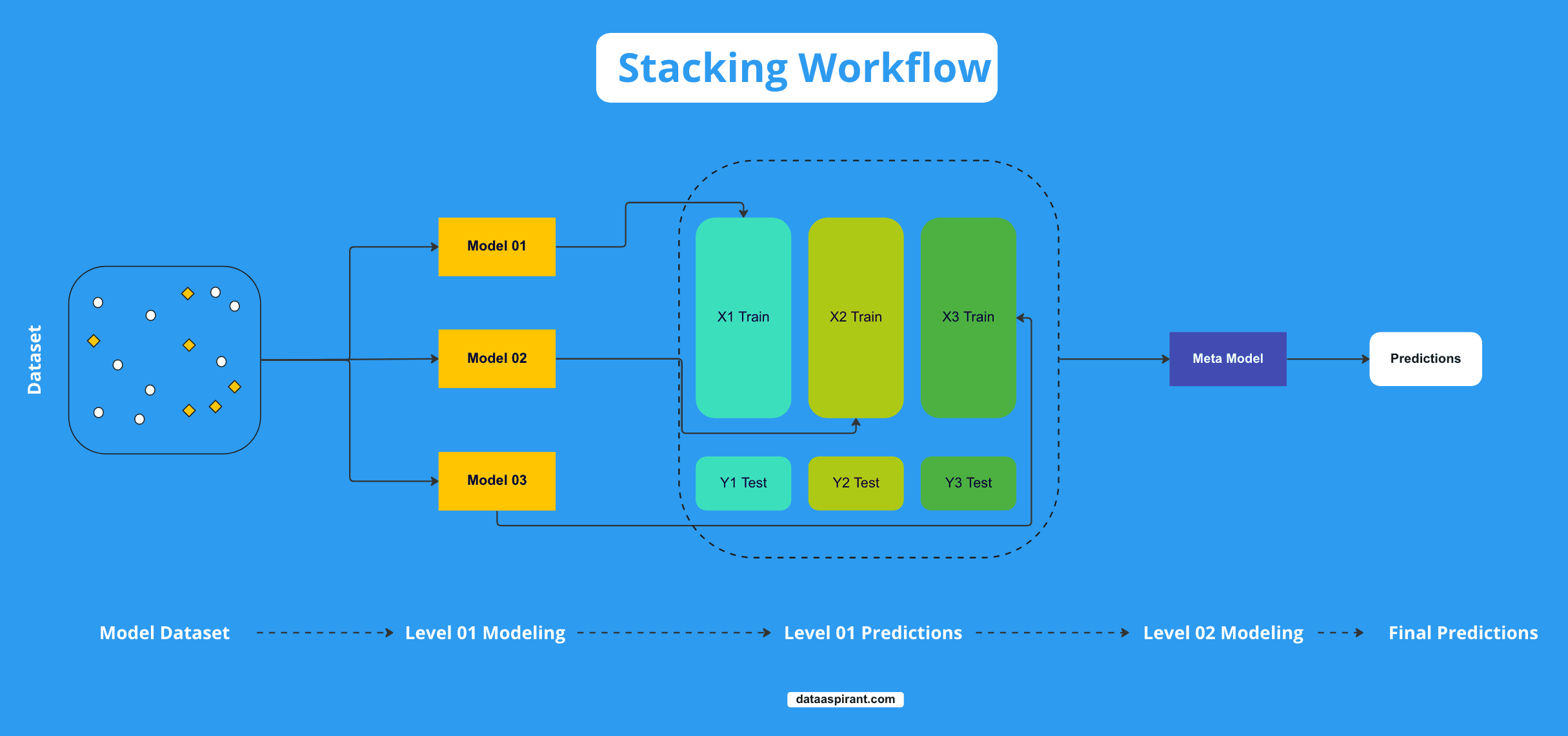

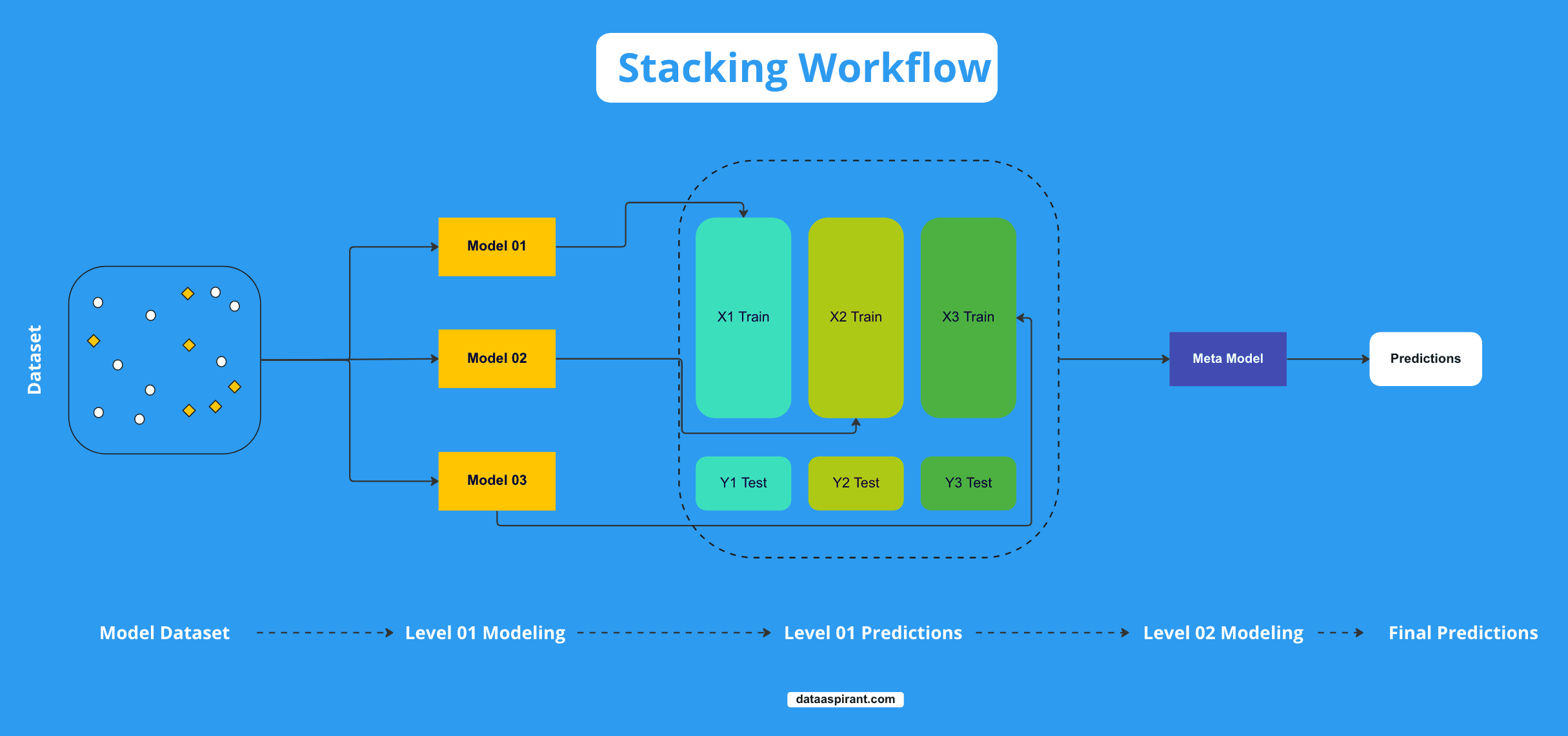

Stacking Workflow

Stacking, also known as stacked generalization, involves training a meta-model (also called a second-level model) on the predictions of multiple base models. The workflow for stacking is as follows:

Split the training data into K folds (for example, using K-fold cross-validation).

Train each base model on K-1 folds and make predictions on the remaining fold.

Repeat this process for all K folds, resulting in a set of out-of-fold predictions for each base model.

Combine these out-of-fold predictions to create a new dataset, which serves as the input features for the meta-model.

Train the meta-model on this new dataset, with the original target variable as the output.

To make predictions on new, unseen data, feed the data through the base models to generate predictions, and then use these predictions as input features for the meta-model.

In stacking, the focus is on leveraging the strengths of different base models by learning how to optimally combine their predictions through the meta-model.
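A sketch of this workflow in scikit-learn, using `cross_val_predict` to produce the out-of-fold predictions with K = 5. The dataset, base models, and metamodel are assumptions for illustration. Refitting the base models on the full training set before predicting new data is one common choice; it is what scikit-learn's `StackingClassifier` does.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

base_models = [
    RandomForestClassifier(n_estimators=100, random_state=0),
    KNeighborsClassifier(n_neighbors=15),
]

# Steps 1-4: out-of-fold class-1 probabilities with K = 5. Each training row
# is predicted by a model fitted on the other four folds, never on that row.
oof = np.column_stack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")[:, 1]
    for m in base_models
])

# Step 5: train the meta-model on the out-of-fold predictions.
meta_model = LogisticRegression().fit(oof, y_train)

# Step 6: refit the base models on all training data, then predict new data.
for m in base_models:
    m.fit(X_train, y_train)
test_features = np.column_stack([m.predict_proba(X_test)[:, 1] for m in base_models])
y_pred = meta_model.predict(test_features)
print("Stacked test accuracy:", accuracy_score(y_test, y_pred))
```

Unlike the hold-out blend, every one of the 700 training rows contributes to the meta-model's training data here, at the cost of fitting each base model K extra times.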

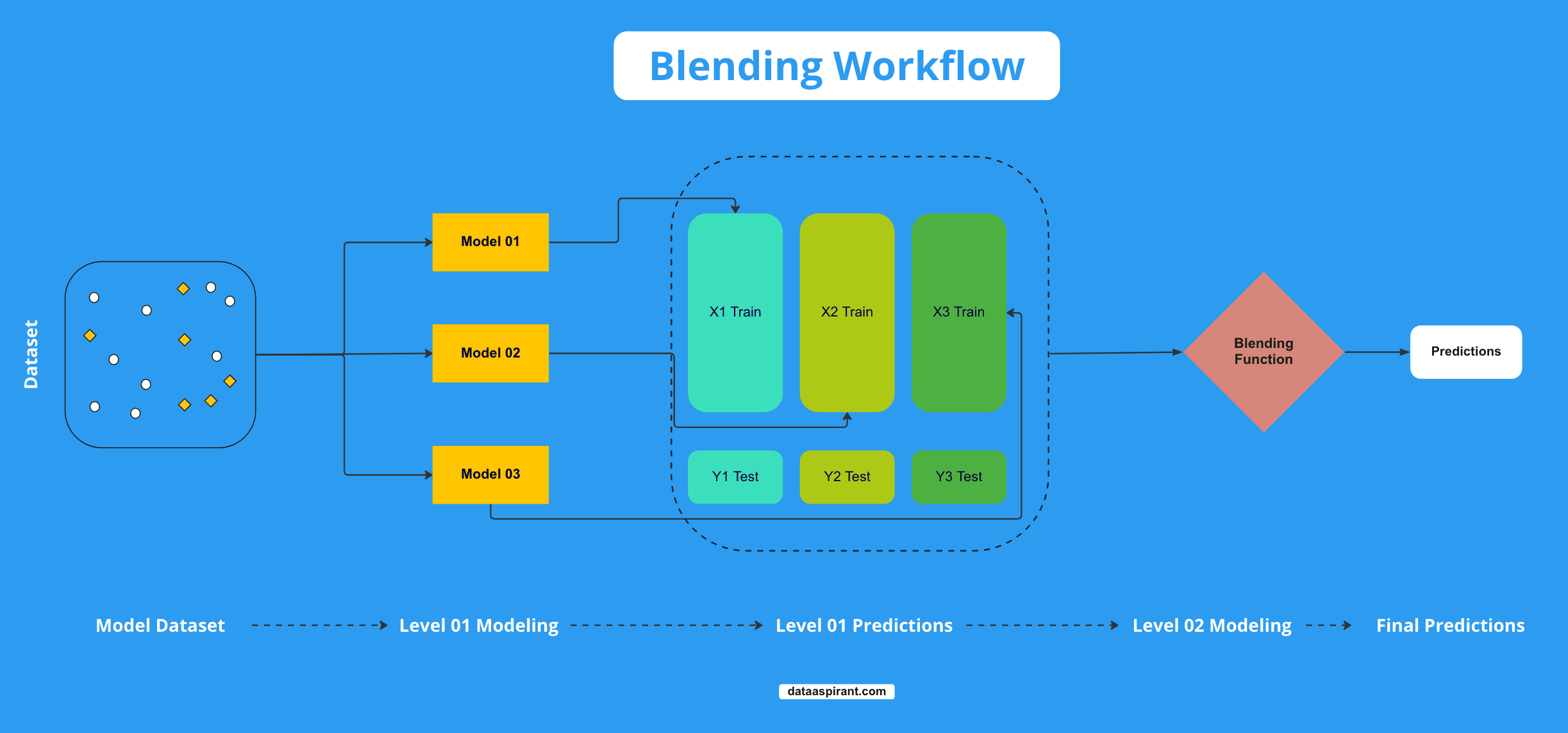

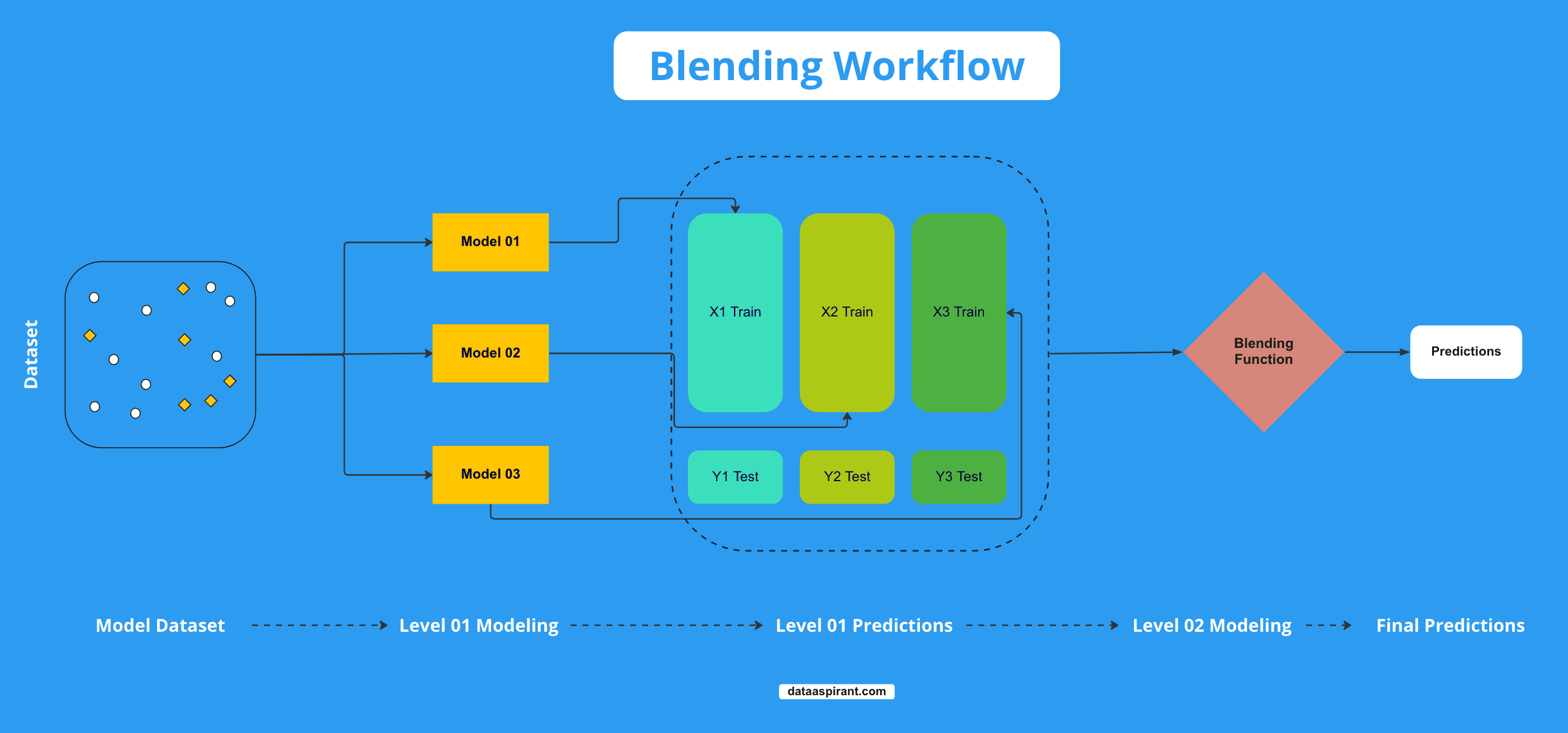

Blending Workflow

Blending is a simpler technique than stacking. In its simplest form, it trains the base models independently and combines their predictions with a weighted average, a majority vote, or another aggregation method; alternatively, as described earlier, a metamodel can be trained on the base models' validation-set predictions. The workflow for the simple form is as follows:

Split the training data into two parts: a training set and a validation set.

Train each base model on the training set.

Make predictions using the base models on the validation set and new, unseen data.

Combine these predictions using a predefined aggregation method (e.g., weighted average, majority vote) to produce the final output.
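A minimal sketch of this simpler blending workflow for a regression task, assuming scikit-learn. The dataset, the two base models, the helper name `blend_predict`, and the idea of picking the averaging weight on the validation set from a small grid are illustrative assumptions. Because the weight is tuned on the validation set, a separate test set would still be needed for an unbiased final score.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=600, n_features=8, noise=15.0, random_state=1)
# Step 1: split into a training set and a validation set.
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=1)

# Step 2: train each base model independently on the training set.
ridge = Ridge(alpha=1.0).fit(X_train, y_train)
forest = RandomForestRegressor(n_estimators=100, random_state=1).fit(X_train, y_train)

# Step 3: base-model predictions on the validation set.
p_ridge = ridge.predict(X_val)
p_forest = forest.predict(X_val)

# Step 4: weighted average; the single weight w (for Ridge) is chosen from a
# grid of 0.0, 0.1, ..., 1.0 by validation MSE (one option among many).
grid = np.linspace(0.0, 1.0, 11)
val_mse = [mean_squared_error(y_val, w * p_ridge + (1 - w) * p_forest) for w in grid]
best_w = grid[int(np.argmin(val_mse))]
print(f"Weight for Ridge: {best_w:.1f}, validation MSE: {min(val_mse):.2f}")

def blend_predict(X_new):
    """Apply the chosen weighted average to new, unseen data."""
    return best_w * ridge.predict(X_new) + (1 - best_w) * forest.predict(X_new)
```

Because the grid includes w = 0 and w = 1, the chosen blend can never score worse on the validation set than either base model alone.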

The main difference between stacking and blending lies in how the combiner of the base models' predictions is fitted. Stacking trains a meta-model on out-of-fold predictions from K-fold cross-validation, whereas blending either uses a predefined aggregation method or fits its combiner (weights or a meta-model) on a single hold-out validation set.

In summary, stacking is the more complex technique, and every training row contributes to its meta-model's training data, whereas blending is a simpler approach that relies on one hold-out split or a predefined aggregation method.

Both techniques aim to improve overall predictive performance by leveraging the strengths of multiple base models.

Using Blending Algorithm for Both Classification and Regression Problems

As discussed above, blending can be used for both regression and classification problems, and the same mechanism applies in both cases.

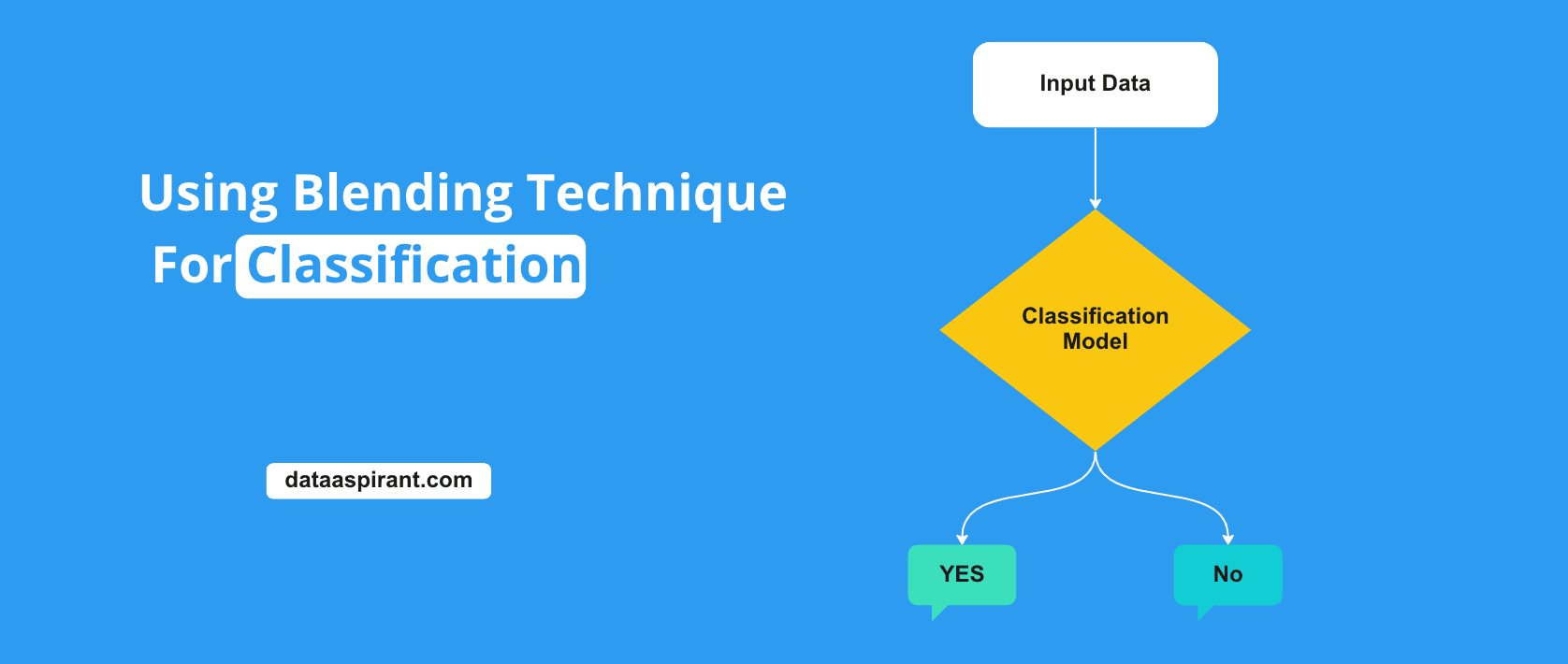

How to Use Blending Technique for Classification

In classification problems, the output or target column contains categories (labels) to classify, which may be stored as text or as numbers.

Following the blending workflow, we first split the whole dataset and feed the training portion to the base models, which are trained on it. The base classifiers can be any algorithm, such as logistic regression or decision trees.

Once the base models are trained, we take their predictions, and the final output is decided either by majority voting or by a weighted average of all the classifiers' outputs, where each classifier's output is assigned a weight.

Step 1: Importing Required Libraries

Let us import the required libraries first.

Step 2: Importing the Data

Step 3: Removing Unnecessary Data

Let us remove the unnecessary data from the dataset.

Step 4: Impute Missing Values

Let us fill in the missing values in the data.

Step 5: Encoding

Encode the categorical data present in the dataset so that it can be fed to the model.

Step 6: Train Test Split

Split the dataset into training and testing datasets.
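The dataset used in this walkthrough is not named, so the following pandas sketch of Steps 3 to 6 uses a small hypothetical DataFrame; the column names `id`, `age`, `city`, `income`, and `target` are invented for illustration. It follows the article's order of imputing before splitting for simplicity; computing the imputation statistics on the training split alone would avoid a small leak from the test rows.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data standing in for the article's dataset.
df = pd.DataFrame({
    "id": [1, 2, 3, 4, 5, 6, 7, 8],
    "age": [22, np.nan, 35, 58, np.nan, 41, 29, 33],
    "city": ["A", "B", "A", np.nan, "C", "B", "A", "C"],
    "income": [30, 45, 52, 80, 39, 61, 35, 48],
    "target": [0, 1, 0, 1, 0, 1, 0, 1],
})

# Step 3: drop columns that carry no predictive information, such as row IDs.
df = df.drop(columns=["id"])

# Step 4: impute missing values (median for numbers, most frequent value for categories).
df["age"] = df["age"].fillna(df["age"].median())
df["city"] = df["city"].fillna(df["city"].mode()[0])

# Step 5: one-hot encode the categorical column.
df = pd.get_dummies(df, columns=["city"])

# Step 6: separate features and target, then split into training and testing sets.
X = df.drop(columns=["target"])
y = df["target"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y)
```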

Step 7: Define and Train the Model

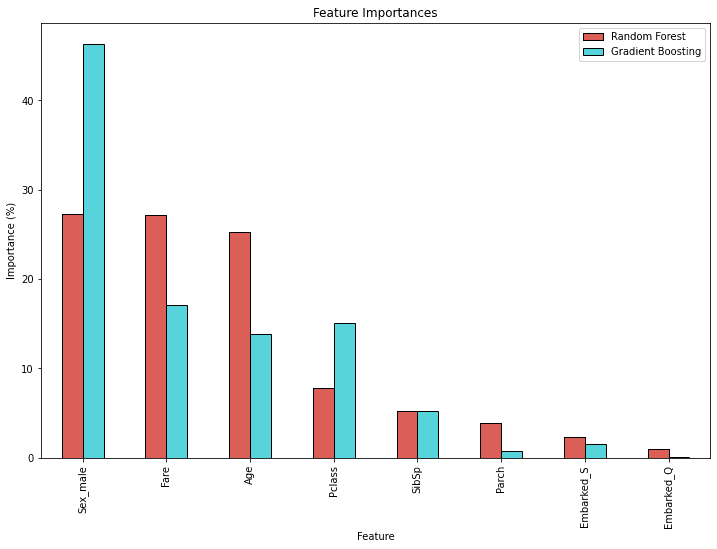

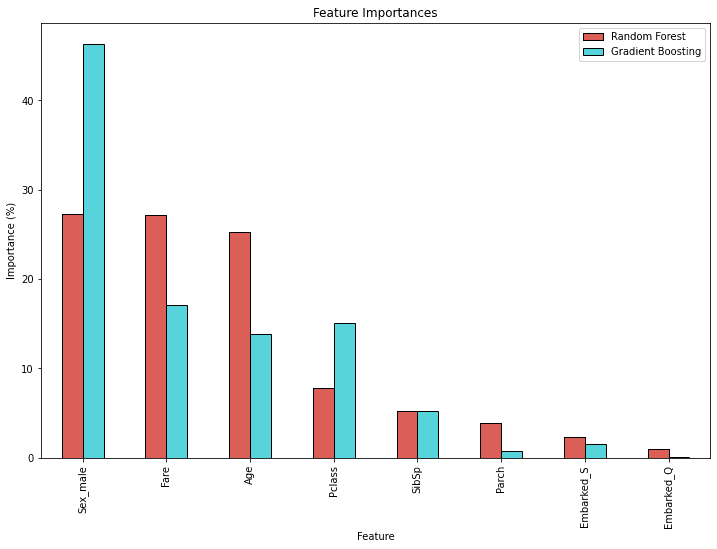

Let us take Random Forest and Gradient Boosting algorithms in the blending model.

Step 8: Define the Blending Model

Let us define the blending model with the help of the previous two algorithms.

Step 9: Accuracy Score

Let us print the accuracy of the model.
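A sketch of Steps 7 to 9, assuming scikit-learn and using a synthetic dataset in place of the preprocessed data, so the printed accuracies will differ from the scores reported in this article. Here the blend averages the two models' predicted class probabilities with equal weights, which is one reasonable choice rather than the article's exact code; scikit-learn's `VotingClassifier(voting="soft")` implements the same idea.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the preprocessed dataset from Steps 2-6.
X, y = make_classification(n_samples=800, n_features=12, n_informative=6, random_state=7)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=7)

# Step 7: define and train the two base models.
rf = RandomForestClassifier(n_estimators=200, random_state=7).fit(X_train, y_train)
gb = GradientBoostingClassifier(random_state=7).fit(X_train, y_train)

# Step 8: blend by a weighted average of class probabilities (equal weights assumed).
w_rf, w_gb = 0.5, 0.5
blend_proba = w_rf * rf.predict_proba(X_test) + w_gb * gb.predict_proba(X_test)
# Both models store classes in the same sorted order, so column i is class classes_[i].
blend_pred = rf.classes_[np.argmax(blend_proba, axis=1)]

# Step 9: accuracy of each base model and of the blend on the test set.
print("Random Forest accuracy:", accuracy_score(y_test, rf.predict(X_test)))
print("Gradient Boosting accuracy:", accuracy_score(y_test, gb.predict(X_test)))
print("Blended accuracy:", accuracy_score(y_test, blend_pred))
```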

Step 10: Feature Importance from the Blending Model

Let us try to get the feature importances of the algorithms in the blending model.
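One way to obtain a single importance score per feature for the blend is to take the same weighted average of the two models' impurity-based `feature_importances_`. The sketch below assumes equal weights; the synthetic data and the feature names are hypothetical, so it prints importances rather than the scores reported in this article.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

X, y = make_classification(n_samples=800, n_features=12, n_informative=6, random_state=7)
feature_names = [f"feature_{i}" for i in range(X.shape[1])]  # hypothetical names

rf = RandomForestClassifier(n_estimators=200, random_state=7).fit(X, y)
gb = GradientBoostingClassifier(random_state=7).fit(X, y)

# Each tree ensemble exposes impurity-based importances that sum to 1.
# Weighting them like the predictions gives one blended score per feature.
w_rf, w_gb = 0.5, 0.5
blend_importance = w_rf * rf.feature_importances_ + w_gb * gb.feature_importances_

# Show the five most important features of the blend.
for idx in np.argsort(blend_importance)[::-1][:5]:
    print(f"{feature_names[idx]}: {blend_importance[idx]:.3f}")
```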

Blending Classification Model Output:

- Random Forest Classifier score: 0.8212

- Gradient Boosting Classifier score: 0.8045

How to Use Blending Technique for Regression

The working of a blending algorithm for regression problems is very similar to that for classification problems. Since this is a regression problem, the output or target variable is numerical.

As discussed in the first step, the dataset is split into training and testing sets.

The base models are trained on the training data, and once trained, they are ready for the prediction phase: the test data is given to each of the base regressors, and each one makes its own predictions for it.

The final output is then derived from the outputs of all the regressors: either the simple average is calculated and used as the output of the blending model, or a weighted average is calculated to assign different weights to the different algorithms.

Step 1: Import Required Libraries

Importing required libraries for implementing the blending algorithm.

Step 2: Load the Dataset and Define X and Y

We will use the Boston Housing data here. Link: https://www.kaggle.com/datasets/puxama/bostoncsv

Step 3: Defining and Training the Models

Here we will use linear regression and decision trees as regressor models.

Step 4: Blending Model

Now we will define the blending logic.
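A sketch of Steps 3 and 4 with scikit-learn. Because the Kaggle CSV cannot be assumed to be available here, a synthetic dataset from `make_regression` stands in for the Boston Housing data; with the real file, you would load it with `pandas.read_csv` and build X and y from its columns. The `max_depth=5` setting for the tree is an arbitrary illustrative choice, and the MSE and MAE printed will not match the numbers reported below.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data; replace with X and y built from the Boston CSV.
X, y = make_regression(n_samples=500, n_features=13, noise=10.0, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=0)

# Step 3: define and train the two base regressors.
lin = LinearRegression().fit(X_train, y_train)
tree = DecisionTreeRegressor(max_depth=5, random_state=0).fit(X_train, y_train)

# Step 4: blending logic, here a simple (equal-weight) average of the predictions.
pred_lin = lin.predict(X_val)
pred_tree = tree.predict(X_val)
blend_pred = (pred_lin + pred_tree) / 2
# For a weighted average, use e.g. 0.7 * pred_lin + 0.3 * pred_tree instead.

print("MSE on validation set:", mean_squared_error(y_val, blend_pred))
print("MAE on validation set:", mean_absolute_error(y_val, blend_pred))
```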

Blending Regression Model Output:

- MSE on validation set: 16.659434386454944

- MAE on validation set: 2.61614579217624

Step 5: Visualization

Let us visualize the results.
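A possible visualization, assuming matplotlib, is a scatter plot of actual values versus blended predictions with a diagonal reference line; points close to the line are accurate predictions. So that it runs on its own, the block builds a synthetic stand-in dataset and a simple-average blend of linear regression and a decision tree (both illustrative assumptions); the helper name `plot_actual_vs_predicted` is also illustrative.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=500, n_features=13, noise=10.0, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=0)
lin = LinearRegression().fit(X_train, y_train)
tree = DecisionTreeRegressor(max_depth=5, random_state=0).fit(X_train, y_train)
blend_pred = (lin.predict(X_val) + tree.predict(X_val)) / 2

def plot_actual_vs_predicted(y_true, y_pred):
    """Scatter actual vs. blended predictions with a y = x reference line."""
    fig, ax = plt.subplots(figsize=(6, 6))
    ax.scatter(y_true, y_pred, alpha=0.6, label="blended predictions")
    lo = min(y_true.min(), y_pred.min())
    hi = max(y_true.max(), y_pred.max())
    ax.plot([lo, hi], [lo, hi], "r--", label="perfect prediction")
    ax.set_xlabel("Actual value")
    ax.set_ylabel("Predicted value")
    ax.set_title("Blending model: actual vs. predicted")
    ax.legend()
    return fig

fig = plot_actual_vs_predicted(y_val, blend_pred)
plt.show()
```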

Blending Key Takeaways

- Blending uses two layers of algorithms: base models and a metamodel.

- In blending algorithms, we may face data leakage problems if the metamodel is trained on the base models' predictions for the same test set that is used to evaluate the final model.

- In blending algorithms, the hold-out approach is used to prevent data leakage while training the model.

- In both stacking and blending, the base models are trained first, and then the metamodel is trained on their predictions.

- Stacking algorithms divide the training data into K parts (folds) to produce the metamodel's training data, whereas blending divides the data into three parts: a training set for the base models, a validation set for the metamodel, and a test set for the final evaluation.

Conclusion

In conclusion, blending is an effective and straightforward ensemble technique in machine learning that offers several advantages. By combining the predictions of multiple base models, blending can boost overall predictive performance, reduce overfitting, and capitalize on the strengths of diverse algorithms.

Despite its simplicity compared to other ensemble methods like stacking, blending has proven to be a valuable tool in various real-world applications, including data science competitions and industry projects.

It is crucial to carefully select and fine-tune the base models and their aggregation method to achieve optimal results.

As machine learning continues to evolve, blending is likely to remain a popular and widely used approach for harnessing the power of multiple models.

By experimenting with blending and adapting it to specific tasks, practitioners can unlock new levels of performance and robustness in their machine learning solutions.
