Adaboost Algorithm: Boosting your ML models to the Next Level

Adaboost algorithm, short for Adaptive Boosting, is a boosting algorithm that has been widely used in various applications, including computer vision, natural language processing, and fraud detection.

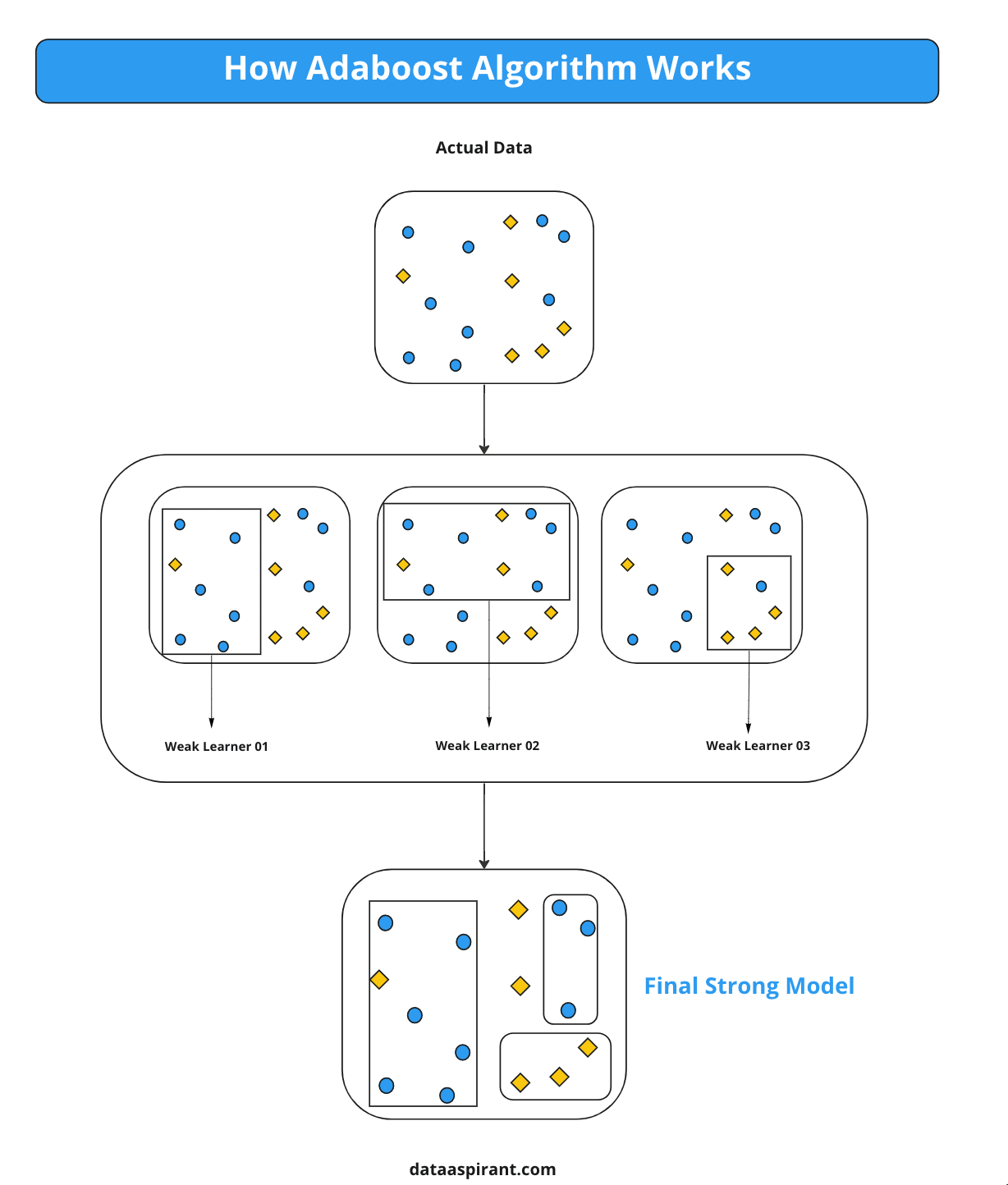

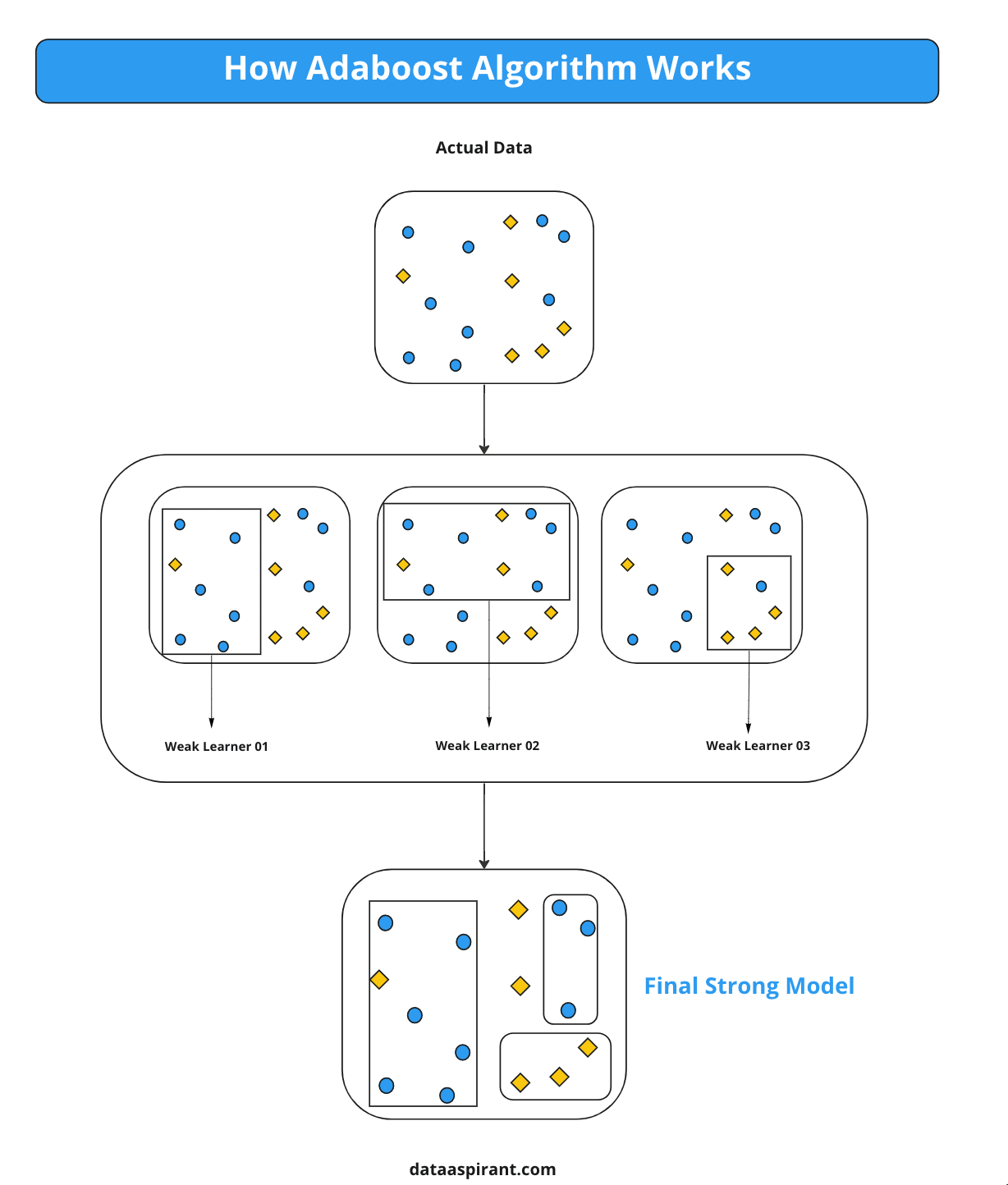

The Adaboost algorithm works by iteratively training weak learners, such as decision trees or linear models, on a dataset and assigning weights to each training instance based on its classification error.

The algorithm then combines the weak learners to create a strong learner that can accurately classify new, unseen data.

How Adaboost Algorithm works

One of the main advantages of Adaboost is its ability to handle complex data and feature interactions. It can also prevent overfitting by using a weighted combination of weak learners.

Additionally, Adaboost has been shown to have high accuracy and is relatively easy to implement.

If you're interested in boosting techniques and want to improve the performance of your machine learning models, learning about Adaboost is definitely worth your time.

This article will explore the AdaBoost algorithm. We will discuss how Adaboost works, its real-world applications, and its advantages and disadvantages.

We will also provide tips for using Adaboost effectively and avoiding common pitfalls. Finally, we will discuss the potential future of Adaboost in machine learning.

What is Adaboost Algorithm, and Why is it Important?

Adaboost (short for Adaptive Boosting) is a popular boosting algorithm used in machine learning to improve the accuracy of binary classification models.

Boosting is a technique that combines multiple weak learning algorithms to create a strong learner that can make accurate predictions. Adaboost is particularly useful when complex relationships exist between the input features and the output variable.

Adaboost works by sequentially training a series of weak classifiers on weighted versions of the training data. The weights are adjusted after each iteration to give more importance to misclassified data points.

The final model combines the weak classifiers using a weighted sum to make predictions on new data.

Adaboost has become an important tool in machine learning due to its ability to improve classification models' accuracy significantly.

It has been successfully applied in various fields, such as computer vision, natural language processing, and bioinformatics. Adaboost is relatively easy to implement and can be used with a wide range of weak learning algorithms.

How Adaboost Works: Understanding the Algorithm

Adaboost works by combining multiple weak classifiers to create a strong learner that can accurately classify new data.

This section will provide an overview of the Adaboost Algorithm and explain its step-by-step process.

Boosting Algorithms Overview

Boosting is a family of machine learning algorithms combining several weak models to create a stronger one. Weak models are classifiers that perform only slightly better than random guessing, but when combined, they can produce highly accurate predictions.

Several boosting algorithms exist, such as

- Adaboost,

- Gradient Boosting,

- XGBoost.

Each algorithm has its unique characteristics and applications.

For example, Adaboost is suitable for binary classification problems, while Gradient Boosting is more suitable for regression problems. XGBoost is a scalable and efficient boosting algorithm that has been successful in various machine learning competitions.

They have been successfully applied in various fields, such as

- Finance,

- Healthcare,

- Natural language processing.

Boosting algorithms can also be used with a variety of weak learning models, such as

This flexibility makes boosting algorithms a versatile tool for improving the accuracy of machine learning models.

Adaboost Algorithm Intuition and Theory

Adaboost Algorithm is a powerful machine learning technique that combines multiple weak classifiers to create a strong learner. The idea behind Adaboost is simple yet effective.

It focuses on misclassified examples and adjusts the weights of the training data to prioritize these examples. By doing so, the algorithm can learn from the mistakes made by the weak classifiers and produce a strong model that can generalize well on new data.

The Adaboost Algorithm works by iteratively training a series of weak classifiers on weighted versions of the training data.

Each weak classifier is trained to classify the examples as either positive or negative. After each iteration, the weights of the misclassified examples are increased to give them more importance in the next iteration.

This process continues until a predetermined number of weak classifiers are trained or a specified threshold is reached.

Adaboost can be used with a variety of weak classifiers, such as decision trees or linear models. The choice of a weak classifier depends on the problem's nature and the data's characteristics.

The key idea is to choose weak classifiers that perform slightly better than random guessing. The algorithm then combines the weak classifiers to produce a strong model that can make accurate predictions on new data.

The training process of AdaBoost is relatively simple and can be implemented on any dataset. The biggest advantage of this algorithm is its ability to handle noisy data and outliers.

It is also less prone to overfitting than other algorithms such as support vector machines. Additionally, the algorithm is easy to interpret and can be used to generate insights about the data.

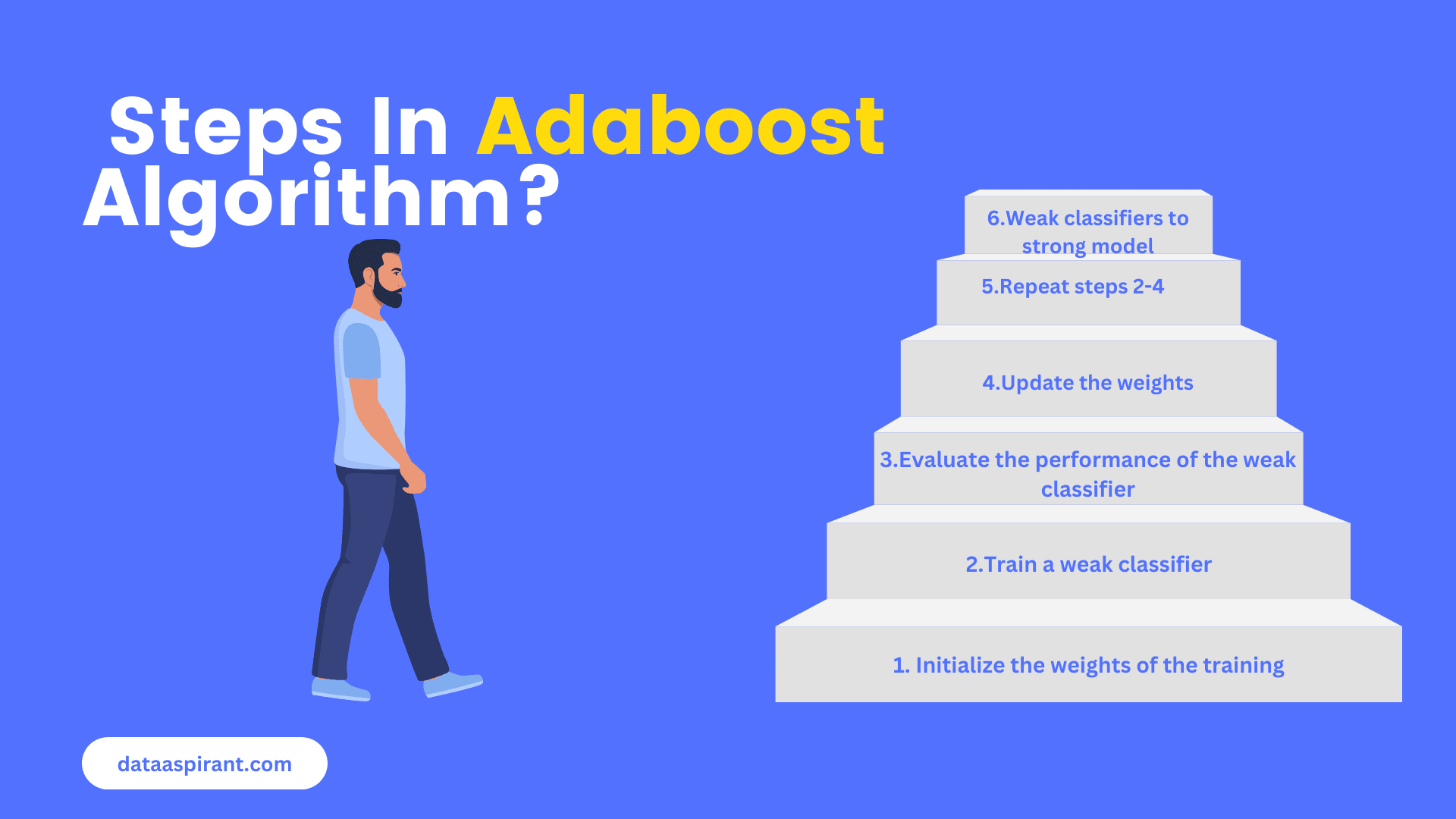

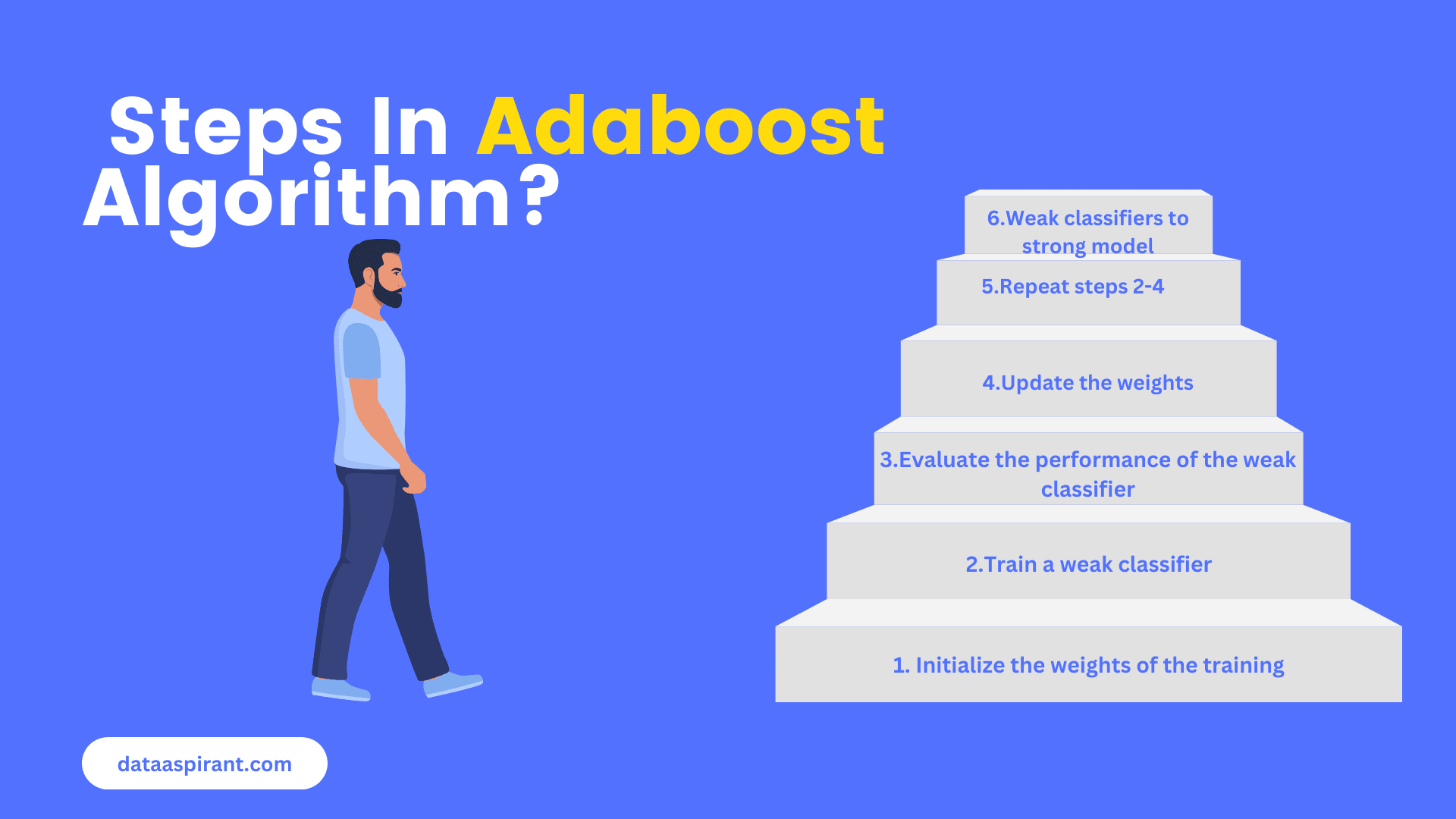

Adaboost Algorithm Step-by-Step Process

The popular AdaBoost Algorithm consists of several steps that are repeated iteratively to train a series of weak classifiers and combine them into a strong model. The following is a step-by-step process of Adaboost:

Initialize the weights of the training examples: The first step is to assign equal weights to all the training examples. These weights are used to emphasize the misclassified examples in the subsequent iterations.

Train a weak classifier: A weak classifier is trained on the weighted training data in each iteration. The weak classifier aims to classify the examples as positive or negative. A variety of weak classifiers can be used, such as decision trees, linear models, or support vector machines.

Evaluate the performance of the weak classifier: Once the weak classifier is trained, its performance is evaluated on the training data. The misclassified examples are given higher weights to prioritize them in the next iteration.

Update the weights of the training examples: The weights of the training examples are updated based on their classification by the weak classifier. The weights of the misclassified examples are increased, while the weights of the correctly classified examples are decreased. This ensures that the weak classifier focuses on the challenging examples.

Repeat steps 2-4 for a predetermined number of iterations: The previous steps are repeated for a specified number of iterations, or until a threshold is reached. The weights of the training examples are adjusted in each iteration to prioritize the misclassified examples and learn from the mistakes made by the weak classifier.

Combine the weak classifiers into a strong model: After the specified number of iterations, the weak classifiers are combined into a strong model using a weighted sum of their outputs. The final model can make accurate predictions on new, unseen data.

Adaboost can be implemented using various programming languages and libraries, such as Python and scikit-learn.

The number of iterations and the choice of weak classifier are hyperparameters that can be tuned to improve the performance of the model.

Adaboost is a powerful algorithm that can significantly improve the accuracy of machine learning models, especially in complex tasks where the relationships between the input features and the output variable are not straightforward.

Adaboost Real-World Applications

Adaboost Algorithm has been successfully applied in various real-world applications. In this section, we will explore the real-world applications of Adaboost in these domains and discuss how it has been used to improve the performance of machine learning models

Image Classification with Adaboost

Image classification is the process of categorizing images into different classes or categories based on their visual content. Adaboost algorithm can be used to enhance the performance of image classification models by combining weak classifiers to create a stronger one.

In this code snippet, we first load the iris dataset using the load_iris function from sklearn.datasets. We then split the dataset into training and testing sets using the train_test_split function from sklearn.model_selection.

We create a decision tree classifier as a weak classifier using the DecisionTreeClassifier class from sklearn.tree.We then create an AdaBoost classifier with 100 estimators using the AdaBoostClassifier class from sklearn.ensemble. We specify the dt_clf decision tree classifier as the base estimator for the AdaBoost classifier.

Next, we train the AdaBoost classifier on the training data using the fit method. Finally, we evaluate the performance of the model on the testing data using the scoring method and print the accuracy score.

Text Classification with Adaboost

Text classification is the process of assigning text documents to one or more predefined categories or labels based on their content. It is a common task in natural language processing and has numerous applications, such as sentiment analysis, spam detection, and topic modelling.

Adaboost Algorithm can be used to improve the accuracy of text classification models by combining multiple weak classifiers into a strong one, which can effectively identify the relevant features and patterns in the text data.

In this example, we load the 20 Newsgroups dataset and extract features from the text data using CountVectorizer. We then train an Adaboost Classifier on the training data and test it on the test data. Finally, we print the accuracy of the classifier on the test data.

Medical Diagnosis with Adaboost

Adaboost is a powerful machine learning algorithm that can be used for medical diagnosis. It is a boosting technique which combines multiple weak learners to create a stronger and more accurate classifier.

Adaboost is used in medical diagnosis to help healthcare professionals identify diseases more accurately and quickly.

It can also be used to analyze medical images to detect abnormalities, aiding in early detection and treatment of various diseases.

Adaboost is a powerful tool that can help healthcare professionals make more informed decisions about diagnosis, treatment and care for patients.

This code is using the AdaBoostRegressor algorithm to predict the severity of diabetes using the diabetes dataset available in sklearn.

It starts by importing the necessary libraries and loading the diabetes dataset. Then, the dataset is split into training and test sets. Next, the adaboost regressor is created and fitted to the training data. Finally, the accuracy of the model is calculated and printed.

Advantages and Disadvantages of the Adaboost Algorithm

By leveraging the power of ensemble learning, Adaboost has become a widely used algorithm in the field of machine learning.

In this section, we will discuss some of the main advantages and disadvantages of Adaboost and how they can impact its performance in real-world applications.

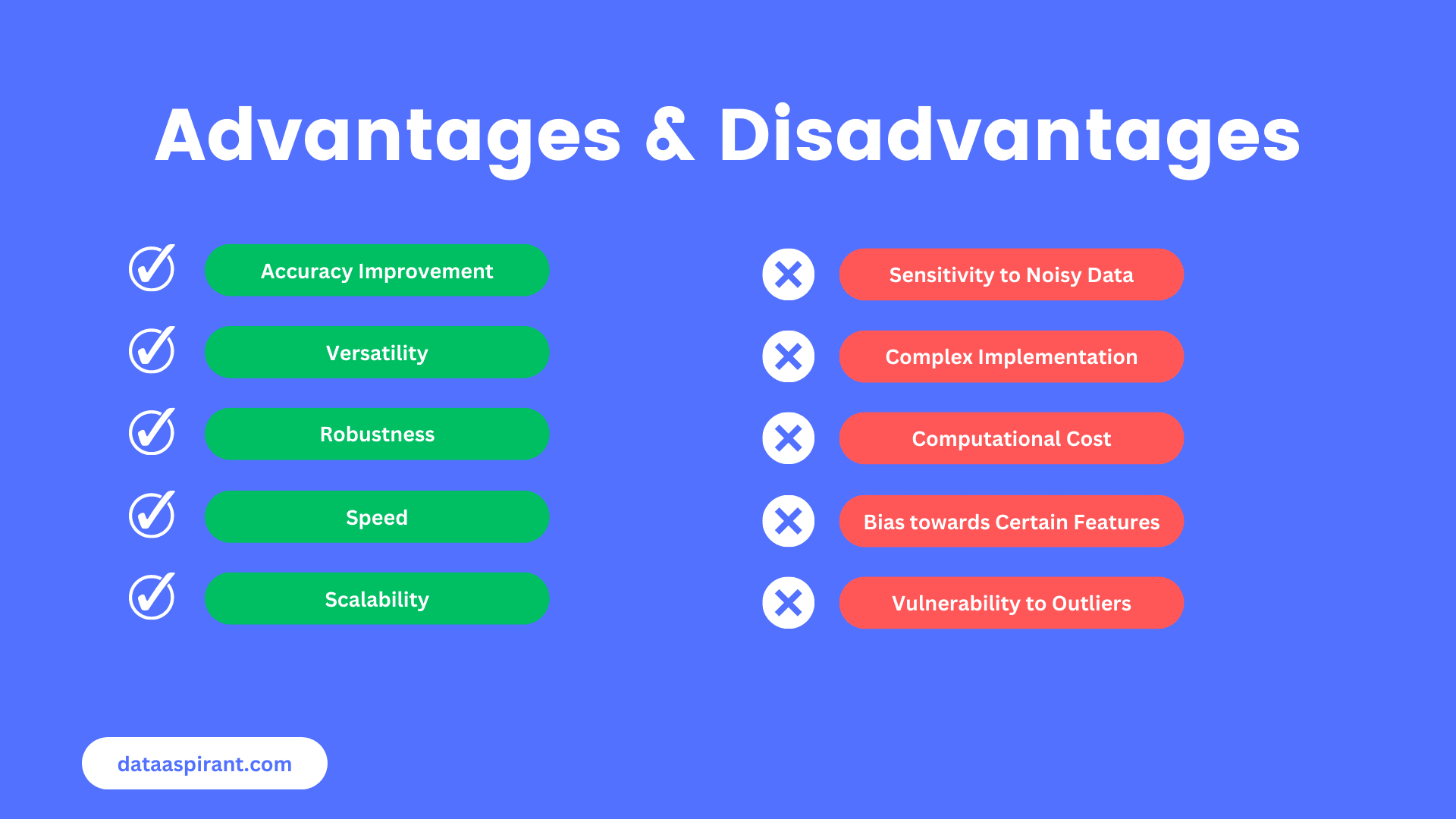

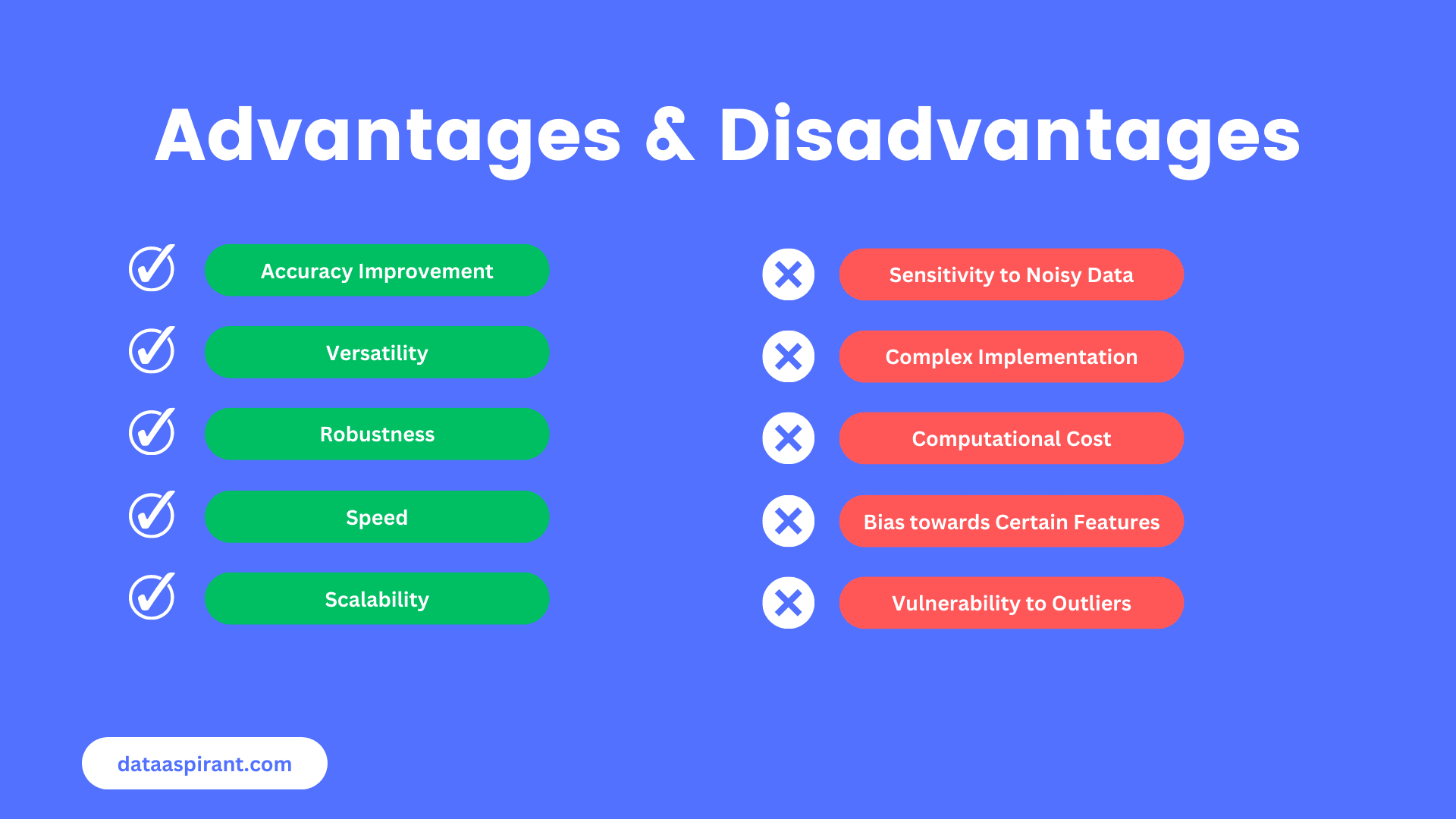

Advantages

- Improved Classification Accuracy: Adaboost is great at improving the accuracy of classification models by combining multiple weak learners into strong ones. This means you can get better results than using a single model alone.

- Versatility: Adaboost can work with different types of data and models. So, whether you're working with decision trees, neural networks, or support vector machines, Adaboost can adapt and improve their accuracy.

- Robustness: Adaboost is less likely to overfit than other machine learning algorithms. This is because Adaboost adjusts the weights of misclassified samples, which helps to reduce overfitting and increase accuracy.

- Speed: Adaboost is a relatively fast and efficient algorithm. Compared to other ensemble methods like bagging and random forests, Adaboost can be much faster.

- Scalability: Adaboost can handle large and complex datasets. So, if you're working with big data, Adaboost is a great choice. Interpretability: Adaboost is easy to interpret and explain. It combines simple models to form a more complex one, which helps you better understand the patterns in your data.

Disadvantages

- Sensitivity to Noisy Data: Adaboost is sensitive to noisy data. This means that if your dataset has a lot of errors, Adaboost can produce inaccurate results.

- Complexity of Implementation: Adaboost can be challenging to implement and train, especially for beginners. It requires careful parameter tuning, and getting the settings right can be difficult.

- Computational Cost: Adaboost can be computationally expensive, especially when working with large datasets and complex models. This means it may take longer to train and may require more resources.

- Bias towards Certain Features: Adaboost may give more weight to features that are highly correlated with the target variable. This can cause bias in the model, which can lead to inaccurate results.

- Lack of Transparency: Adaboost can be easy to interpret, but the individual weak learners that make up the ensemble can be difficult to understand. This makes it hard to diagnose and fix errors in the model.

- Vulnerability to Outliers: Adaboost is vulnerable to outliers and anomalies. This means that if your dataset contains extreme values, Adaboost may produce inaccurate results.

How to Evaluate Adaboost Algorithm

While Adaboost is a popular and effective algorithm, it's important to evaluate its performance to ensure that it's producing accurate and reliable results. Several metrics can be used to evaluate the performance of an Adaboost model:

Accuracy

This is the most common metric used to evaluate a classification model. Accuracy measures the proportion of correct predictions made by the model.

Precision and Recall

Precision and recall are two metrics that are often used together to evaluate the performance of a classification model.

Precision measures the proportion of true positives (i.e., correct predictions) among all positive predictions made by the model. Recall measures the proportion of true positives among all actual positive instances in the dataset.

F1 Score

The F1 score is a weighted average of precision and recall. It provides a single score that summarizes the overall performance of a model.

Confusion Matrix

A confusion matrix is a table that summarizes the performance of a classification model. It shows the number of true positives, true negatives, false positives, and false negatives for each class in the dataset.

ROC Curve

The Receiver Operating Characteristic (ROC) curve is a plot of the true positive rate (TPR) versus the false positive rate (FPR) for different classification thresholds. It provides a way to evaluate the performance of a classification model across different thresholds.

When evaluating an Adaboost model, it's important to consider the specific problem domain and the goals of the model. For example, in some cases, maximizing precision may be more important than maximizing recall, while the opposite may be true in other cases.

In addition to these metrics, it's also important to evaluate the generalization performance of an Adaboost model. This can be done by using techniques such as cross-validation, where the model is trained on a subset of the data and tested on the remaining data.

This helps to ensure that the model is not overfitting to the training data and can generalize well to new, unseen data.

Adaboost is a powerful algorithm that can be used for a wide range of classification problems. However, in order to use it effectively, it's important to understand the various evaluation metrics that can be used to assess its performance.

Using the appropriate evaluation metrics, you can identify areas where your model can be improved and make better-informed decisions about optimising it.

Overall, by evaluating the performance of an Adaboost model using a combination of metrics and ensuring that the model generalizes well to new data, you can ensure that your model is accurate, reliable, and effective for your specific problem domain.

Tips for Using Adaboost: Best Practices and Common Pitfalls

Adaboost is a powerful machine learning algorithm that can improve the accuracy of machine learning models.

However, to get the most out of Adaboost, following some best practices and avoiding common pitfalls is important. In this section, we will look at some of them.

Feature Selection

Choosing features is a crucial step in the Adaboost process because it lessens model complexity and boosts the reliability of the output. Including too many features can result in excessive customization and subpar performance, which is a common mistake.

Therefore, the most pertinent features to the current issue should be carefully chosen.

To accomplish this, one method is to use feature engineering techniques or domain knowledge to determine the most crucial features.

A different strategy is to employ a feature selection algorithm like this: B. whether to choose features based on mutual information or correlation. The procedure for choosing the best features can be automated.

It is crucial to keep in mind that various data types and issues may respond better to various feature selection methodologies.

Therefore, it's crucial to test out various approaches and assess their effectiveness using cross-validation or other methods. By selecting the appropriate features, you can increase your Adaboost model's accuracy and stay clear of common pitfalls.

Choosing the Right Weak Learner

Choosing the right weak learner is crucial for the success of Adaboost. The weak learner should be simple enough to be easily trained but not too weak that it can't classify the data better than random guessing.

Common weak learners used in Adaboost include decision trees, neural networks, and support vector machines. The choice of a weak learner often depends on the type of data and the problem at hand.

It's also important to avoid using a weak learner that is too complex, as this can lead to overfitting and poor generalization. One approach to selecting the right weak learner is performing a grid search over a range of possible models, evaluating each using cross-validation or another performance metric.

Choosing the right weak learner ensures that your Adaboost model performs best.

Avoid Overfitting

Overfitting is a common pitfall when using Adaboost. This occurs when the model is too complex and captures noise in the data rather than the underlying patterns.

To avoid overfitting, using techniques like cross-validation to estimate the model's generalisation performance is important.

Cross-validation involves splitting the data into training and testing sets and evaluating the model on the testing set to estimate its performance on unseen data.

Another approach to avoiding overfitting is to regularize the model. Adding a penalty term to the objective function encourages the model to be simple and avoid overfitting.

In Adaboost, regularization can be achieved by limiting the number of weak learners used or by adding a penalty term to the weights of the weak learners. It's also important to ensure that the weak learners are not too complex, as this can also lead to overfitting.

By avoiding overfitting, you can ensure that your Adaboost model generalizes well to unseen data and achieves the best possible performance.

Dealing with Class Imbalance

Dealing with class imbalance is a common challenge when using Adaboost. Class imbalance occurs when one class has significantly fewer examples than the other, which can lead to biased models that perform poorly on the minority class.

One approach to dealing with class imbalance is to oversample the minority class or undersample the majority class. This can help balance the data and improve the model's performance on the minority class.

Another approach is to use cost-sensitive learning, which involves assigning different costs to misclassifications on different classes. In Adaboost, this can be achieved by assigning higher weights to examples in the minority class or by adjusting the decision threshold to favour the minority class.

It's also important to use evaluation metrics that are sensitive to class imbalance, such as precision, recall, and F1 score, rather than accuracy. By addressing the class imbalance, you can improve the performance of your Adaboost model and avoid common pitfalls.

Conclusion: The Future of Adaboost Algorithm in Machine Learning

Adaboost is a powerful and widely used machine learning algorithm that shows great potential for solving various classification problems.

As machine learning continues to advance, Adaboost will continue to be a popular choice because it can combine simple and weak learners into powerful ensemble classifiers. However, there are still many areas in need of research and development.

Potential Developments and Research Directions

Deep learning integration: Adaboost has traditionally been used with shallow learning models like decision trees, but there is potential for integrating it with deep learning models. This could lead to improved performance and new applications in areas like computer vision and natural language processing.

Online learning: Adaboost was originally designed for batch learning, where all the training data is available upfront. However, there is growing interest in online learning, where the model is trained incrementally as new data arrives. Adaboost could be adapted to this setting to handle streaming data and changing distributions.

Multi-class classification: Adaboost has primarily been used for binary classification, but there is potential for extending it to multi-class classification problems. This could involve using different strategies for combining weak learners or adapting the algorithm to handle more complex decision boundaries.

Explainability: Adaboost can sometimes be difficult to interpret due to its ensemble nature. There is potential for developing new techniques for visualizing and explaining Adaboost models, which could be useful in areas like healthcare and finance.

Final Thoughts and Recommendations

Adaboost is a robust and widely used machine learning algorithm that shows great potential for solving various classification problems.

As machine learning continues to advance, Adaboost will continue to be a popular choice because it can combine simple and weak learners into powerful ensemble classifiers. However, there are still many areas in need of research and development.

Recommended Courses

Machine Learning Course

Rating: 4/5

Deep Learning Course

Rating: 3/5

NLP Course

Rating: 5/5